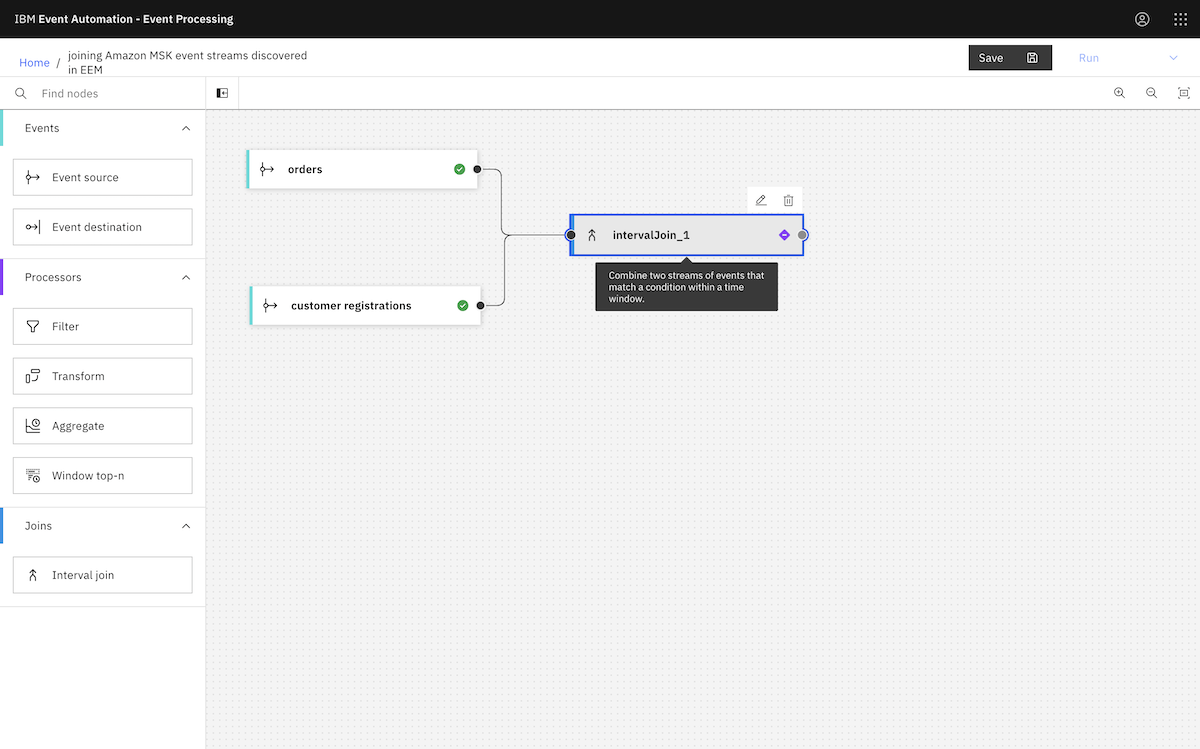

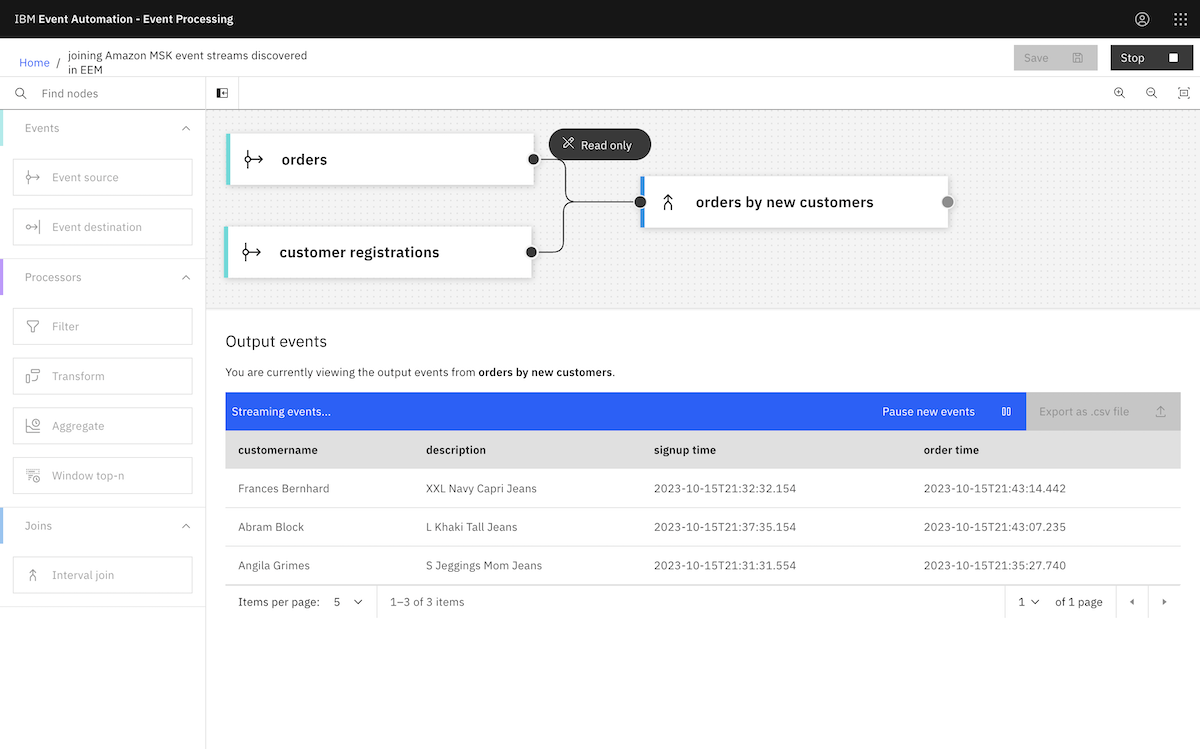

To start with, we demonstrated how Event Processing can be used with Amazon MSK. We showed how Event Processing, based on Apache Flink, can help businesses to identify insights from the events on their MSK topics through an easy-to-use authoring canvas.

The diagram above is a simplified description of what we created. We created an MSK cluster in AWS, set up a few topics, and then started a demonstration app producing a stream of events to them. This gave us a simplified example of a live MSK cluster.

We then accessed this Amazon MSK cluster from an instance of Event Processing (that was running in a Red Hat OpenShift cluster in IBM Cloud). We used Event Processing to create a range of stateful stream processing flows.

This showed how the events on MSK topics can be processed where they are, without requiring an instance of Event Streams, or requiring the topics to be mirrored into a Kafka cluster that is also running in OpenShift. Being able to use the low-code authoring canvas with the Kafka topics that you already have, wherever they are, is a fantastic enabler for event-driven architecture.

How we created a demonstration of this...

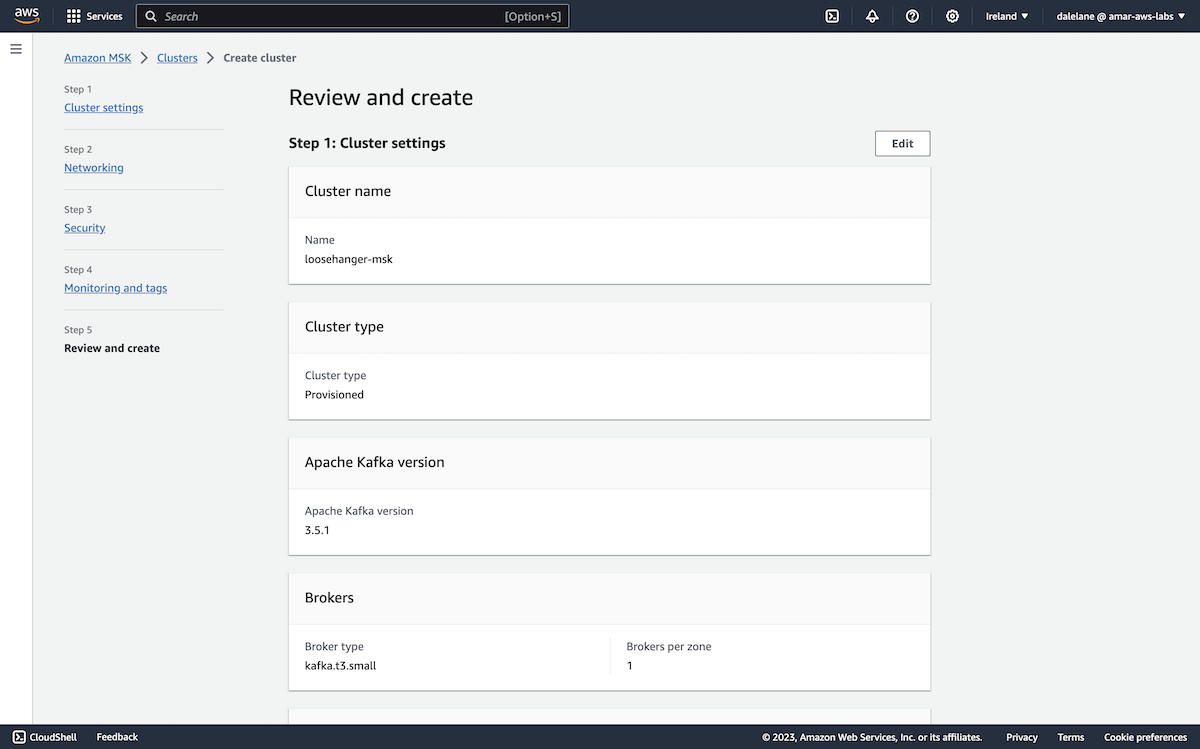

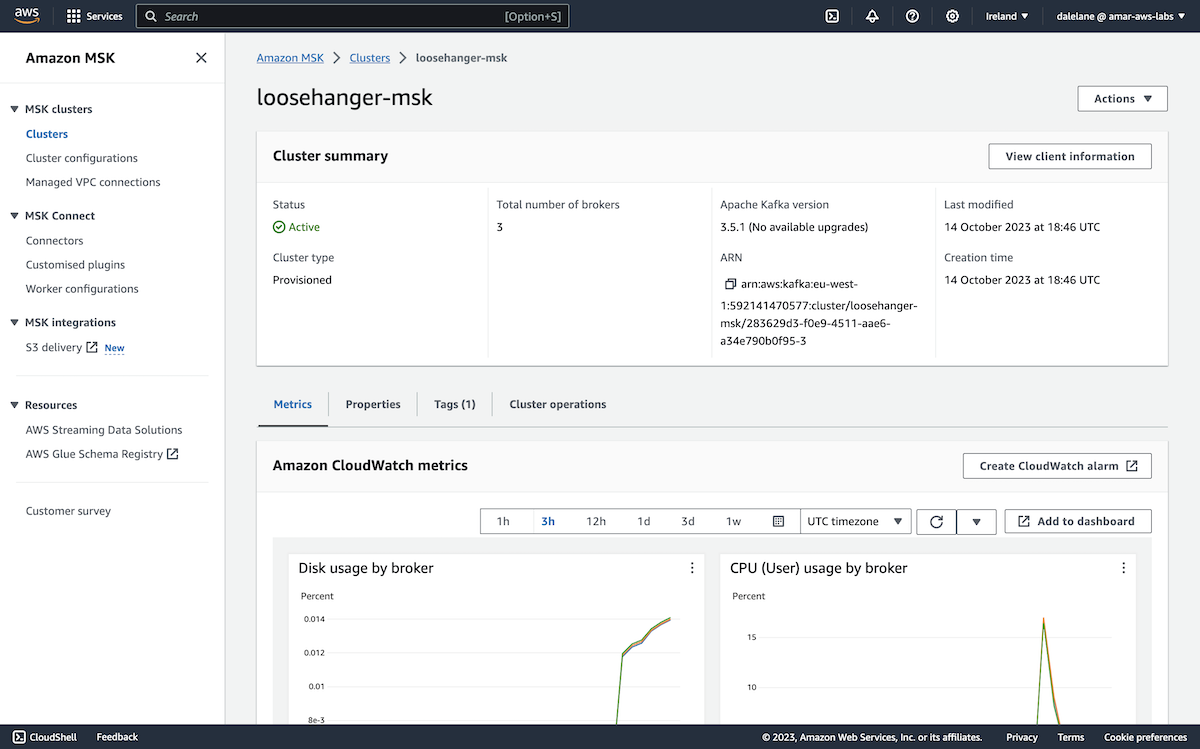

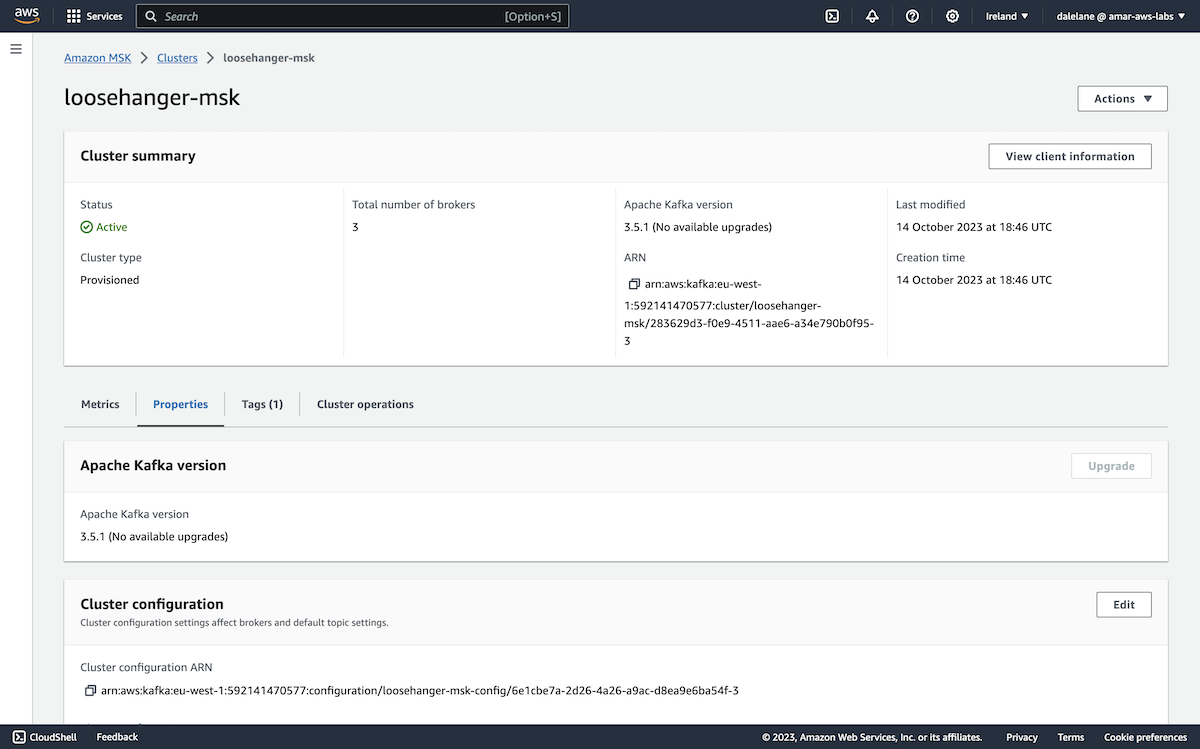

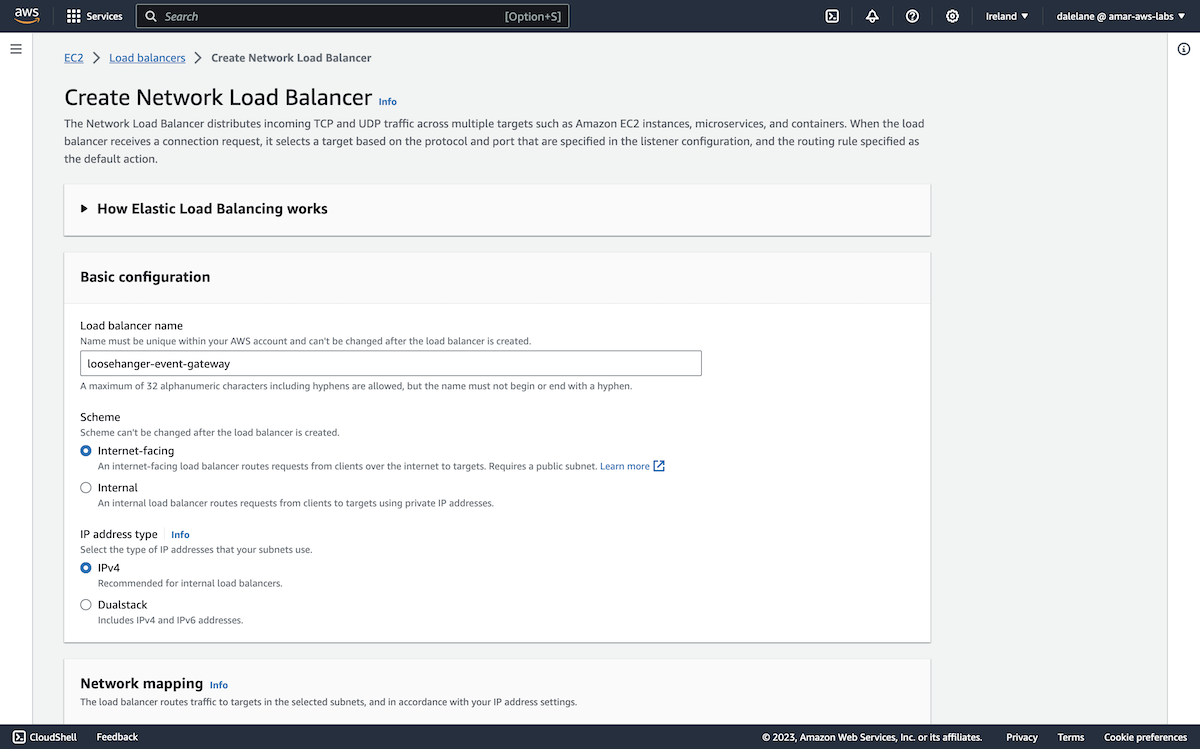

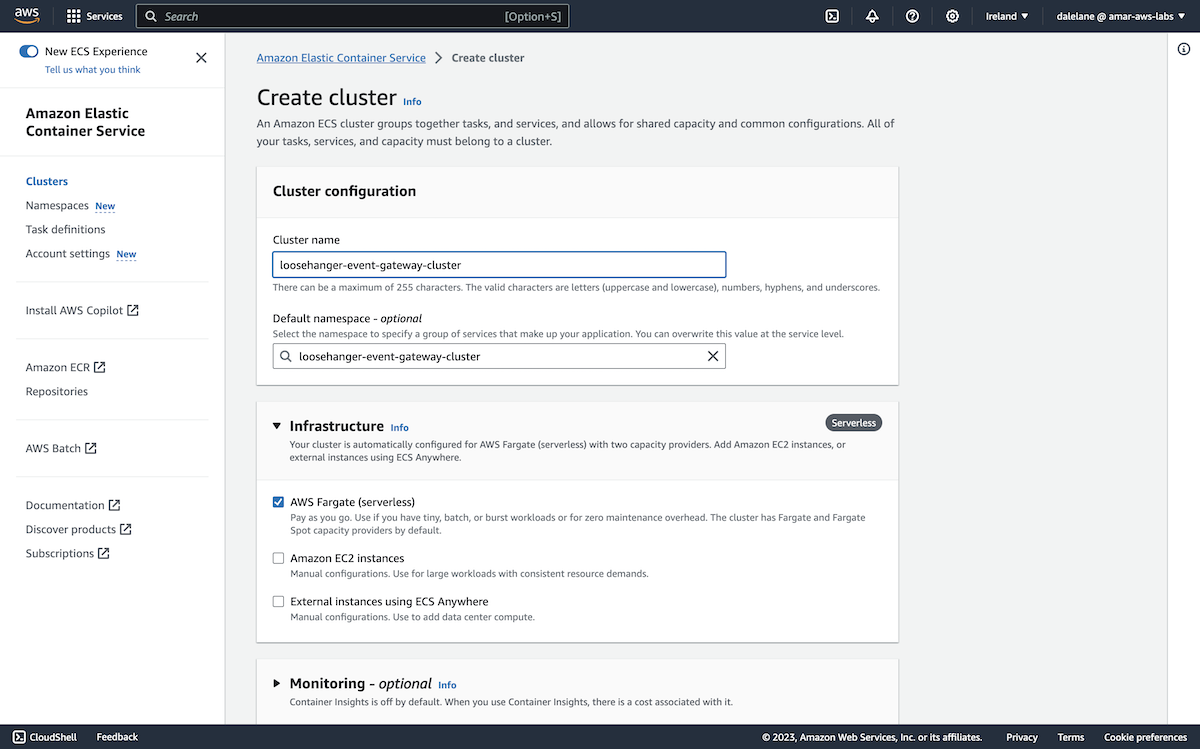

We started by creating an Amazon MSK cluster.

We opened the Amazon Web Services admin console, and went to the MSK (Managed Streaming for Apache Kafka) service.

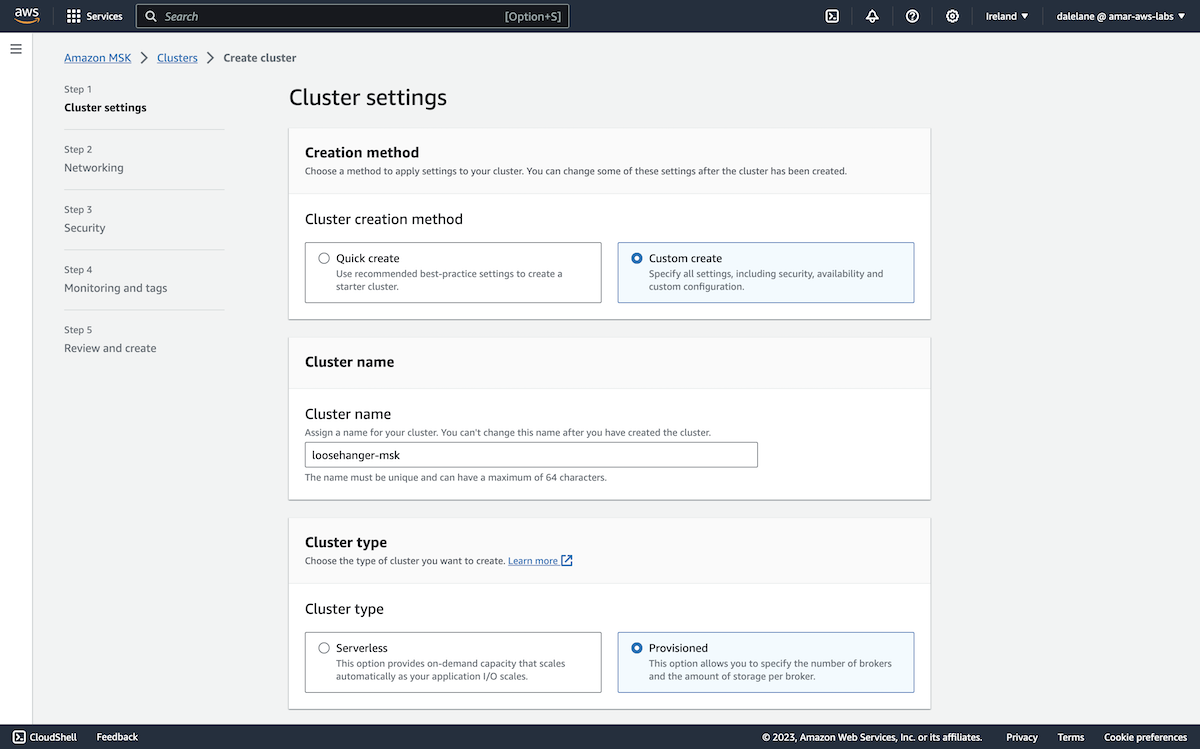

To start, we clicked Create cluster.

We opted for the Custom create option so we could customize our MSK cluster.

We called the cluster loosehanger-msk because we're basing this demonstration on "Loosehanger" - a fictional clothes retailer that we have a data generator for.

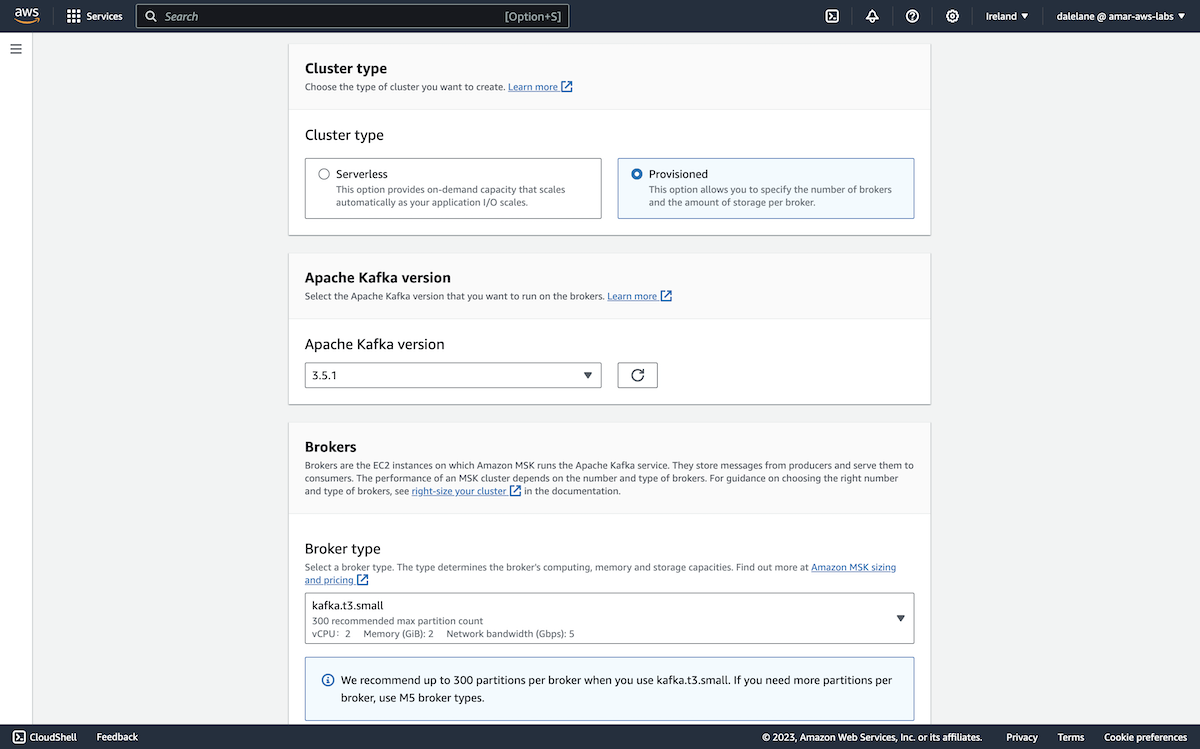

We chose a Provisioned (rather than serverless) Kafka cluster type, and chose the latest version of Kafka that Amazon offered at the time (3.5.1).

Because we only needed an MSK cluster for this short demo, we went with the small broker type.

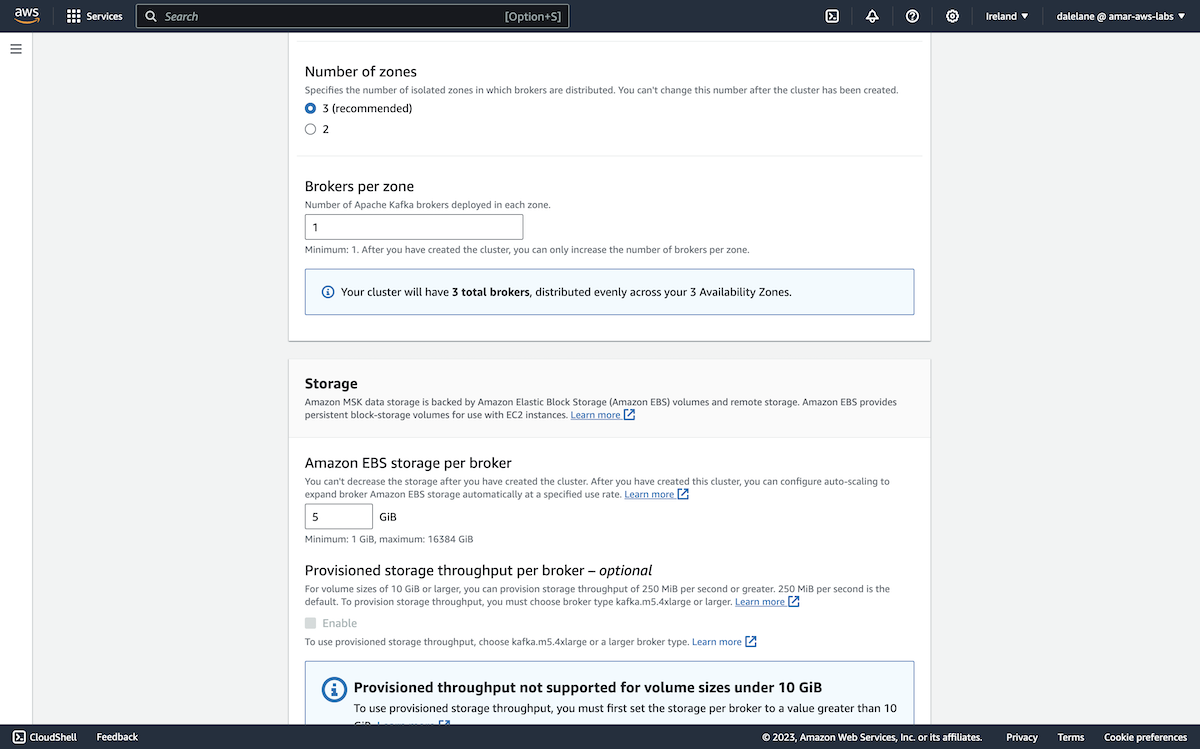

We went with the default, and recommended, number of zones to distribute the Kafka brokers across: three.

Because we only planned to run a few applications with a small number of topics, we didn't need a lot of storage - we gave each broker 5GB of storage.

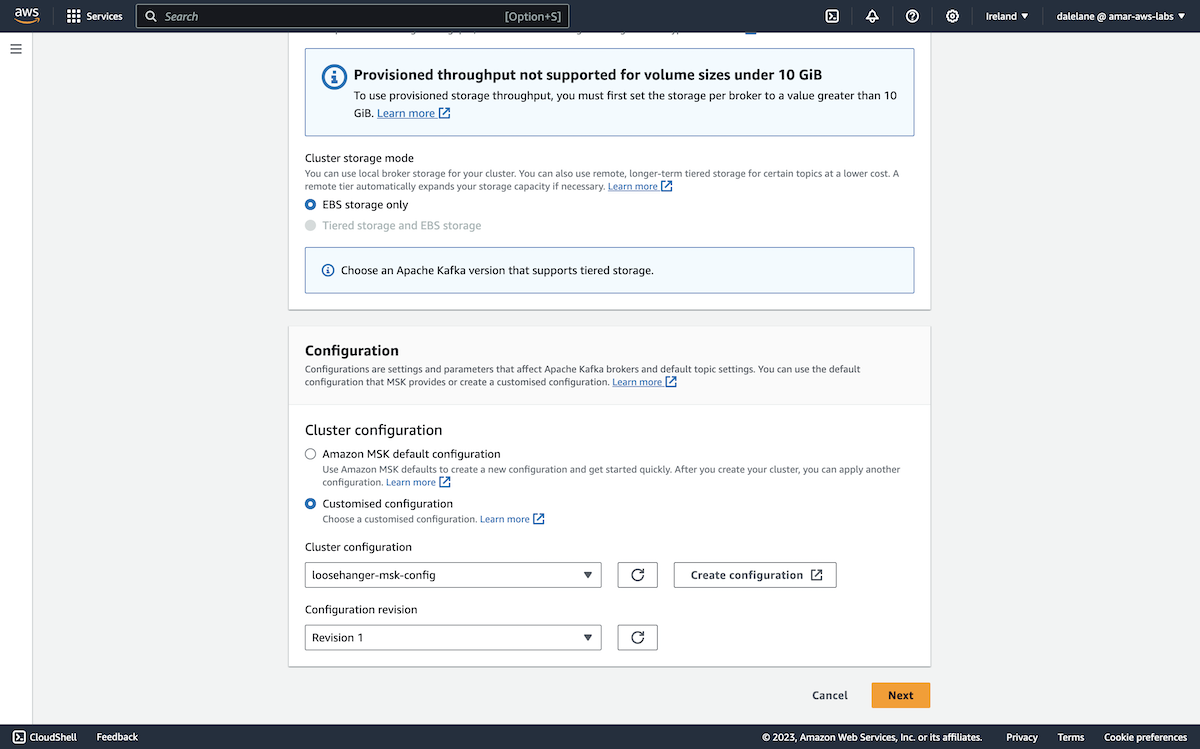

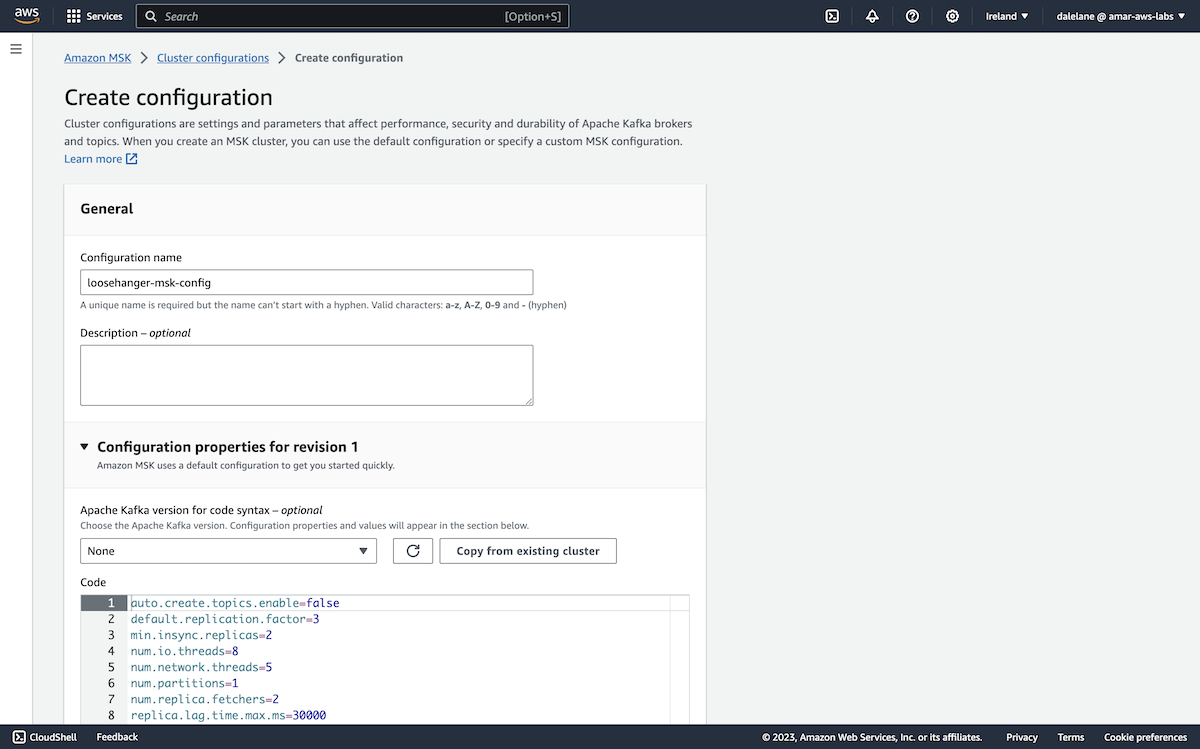

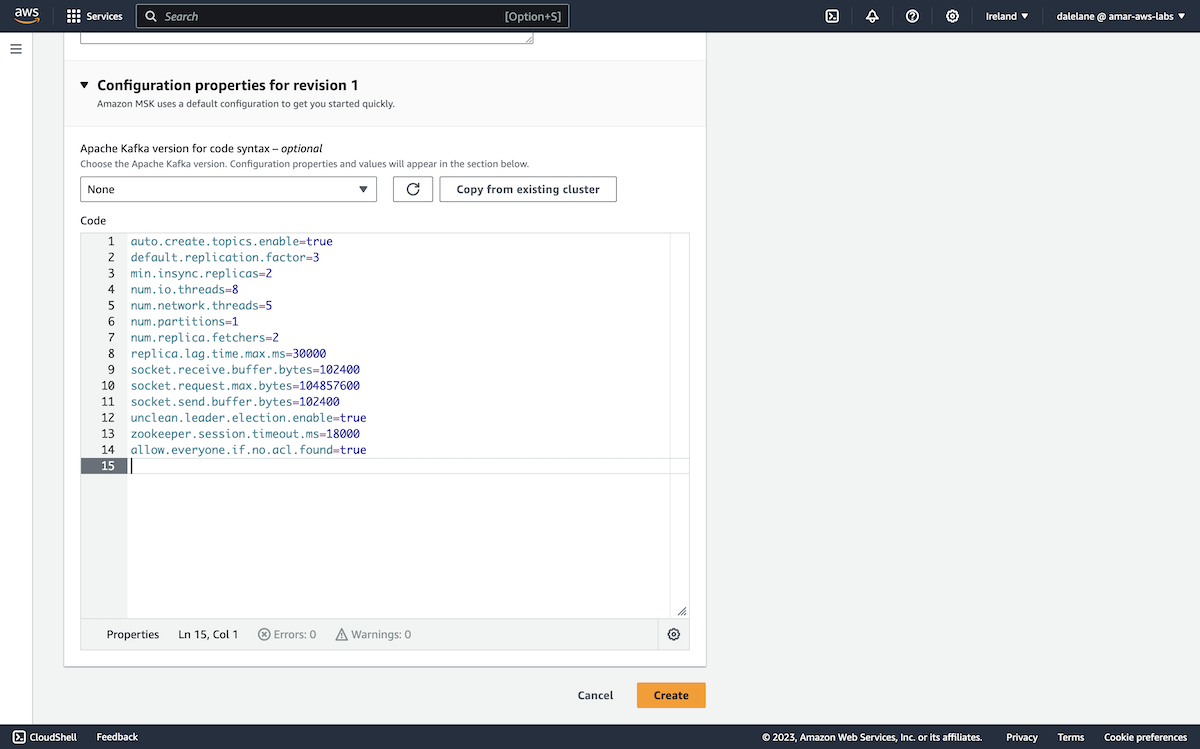

Rather than go with the default Kafka configuration, we clicked Create configuration to prepare a new custom config.

We gave the config a name similar to the MSK cluster itself, based on our scenario of the fictional clothes retailer, Loosehanger.

We started with a config that would make it easy for us to set up the topics.

```properties
auto.create.topics.enable=true
default.replication.factor=3
min.insync.replicas=2
num.io.threads=8
num.network.threads=5
num.partitions=1
num.replica.fetchers=2
replica.lag.time.max.ms=30000
socket.receive.buffer.bytes=102400
socket.request.max.bytes=104857600
socket.send.buffer.bytes=102400
unclean.leader.election.enable=true
zookeeper.session.timeout.ms=18000
allow.everyone.if.no.acl.found=true
```

The key setting we added to the default config was allow.everyone.if.no.acl.found=true. With this, Kafka allows any client to access a resource that has no ACLs, which let us start creating topics before setting up authentication or access control lists.

We clicked Create to create this configuration. Once back on the MSK cluster settings screen, we chose this custom config and clicked Next to move on to the next step.

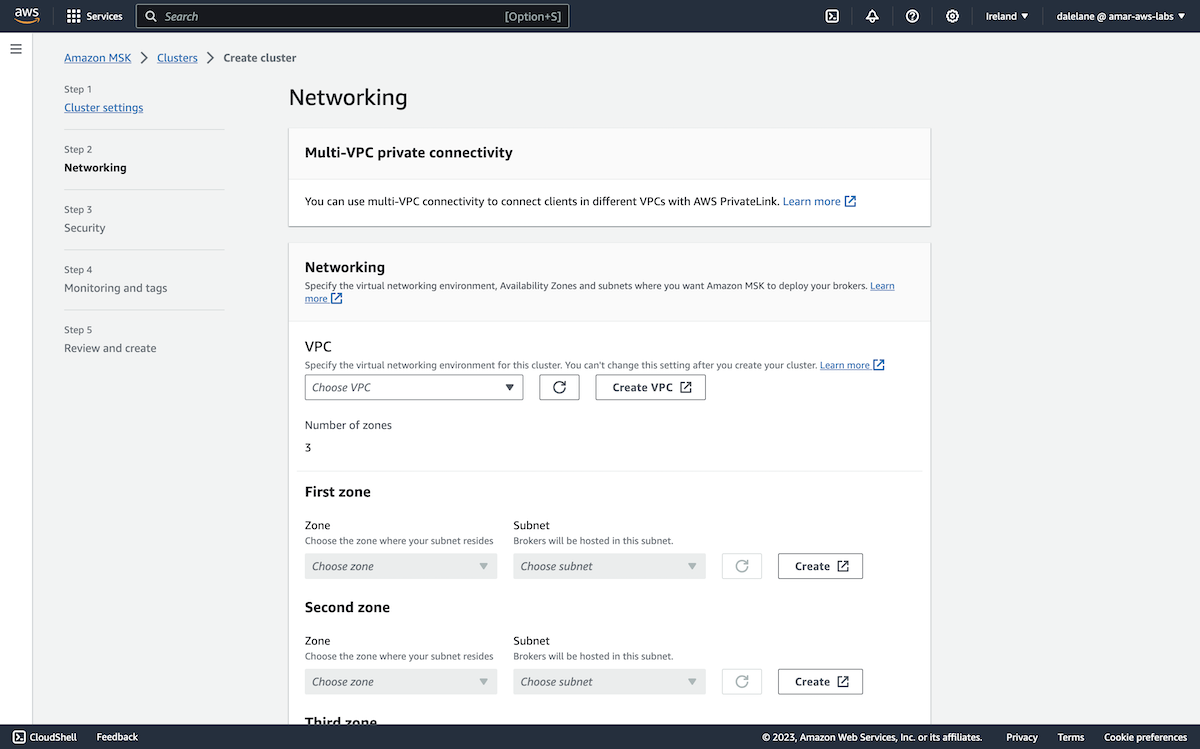

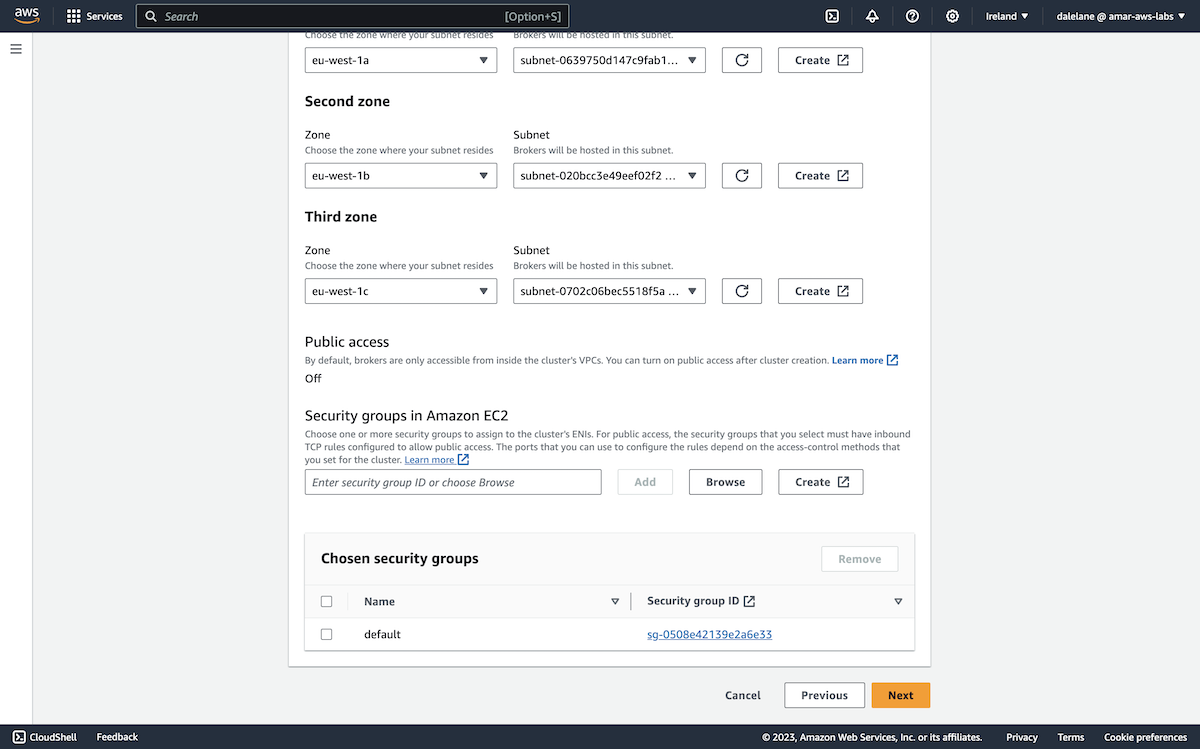

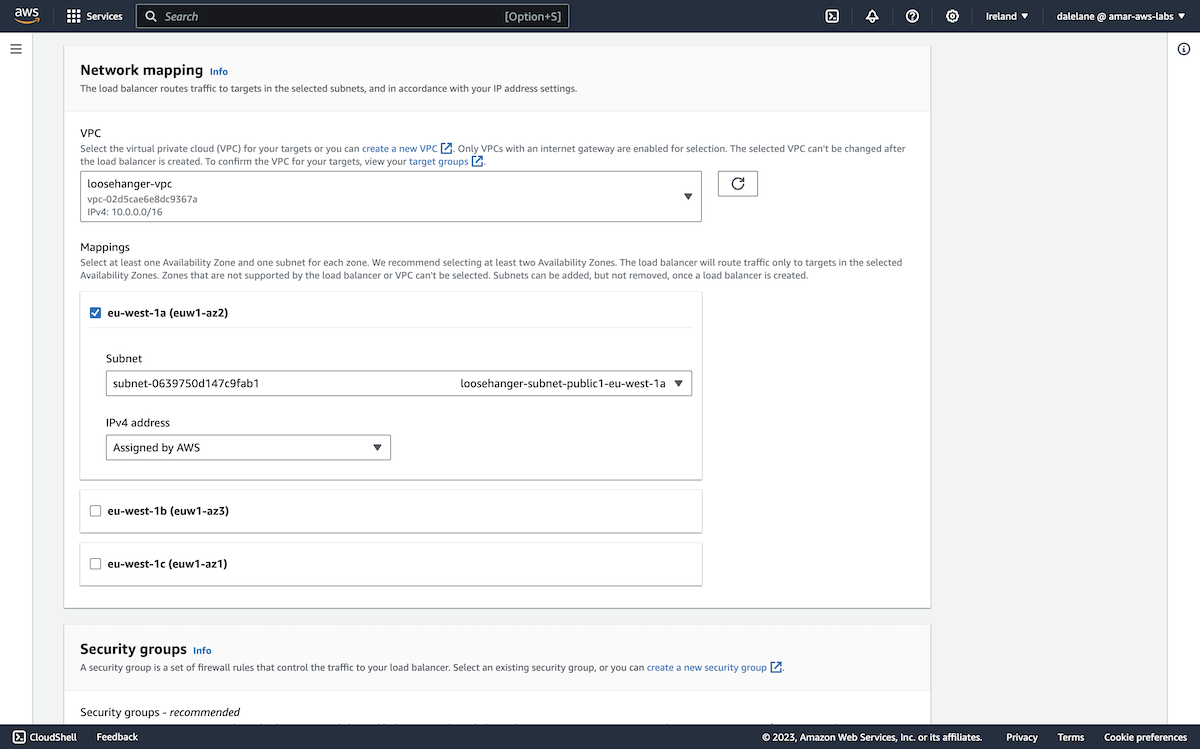

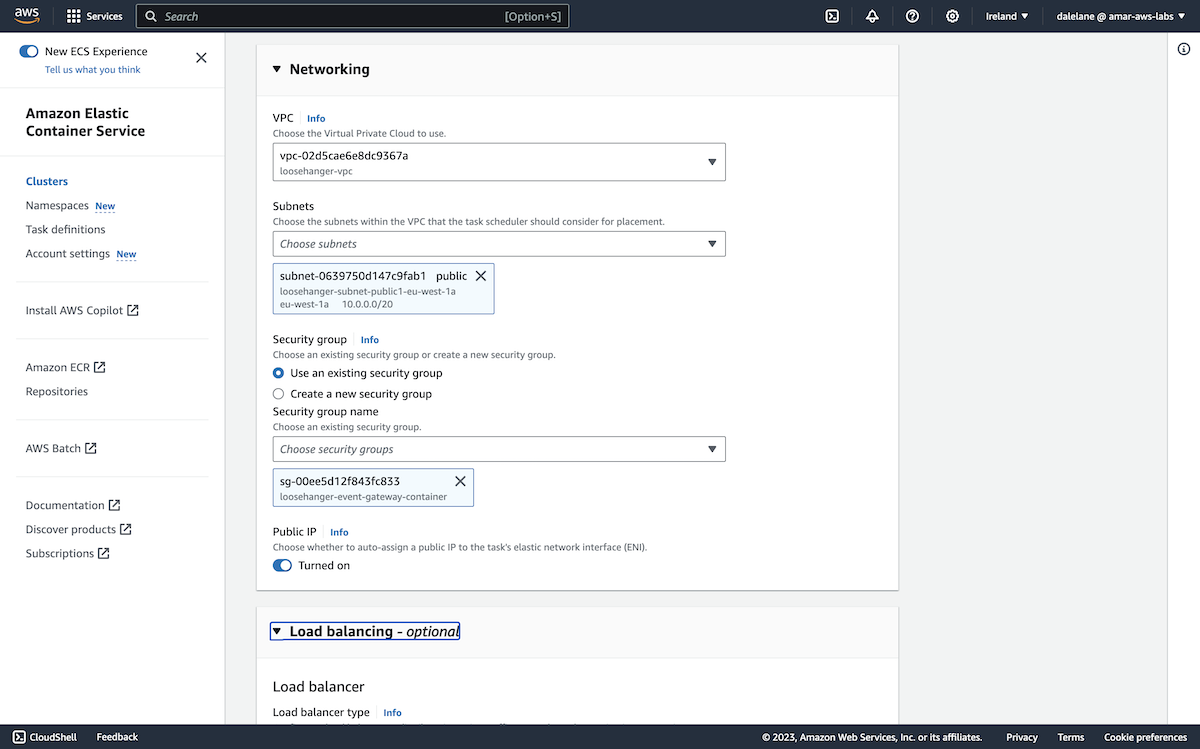

Networking was next.

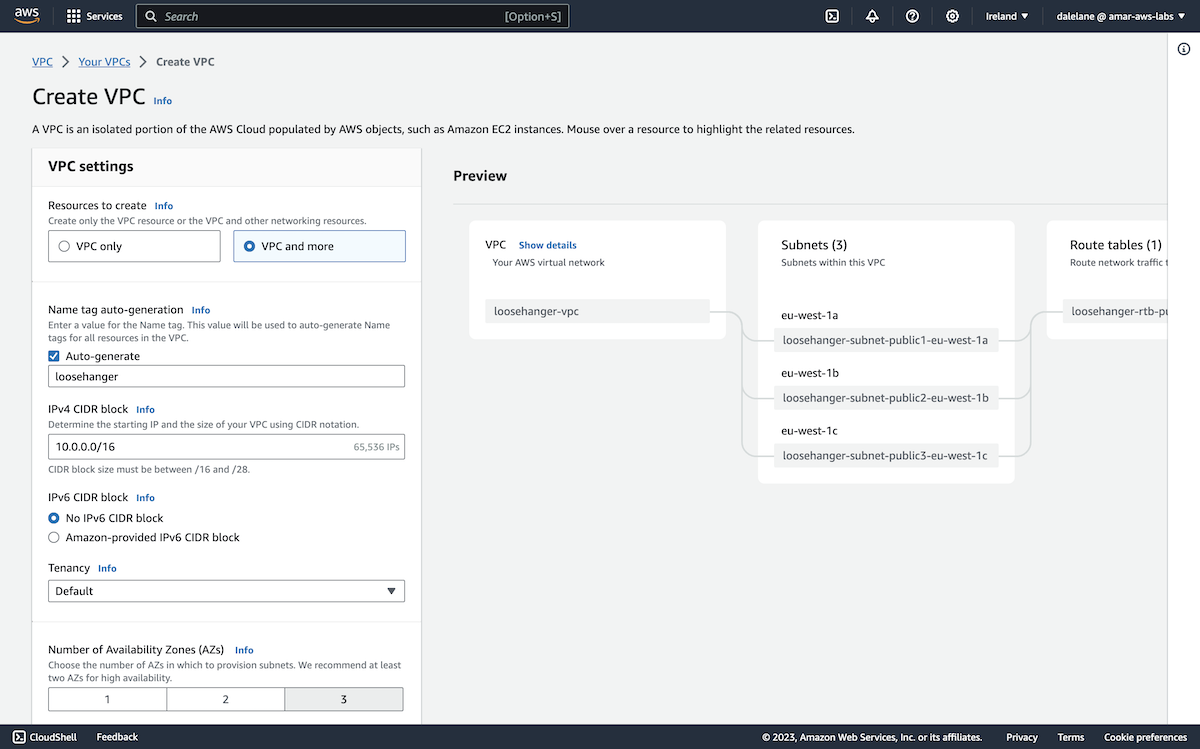

We clicked Create VPC to prepare a new virtual networking environment for our demonstration.

We opted for the VPC and more option so we could set this up in a way that would support public access to the MSK cluster.

We chose three availability zones to match the config we used for the MSK cluster - this would allow us to have a separate AZ for each MSK broker.

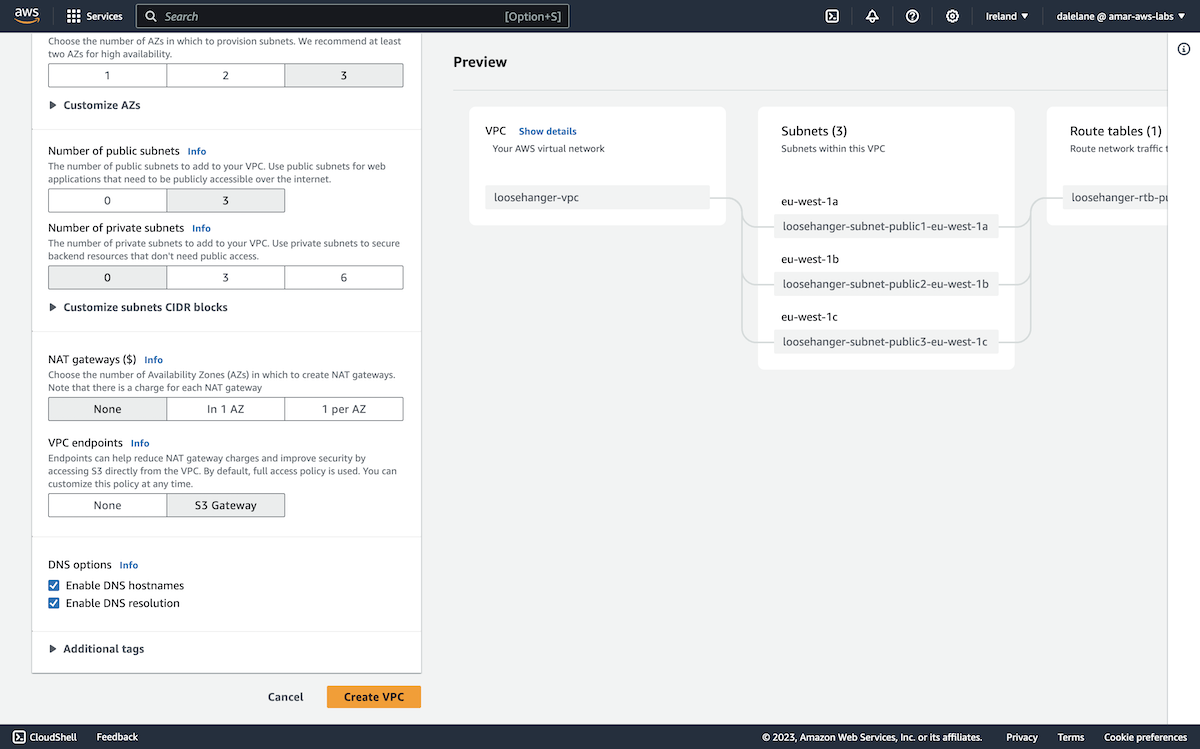

We went with three public subnets and no private subnets, again as this would allow us to enable public access to the MSK cluster.

We left the default DNS options enabled so that we could have DNS hostnames for our addresses.

Next we clicked Create VPC.

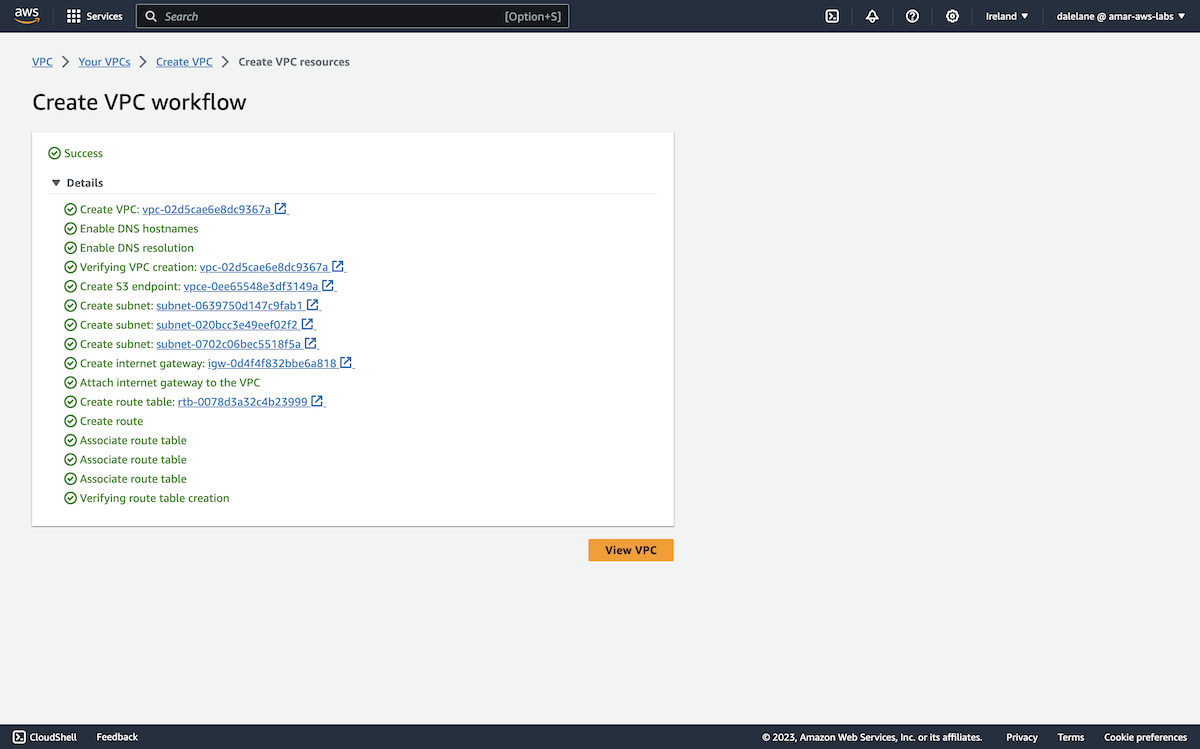

We verified that the VPC resources we requested were created and then closed this window.

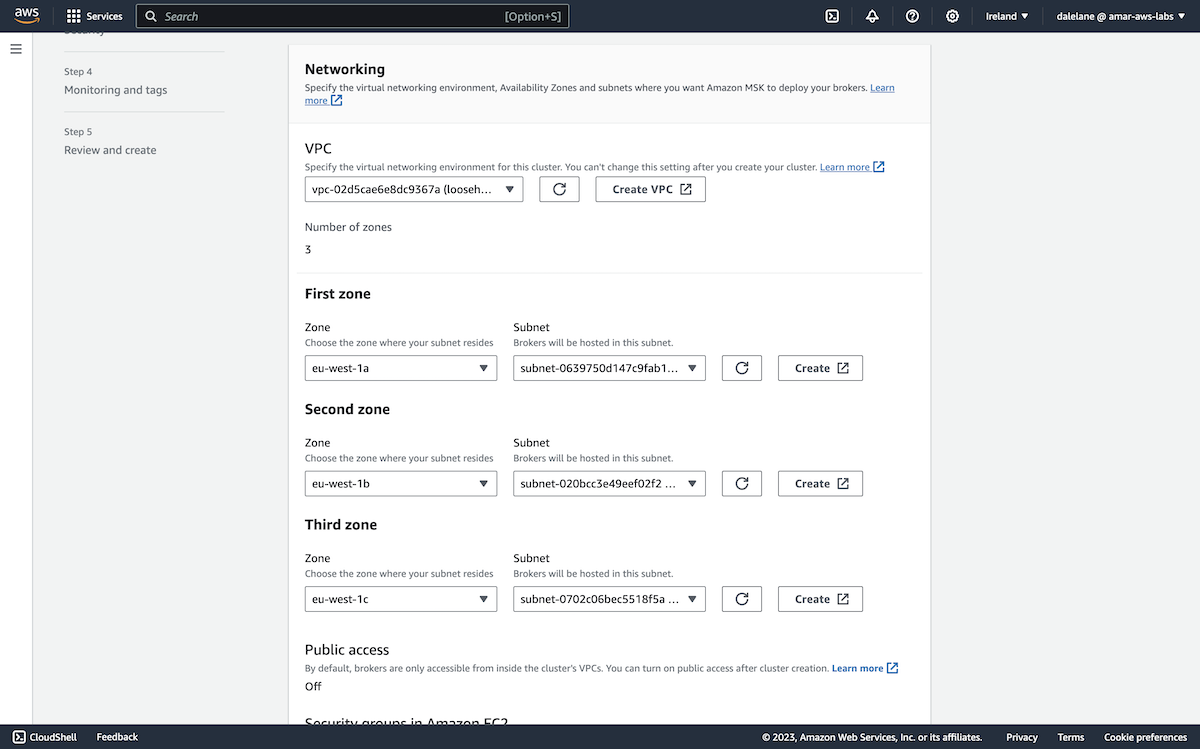

Back on the Networking step of the MSK cluster creation wizard, we could now choose our new VPC, and select the zones and subnets. Because both the MSK cluster and the VPC used three availability zones, it was just a matter of choosing a different zone for each broker.

We wanted to enable public access, but this can't be done at cluster creation time, so we left it Off for now.

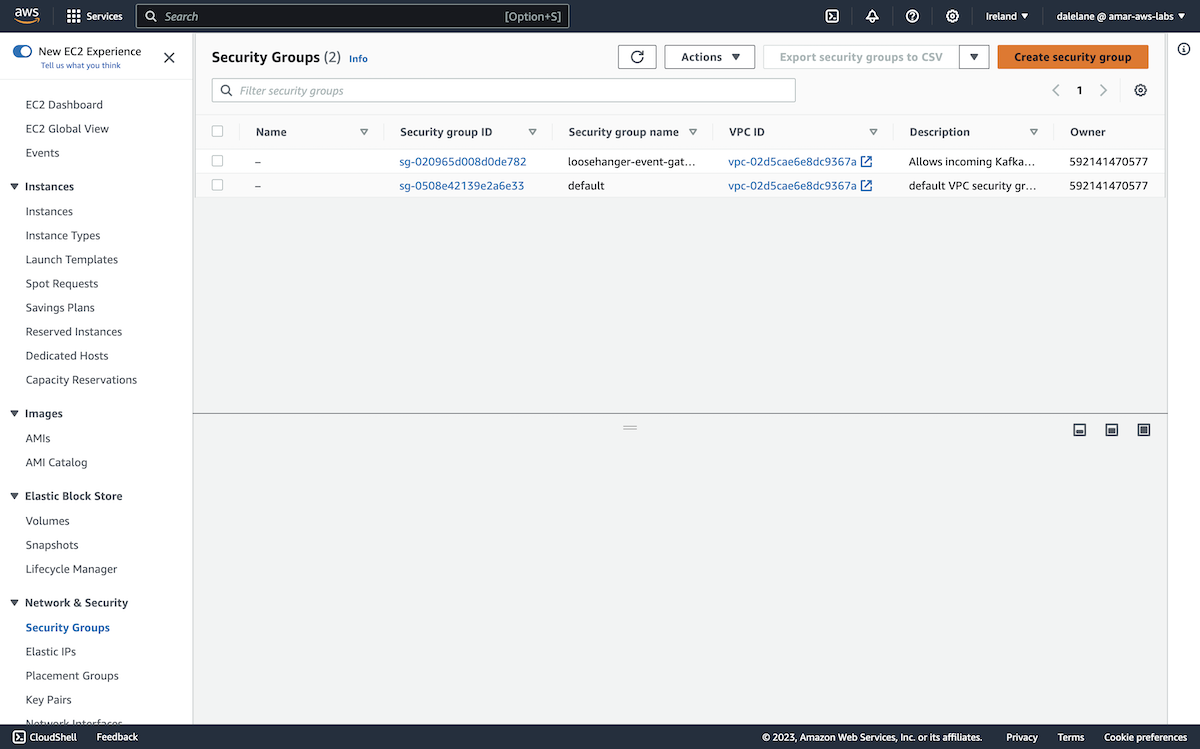

The final option in the networking step is the choice of security groups. The default option here was fine for our purposes, so we left it as-is.

We clicked Next to move onto the next step.

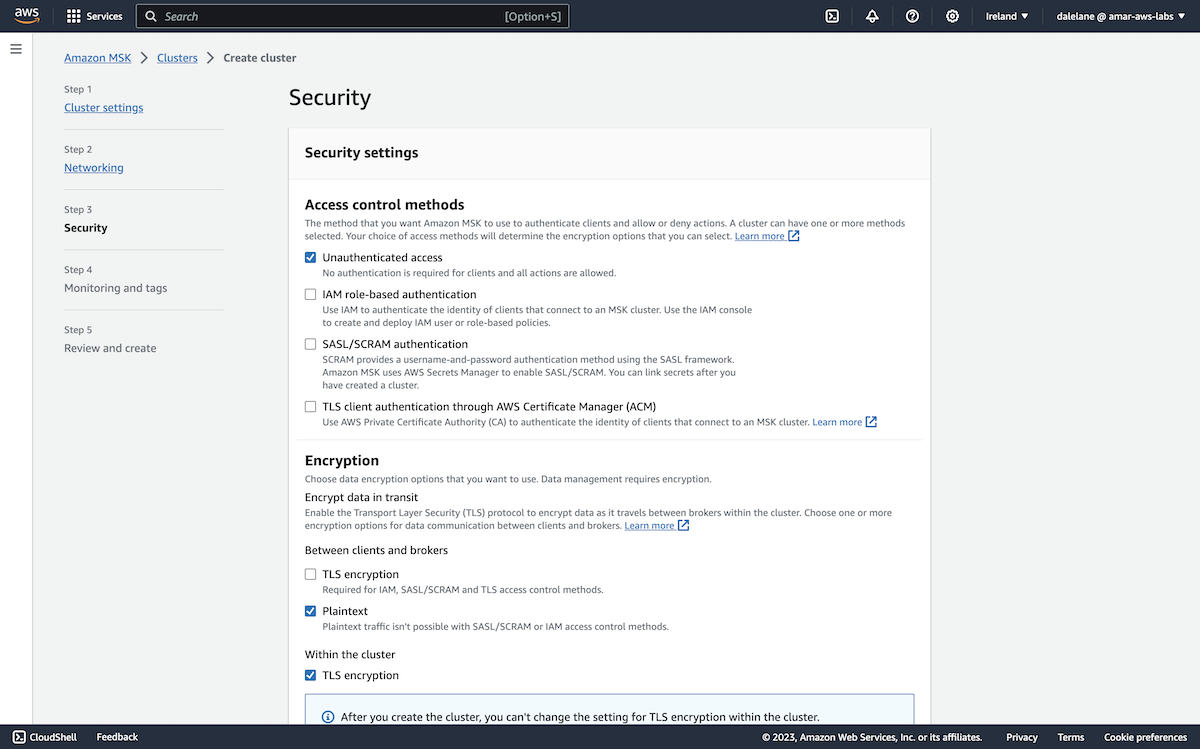

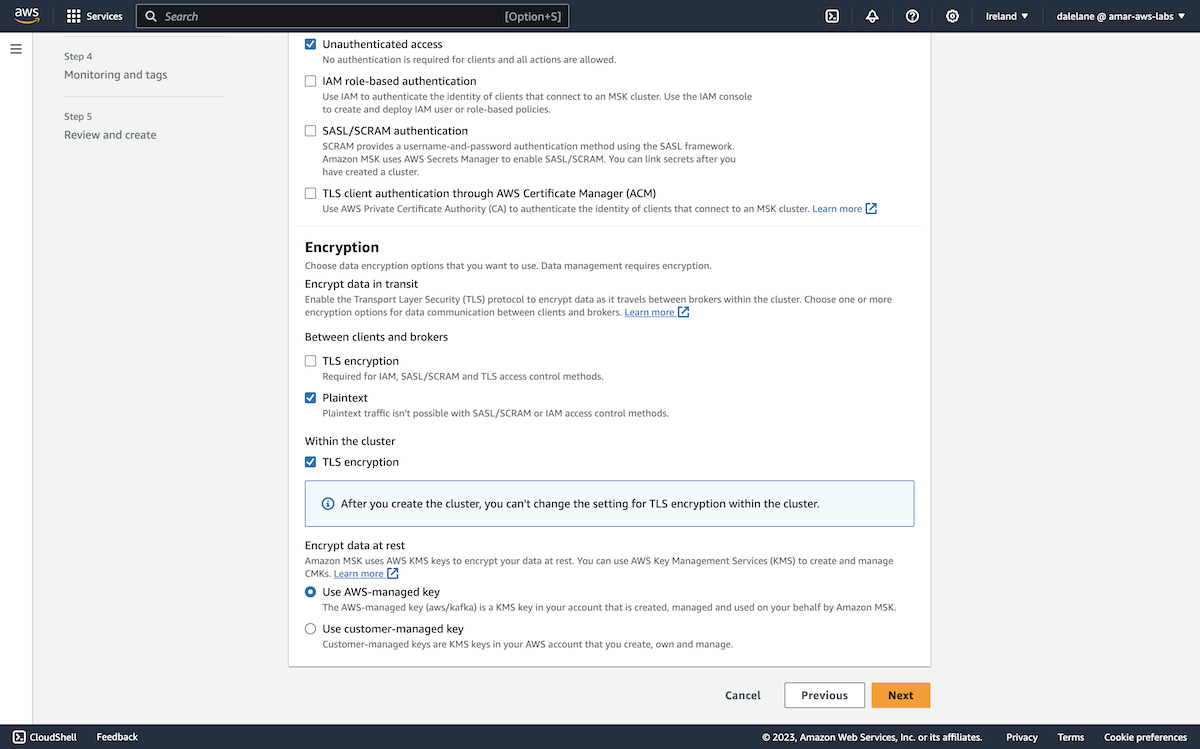

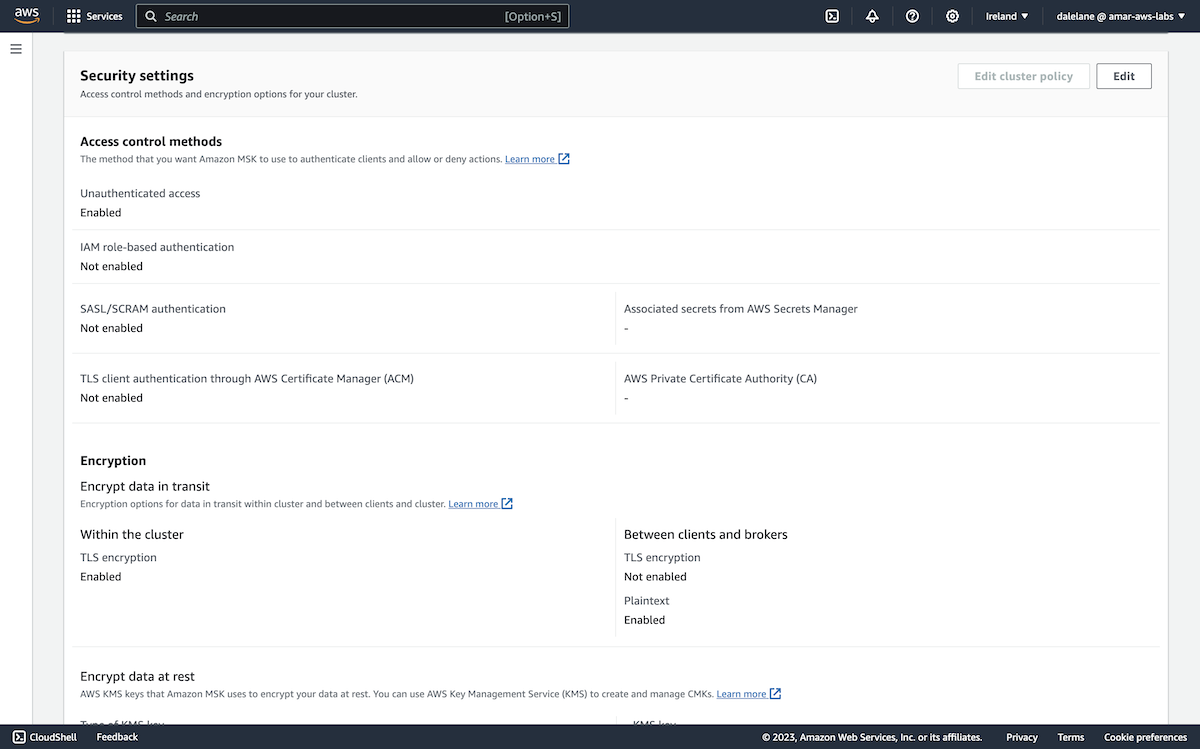

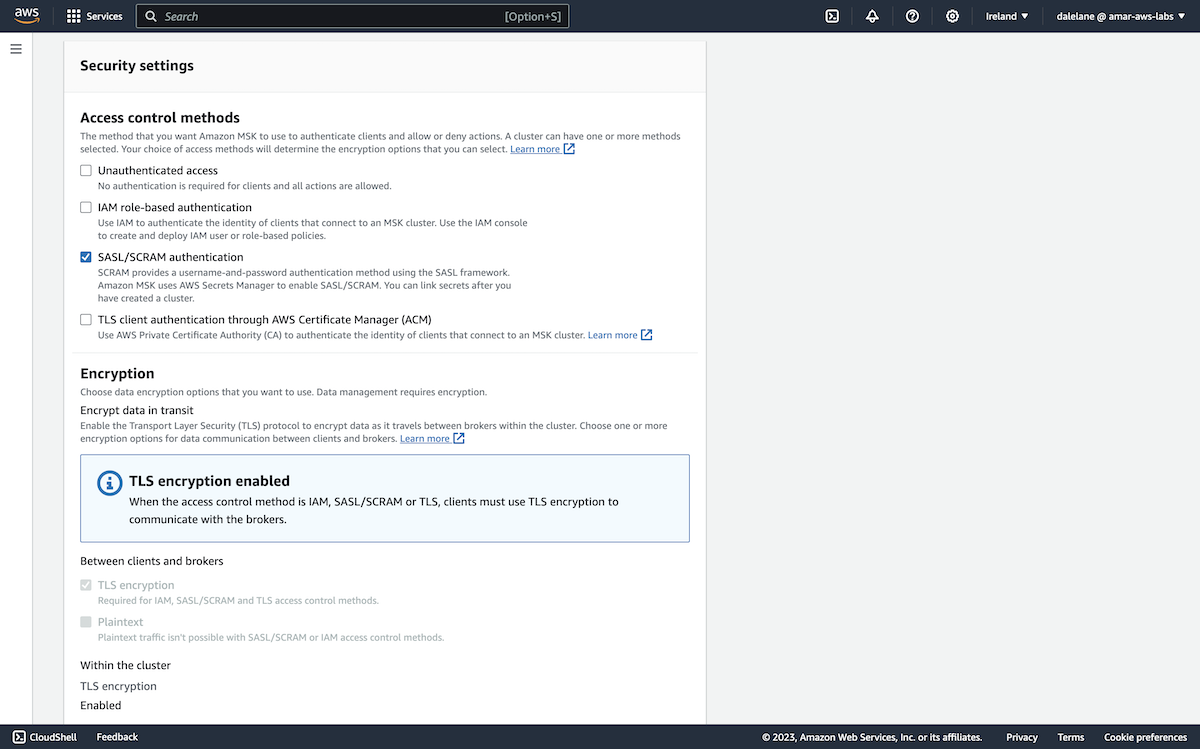

The next step was to configure the security options for the MSK cluster.

We started with authentication disabled, as unauthenticated access would make it easy for us to set up our cluster and topics. We enabled authentication later, once we had the cluster the way we wanted it.

For the same reason, we left client TLS disabled too. We turned it on later, when we enabled public access to the cluster.

The default encryption key was fine for encrypting the broker storage, so we left this as-is and clicked Next.

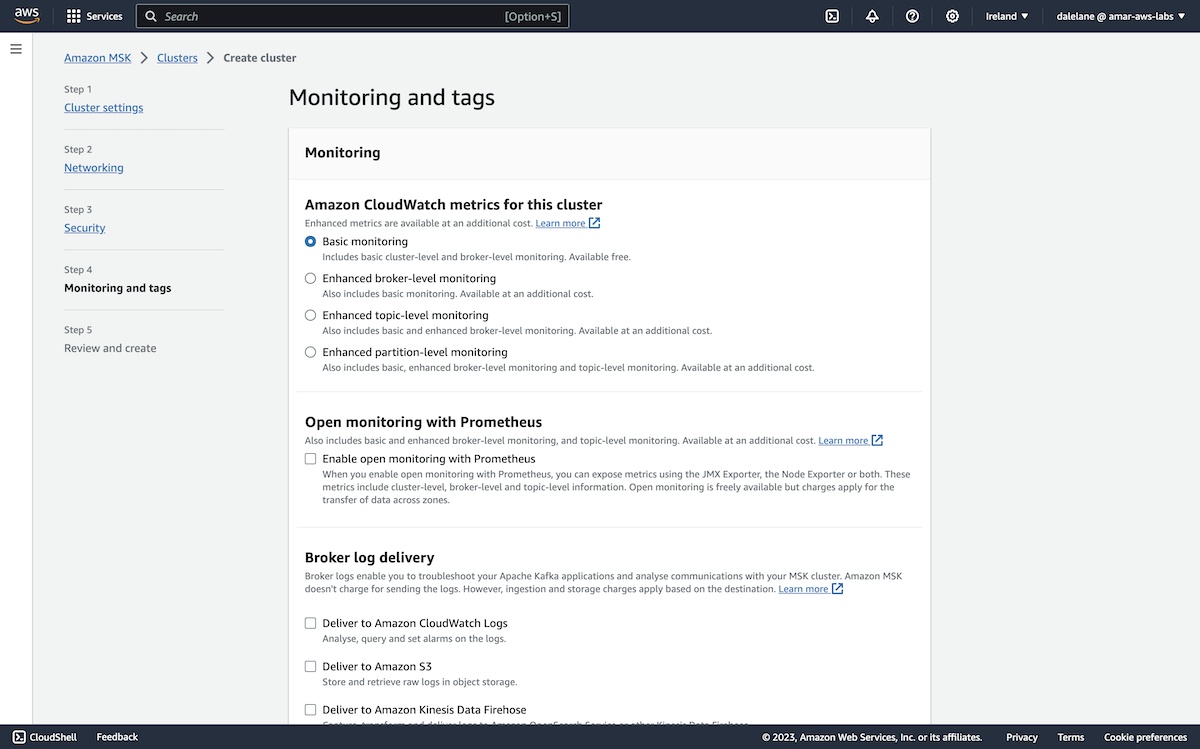

The next step was to configure monitoring. As this was a short-lived demo cluster, we had no monitoring requirements, so we left this on the default basic option and clicked Next.

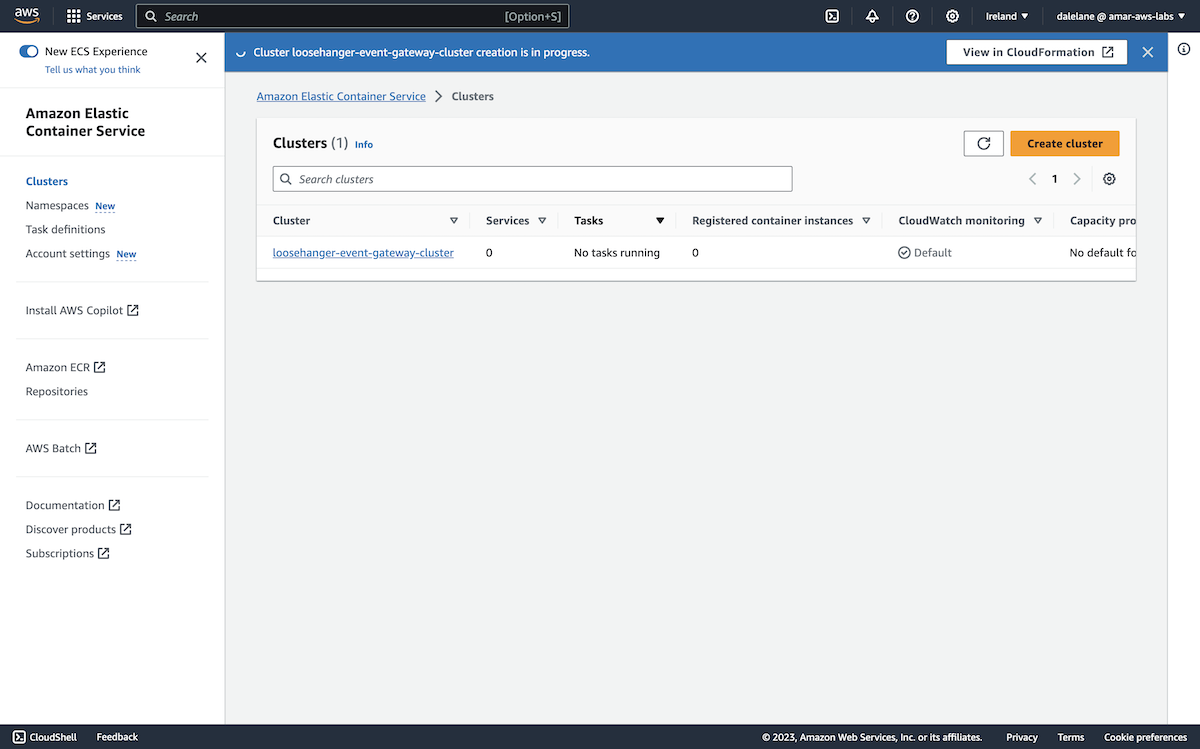

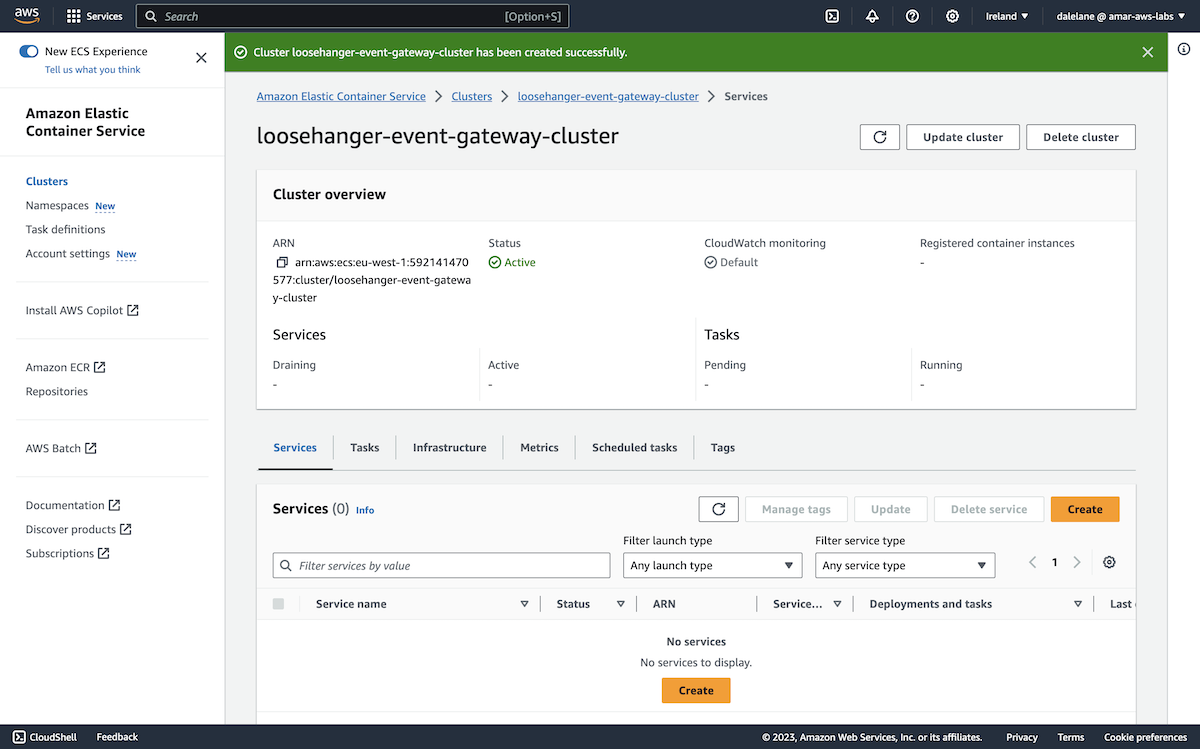

Our MSK cluster specification was ready to go, so we clicked Create.

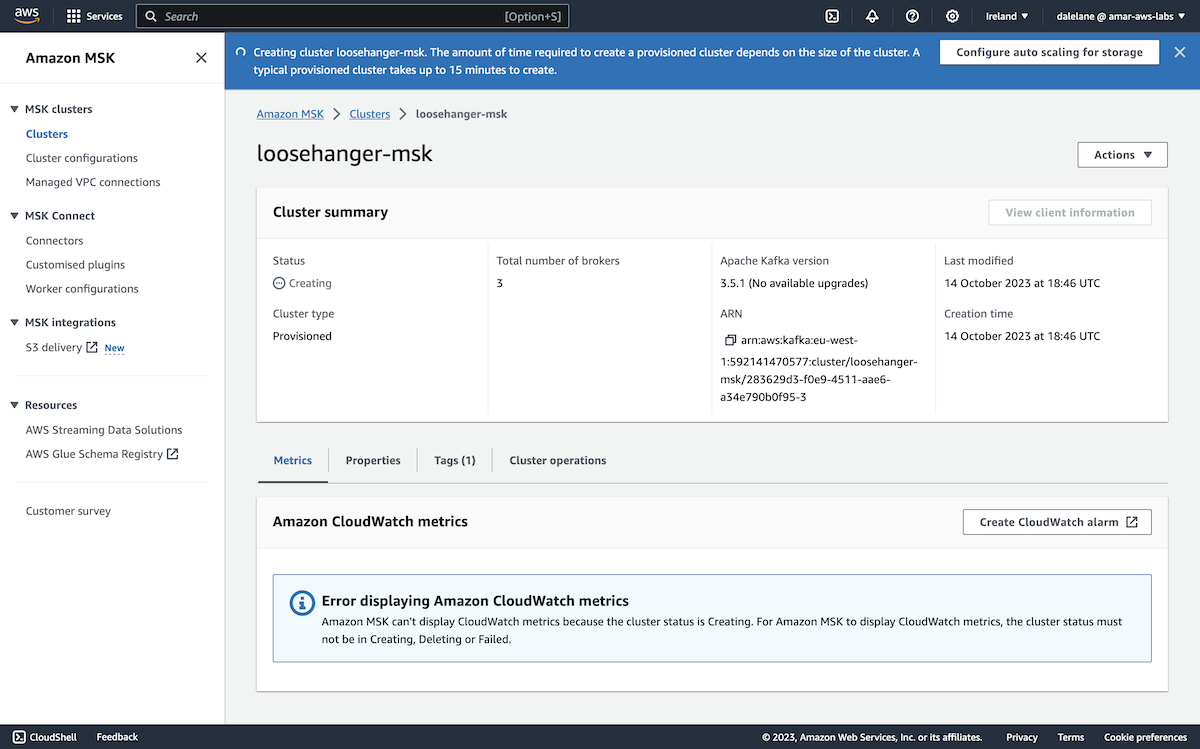

We had to wait for the MSK cluster to provision.

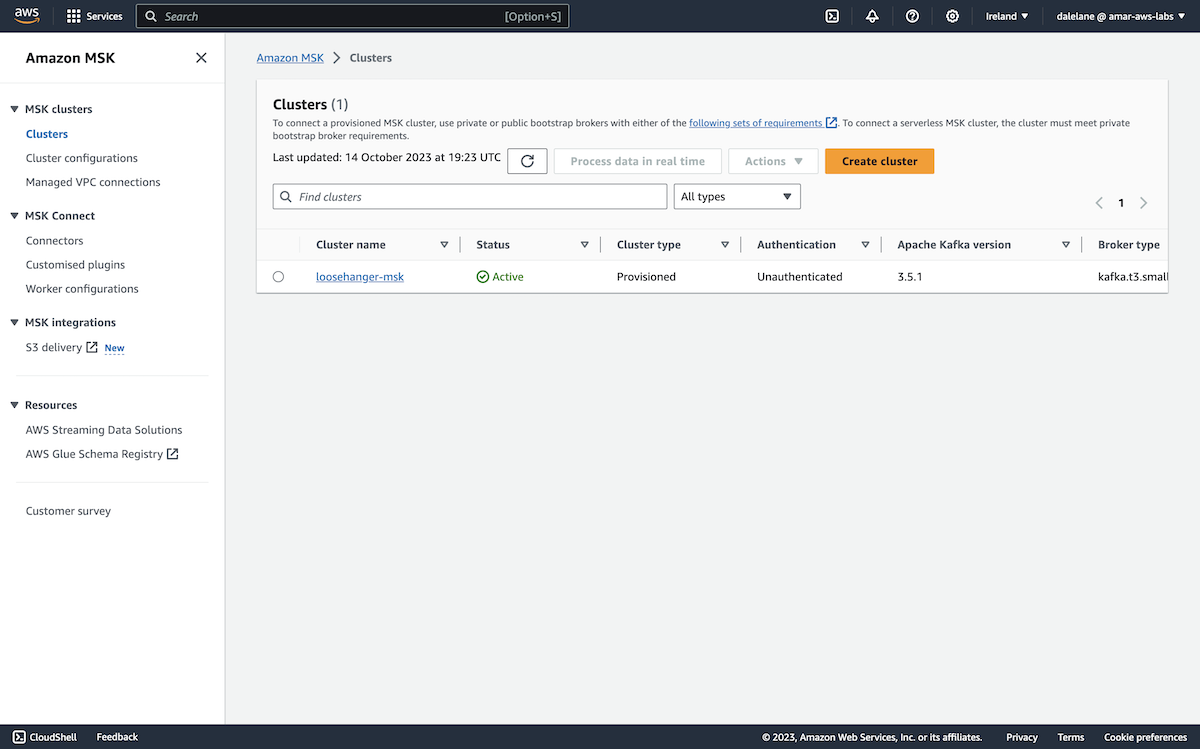

Once it was ready, we could move on to the next stage which was to create topics.

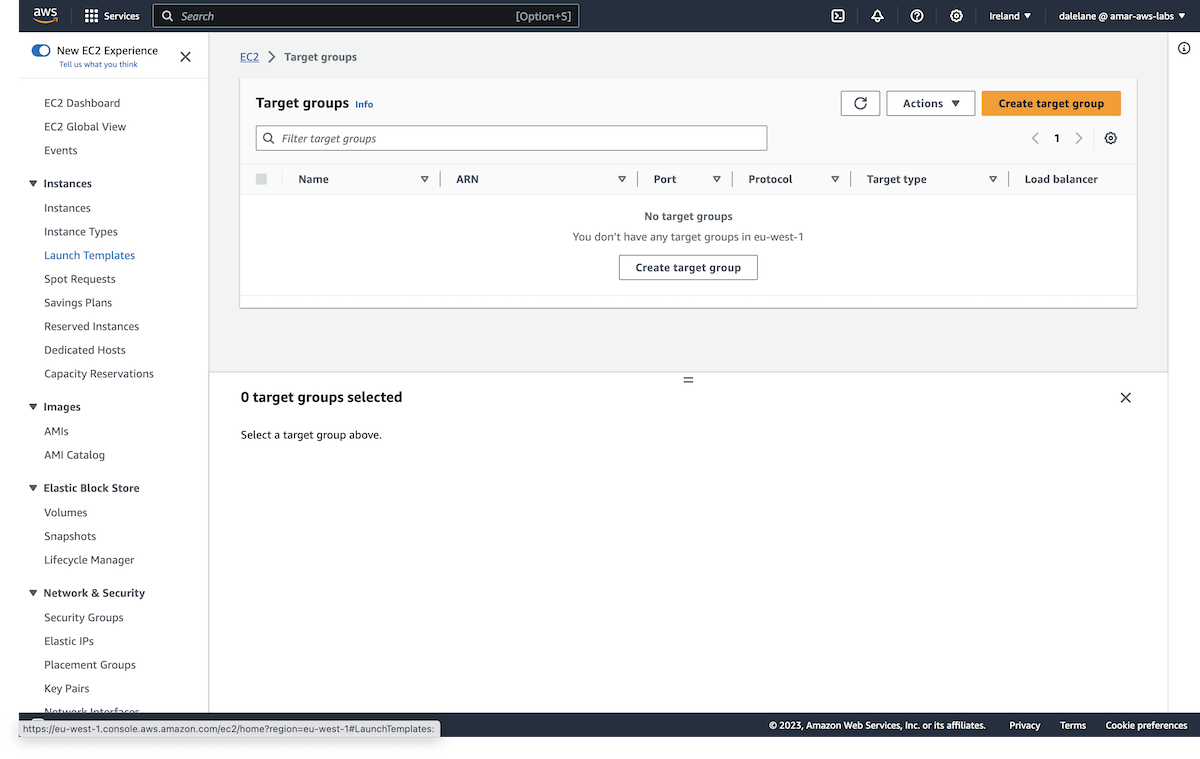

Next, we created some Kafka topics that we would use with Event Automation, and set up some credentials for accessing them.

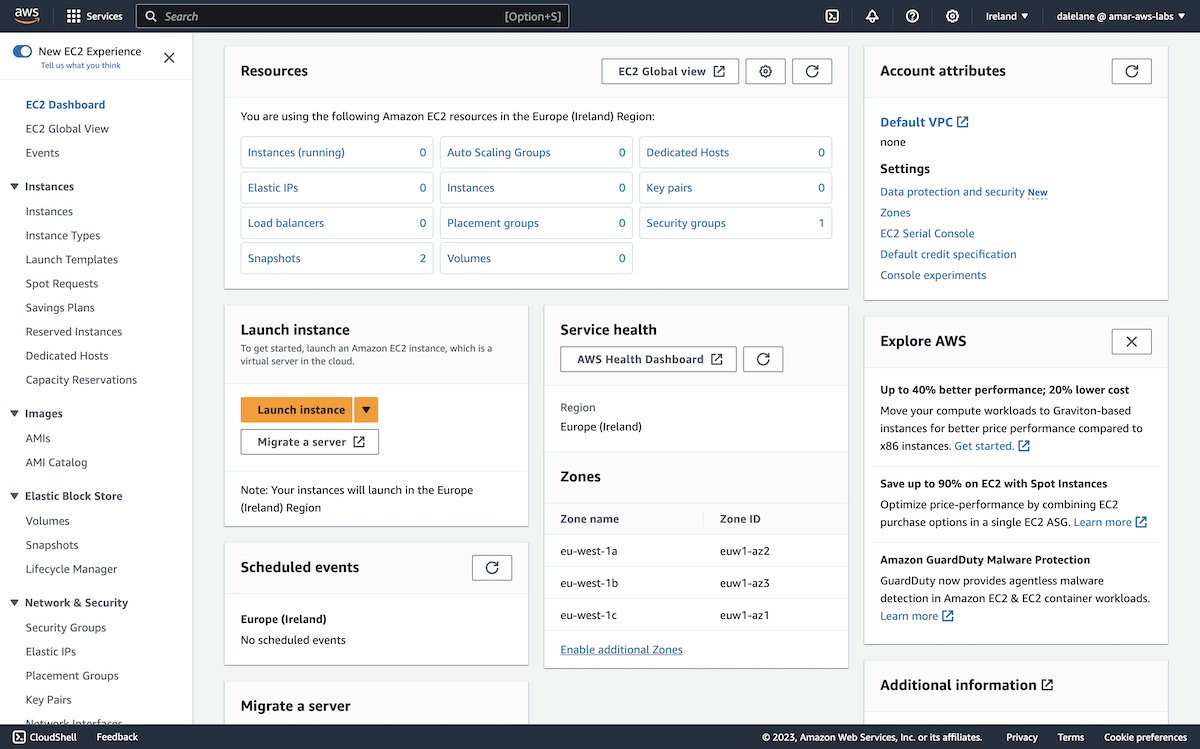

The Amazon MSK console doesn't offer admin controls for the Kafka cluster itself (such as creating topics or ACLs), so we needed somewhere that we could run a Kafka admin client.

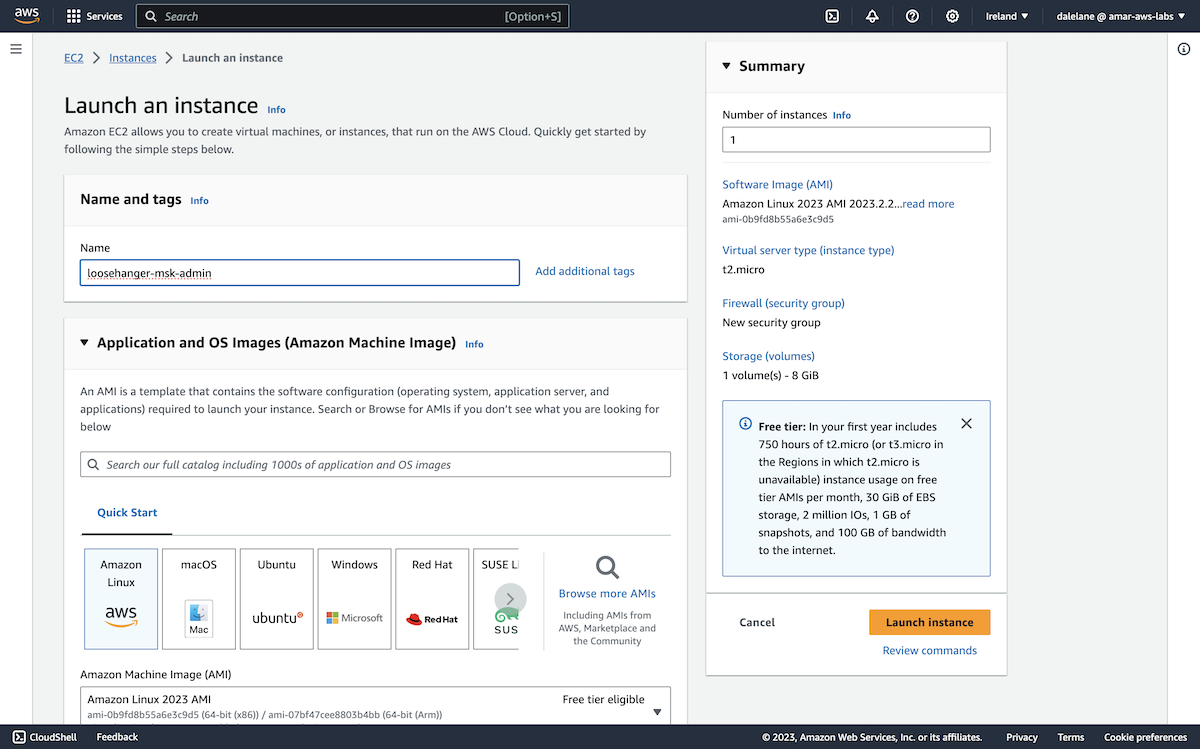

The simplest option was to create an EC2 server where we could run Kafka admin commands from. We went to the EC2 service within AWS, and clicked Launch instance.

We kept with our naming theme of "Loosehanger" to represent our fictional clothes retailer.

This would be a short-lived server, kept only long enough to run a few Kafka admin commands, so quick and simple was the priority. We went with the Amazon Linux quick start option.

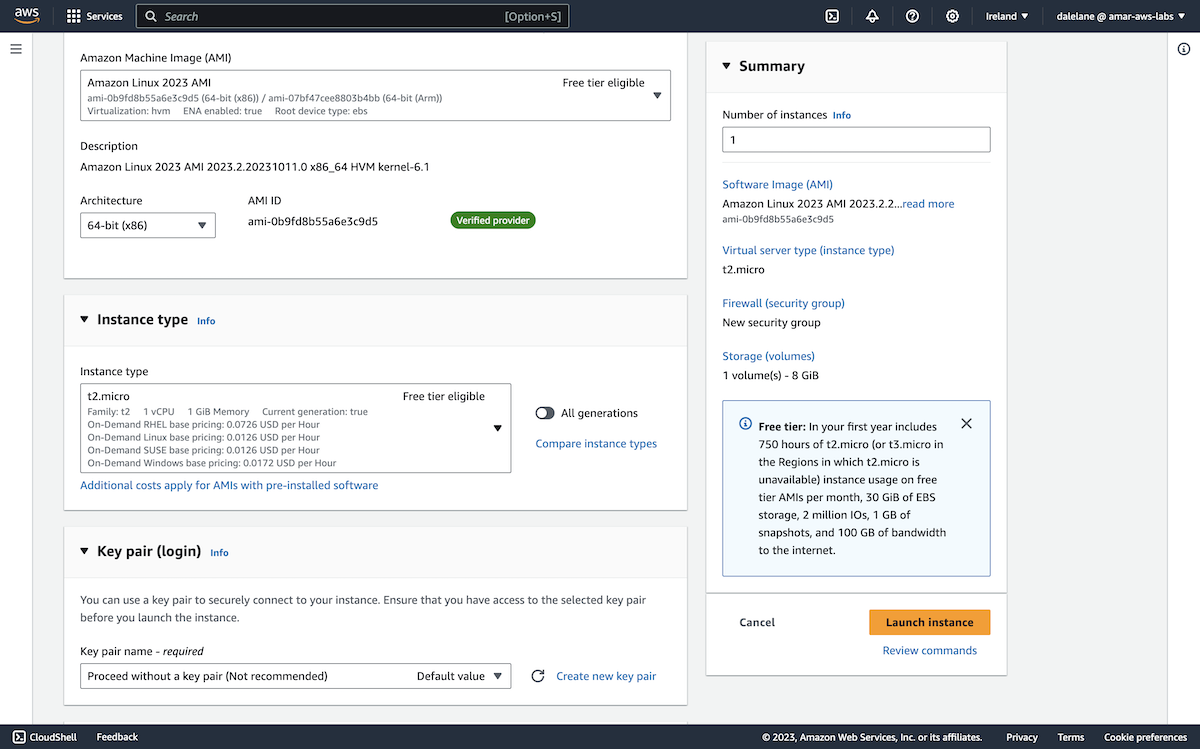

Again, as this would only be a short-lived server running a small number of command-line scripts, a small, free-tier-eligible instance type was fit for our needs.

We didn't need to create a key pair as we weren't planning to make any external connections to the EC2 server.

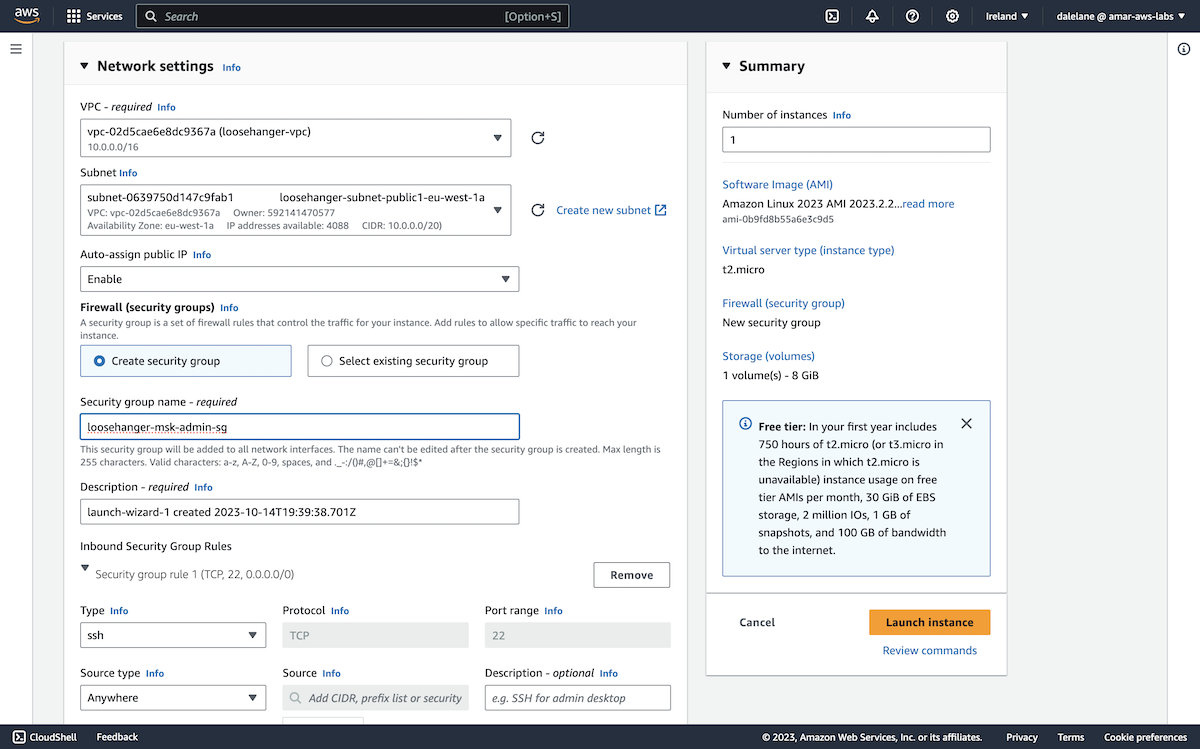

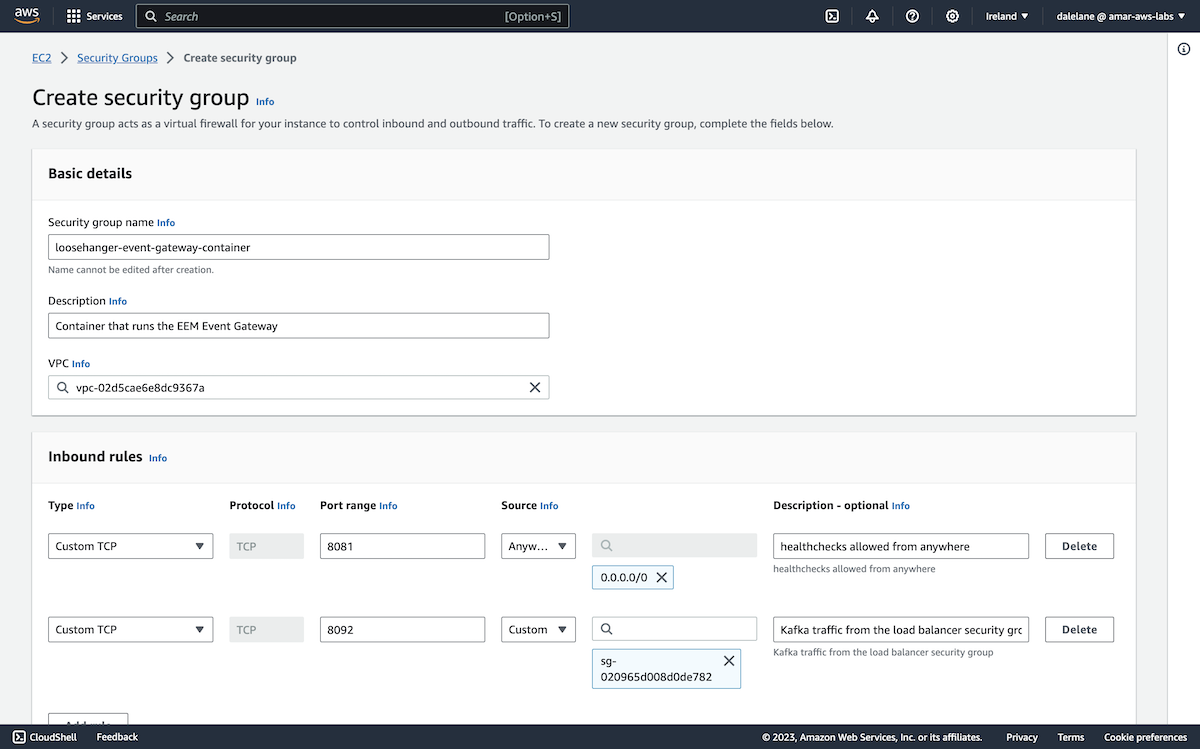

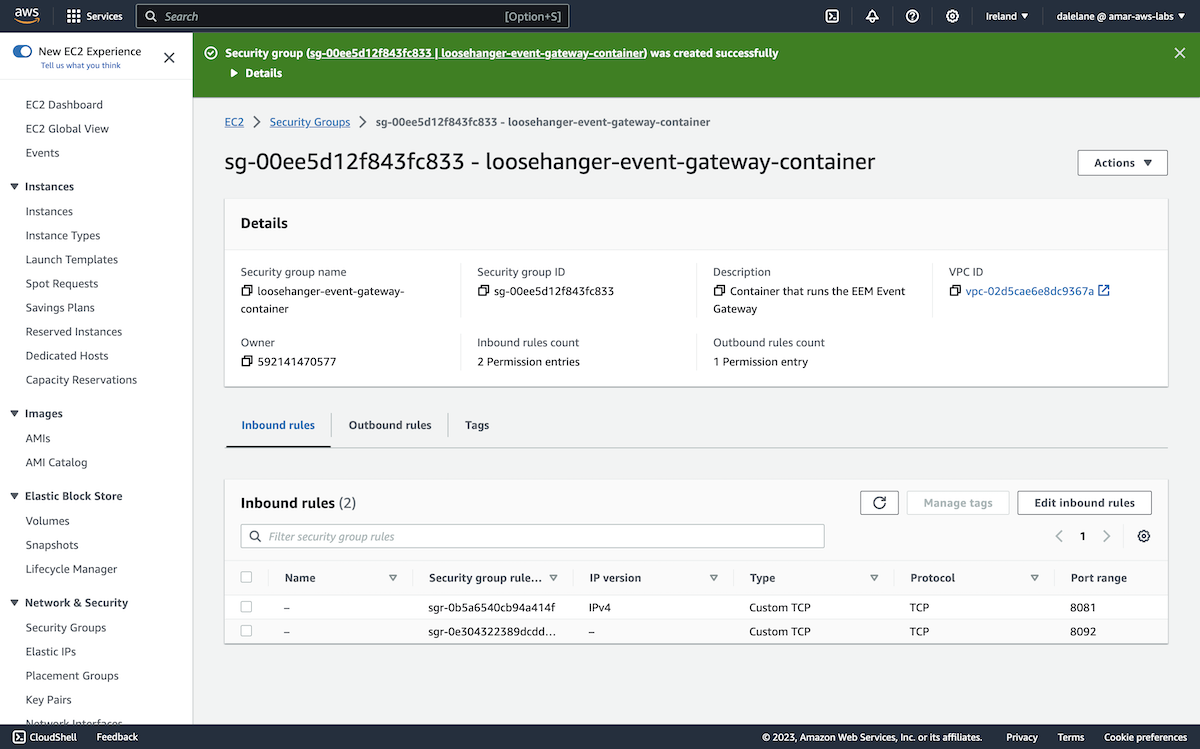

We did need to create a new security group, to enable terminal access to the server.

For the same reason, we needed to assign a public IP address to the server, as that is needed for web console access to the server's shell.

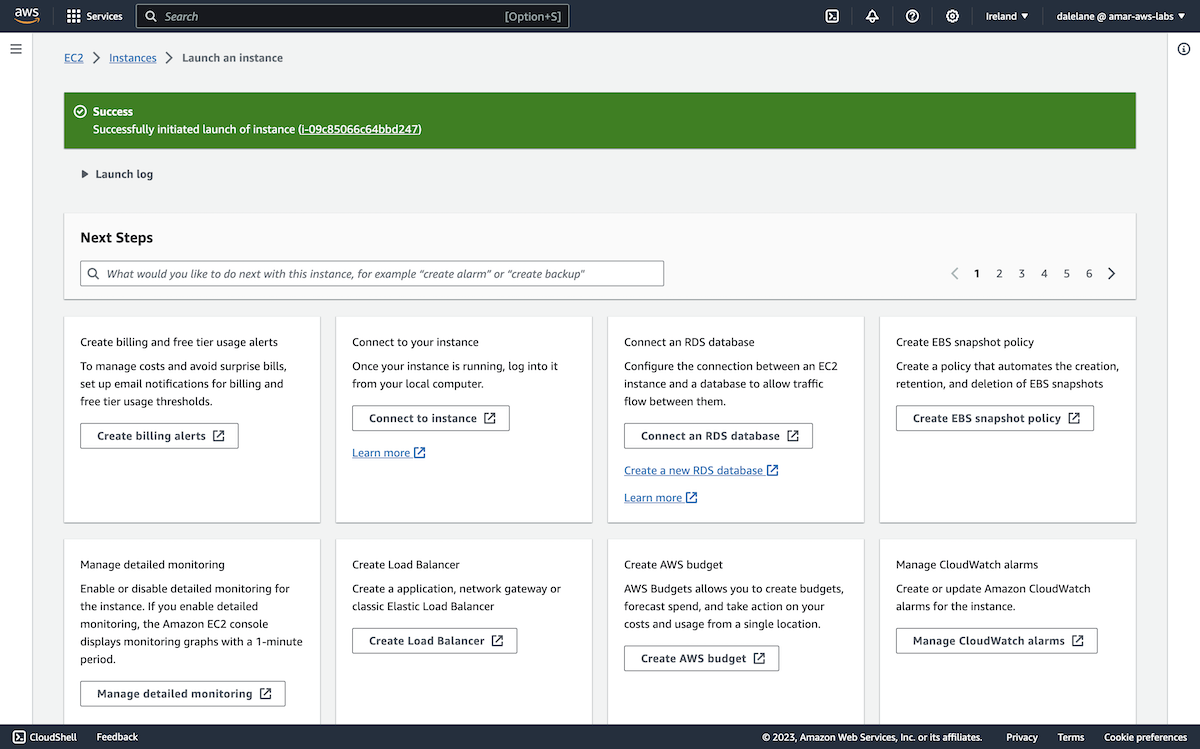

With this configured, we clicked Launch instance.

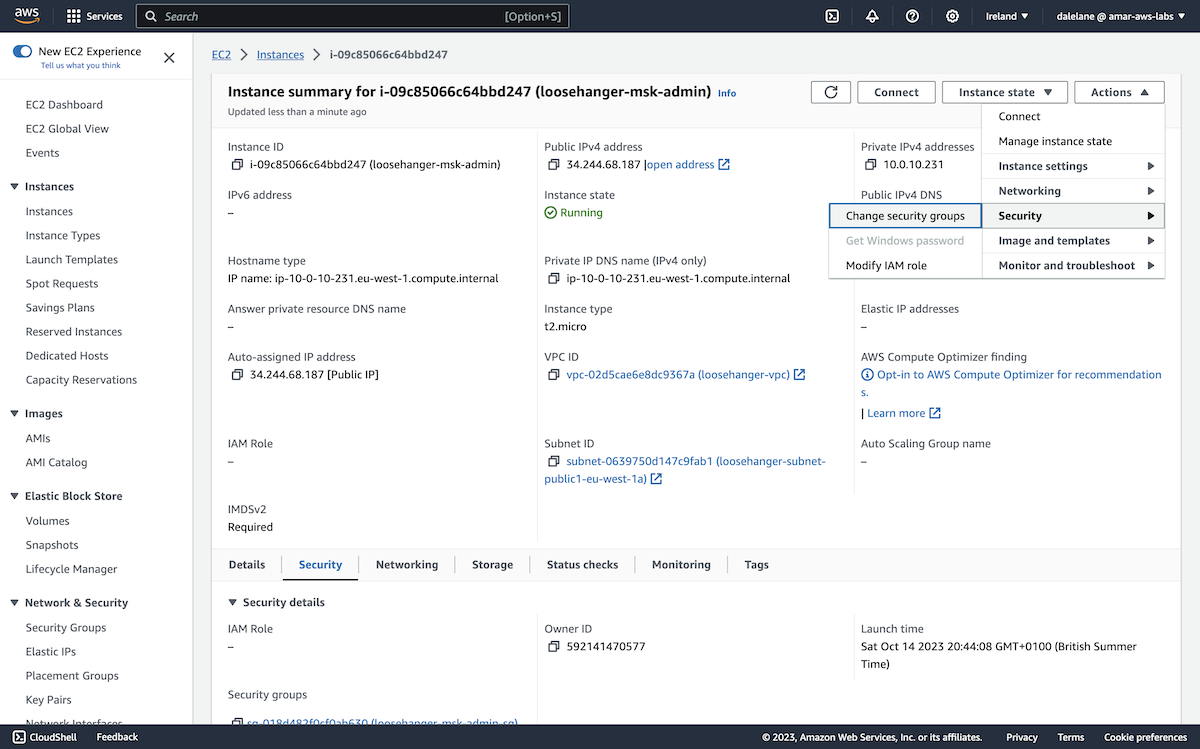

There was one more change we needed to make to the server, so we clicked the instance name at the top of the page to open the instance details.

We needed to modify the firewall rules to allow access from the EC2 instance to our MSK cluster, which meant changing the instance's security groups.

In the top-right menu, we navigated to Change security groups.

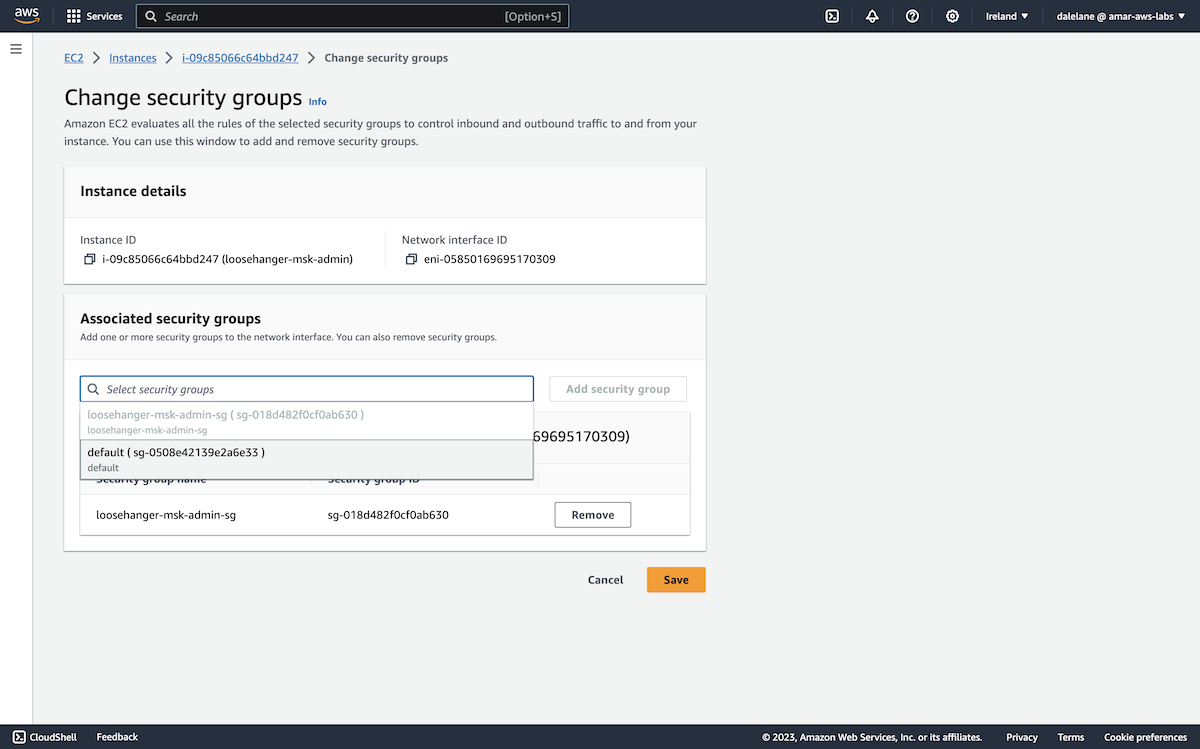

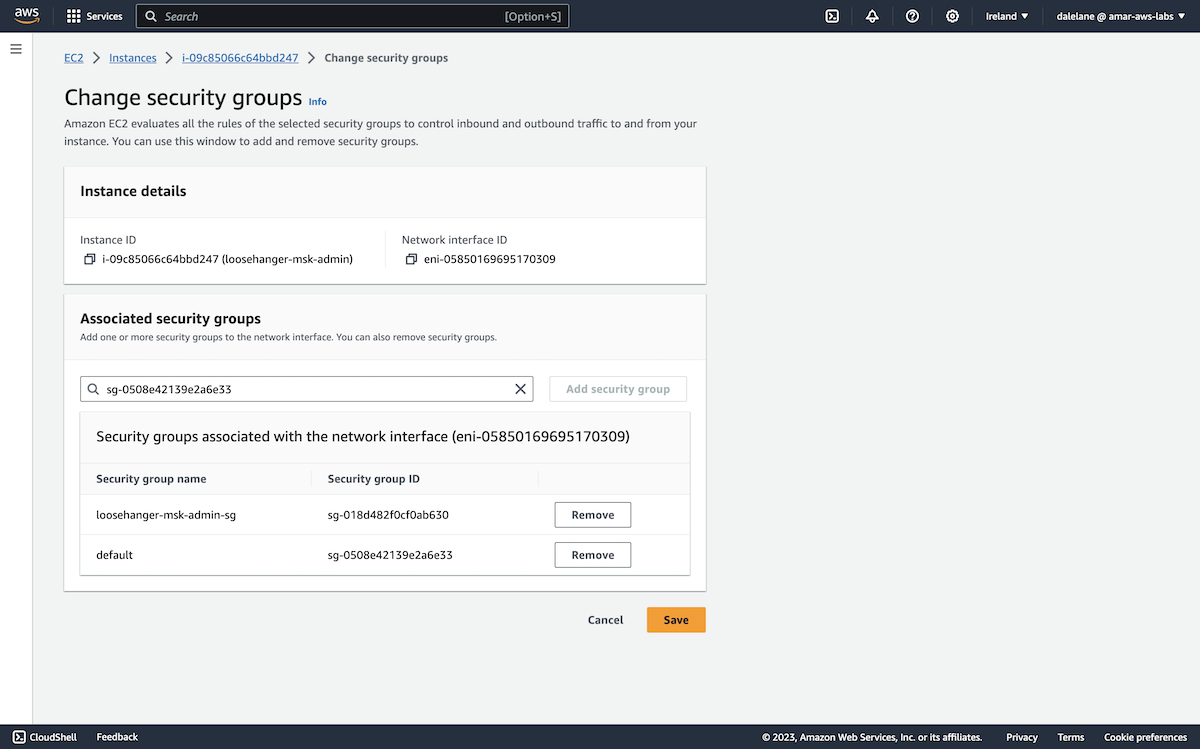

We chose the security group that was created for the MSK cluster.

Having both security groups gave our EC2 instance the permissions needed to let us connect to it from a web console, as well as the permissions needed for it to connect to Kafka.

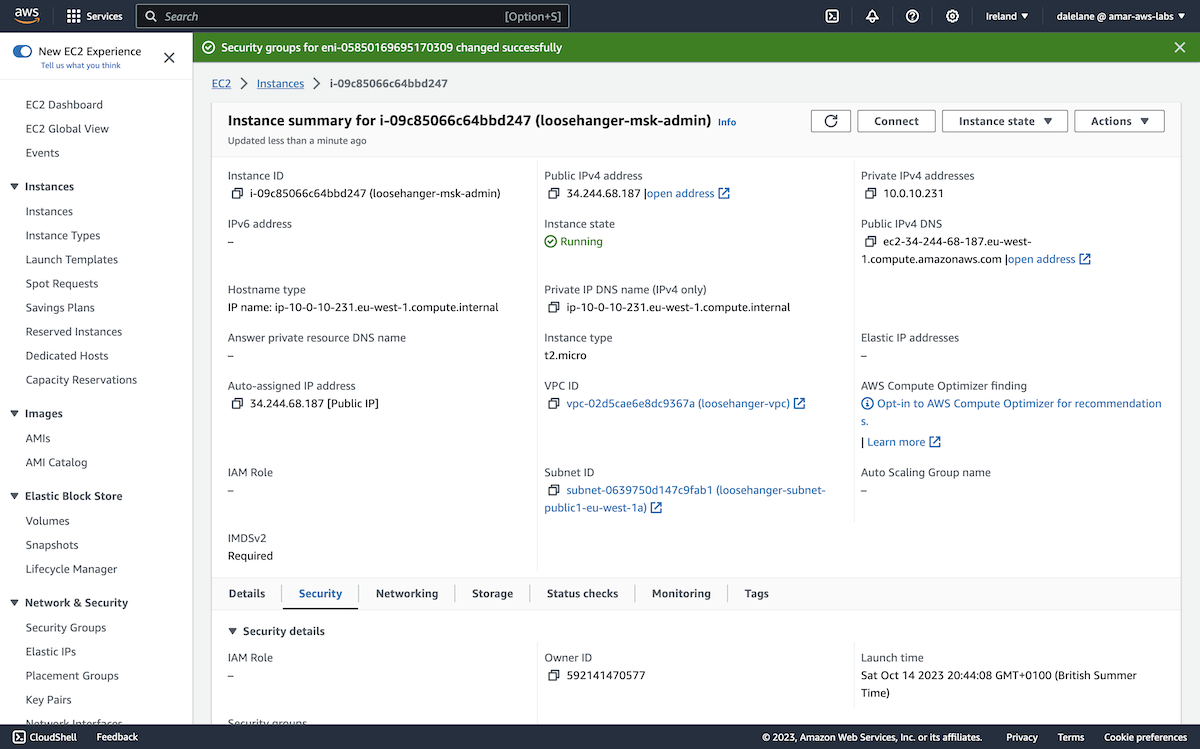

Next, we clicked Save.

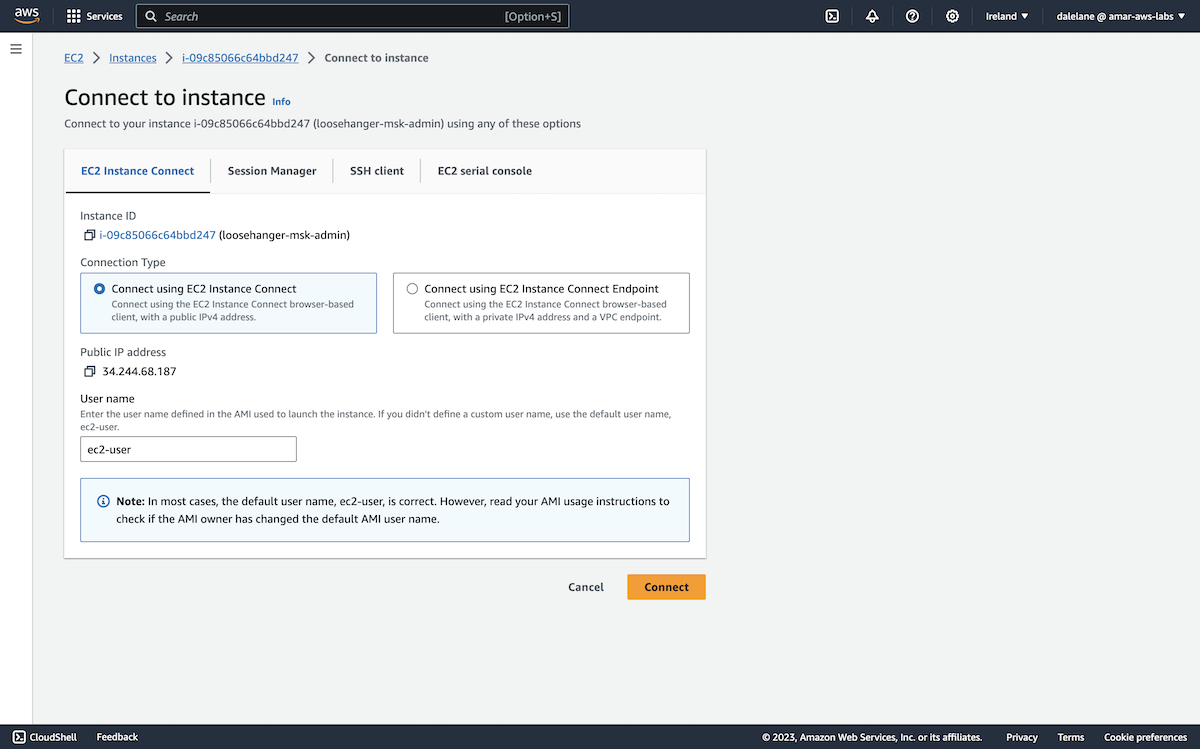

We were now ready to access the instance, so we clicked Connect.

We went with EC2 Instance Connect as it offers a web-based terminal console with nothing to install.

To open the console, we clicked Connect.

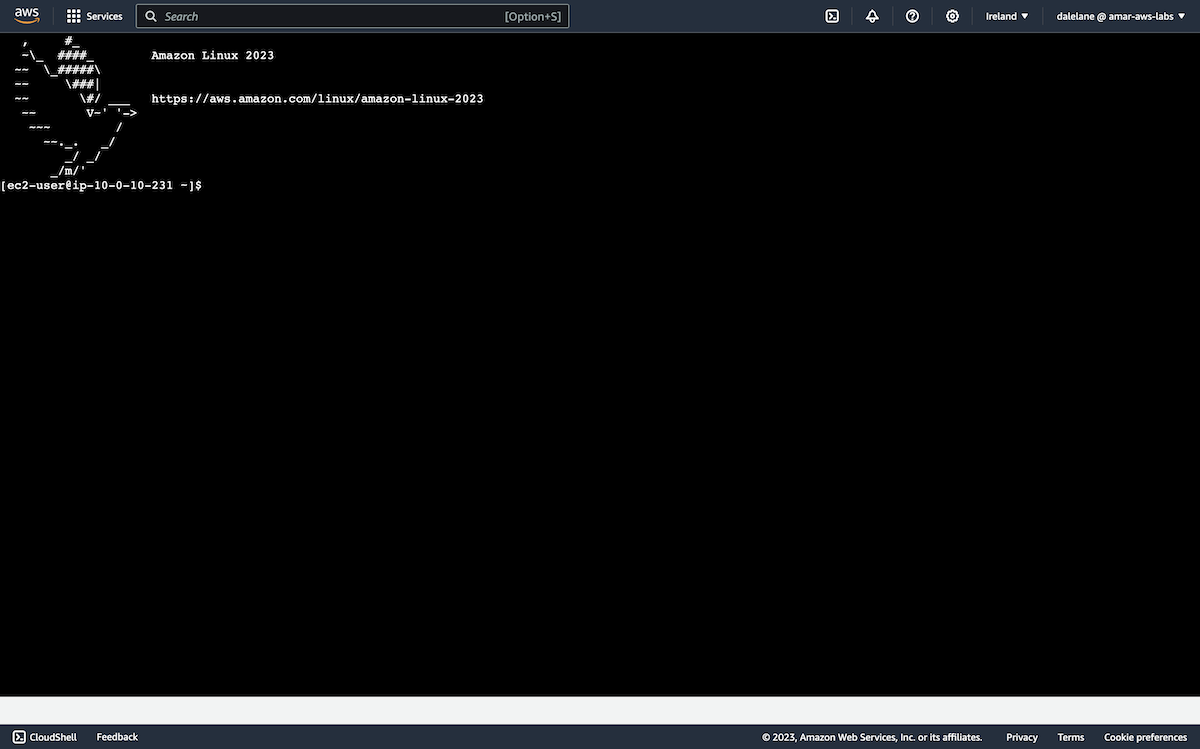

This gave us a shell in our Amazon Linux server.

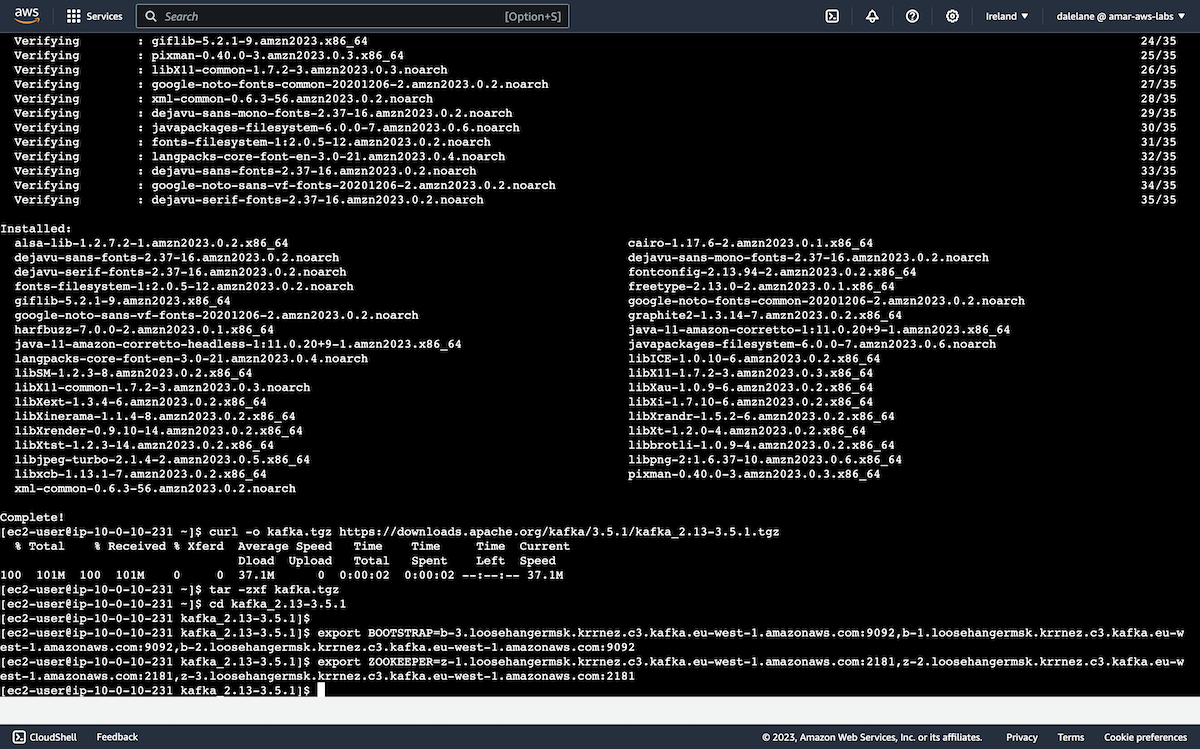

We started by installing Java, as this is required for running the Kafka admin tools.

```shell
sudo yum -y install java-11
```

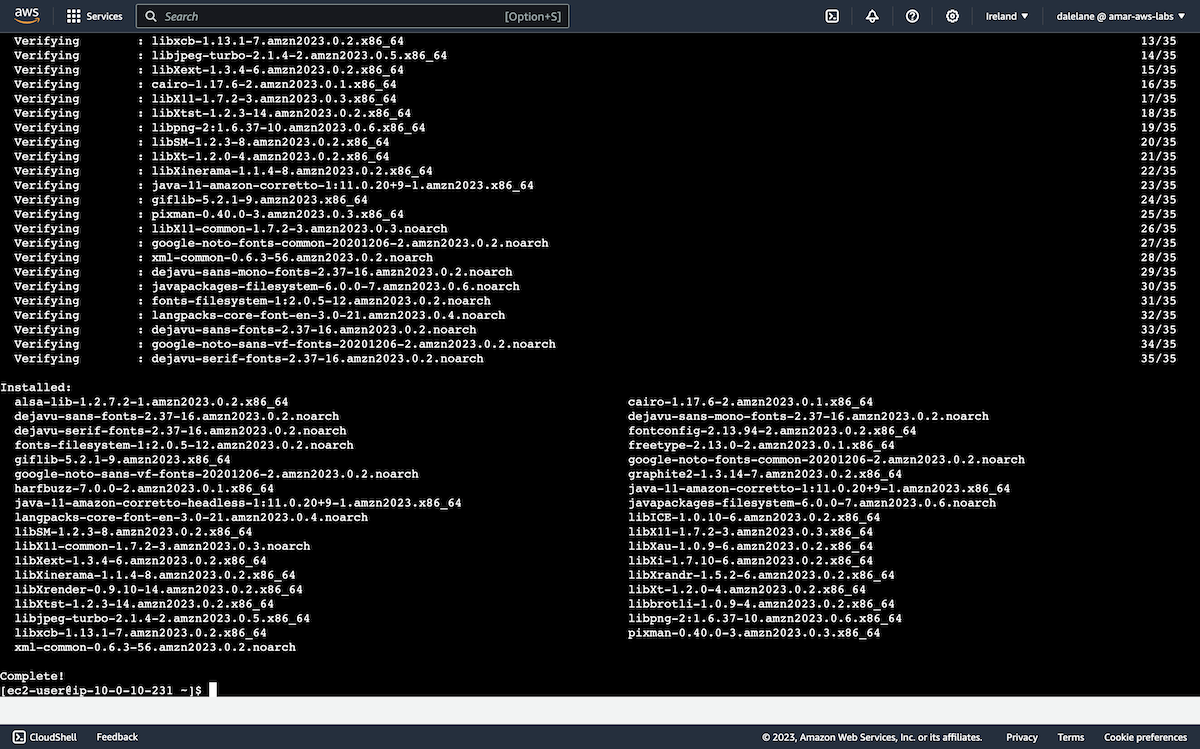

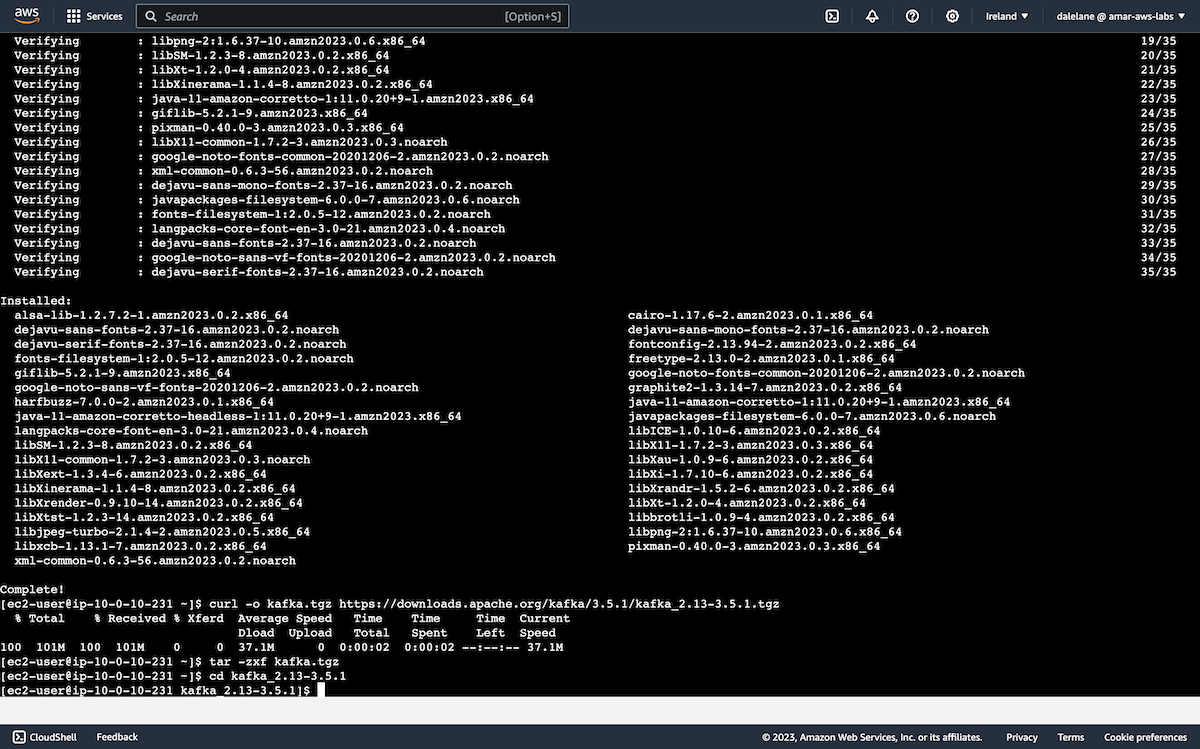

Next, we downloaded Kafka.

```shell
curl -o kafka.tgz https://downloads.apache.org/kafka/3.5.1/kafka_2.13-3.5.1.tgz
tar -zxf kafka.tgz
cd kafka_2.13-3.5.1
```

We matched the version of the MSK cluster (but it would still have worked if we had picked a different version).
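The download URL above follows a fixed pattern. One caveat: downloads.apache.org only keeps current releases, and older ones are moved to archive.apache.org, so the URL above may stop working once 3.5.1 is no longer a current release. The following is a minimal sketch of a download helper that falls back to the archive, assuming the Scala 2.13 build; `kafka_download_url` and `download_kafka` are hypothetical names, not part of the Kafka distribution.

```shell
# Print the download URL for a Kafka release.
# Arguments: version, Scala build (default 2.13), mirror base URL.
kafka_download_url() {
  local version=$1
  local scala=${2:-2.13}
  local mirror=${3:-https://downloads.apache.org/kafka}
  echo "$mirror/$version/kafka_$scala-$version.tgz"
}

# Try the main download site first, then fall back to the Apache archive,
# which keeps every past release.
download_kafka() {
  local version=$1
  curl -fsSL -o kafka.tgz "$(kafka_download_url "$version")" ||
    curl -fsSL -o kafka.tgz \
      "$(kafka_download_url "$version" 2.13 https://archive.apache.org/dist/kafka)"
}

# Usage:
# download_kafka 3.5.1 && tar -zxf kafka.tgz && cd kafka_2.13-3.5.1
```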

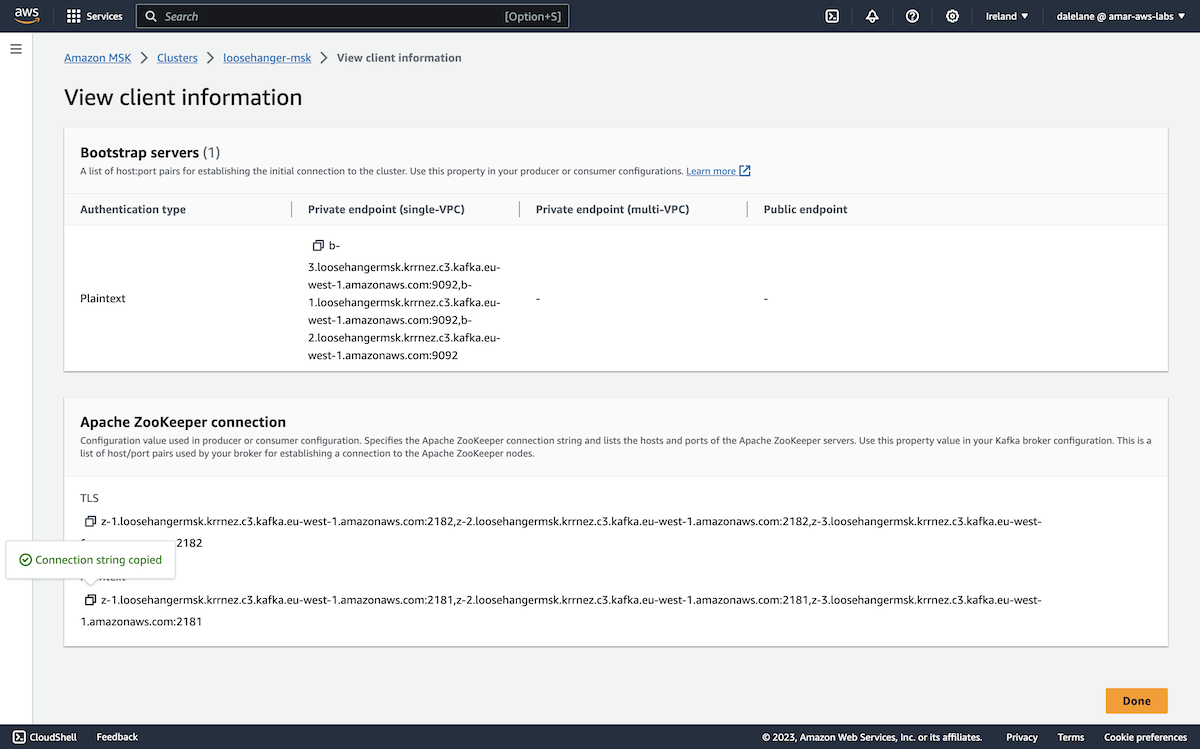

To run the Kafka admin tools, we first needed to get connection details for the MSK cluster.

From the MSK cluster page, we clicked View client information.

We copied both the bootstrap address (labelled as the Private endpoint in the Bootstrap servers section) and the Plaintext ZooKeeper address.

Notice that as we hadn't yet enabled public access, these were both private DNS addresses, but our EC2 server (running in the same VPC) would be able to access them.

We created BOOTSTRAP and ZOOKEEPER environment variables using our copied values.

```shell
export BOOTSTRAP=b-3.loosehangermsk.krrnez.c3.kafka.eu-west-1.amazonaws.com:9092,b-1.loosehangermsk.krrnez.c3.kafka.eu-west-1.amazonaws.com:9092,b-2.loosehangermsk.krrnez.c3.kafka.eu-west-1.amazonaws.com:9092
export ZOOKEEPER=z-1.loosehangermsk.krrnez.c3.kafka.eu-west-1.amazonaws.com:2181,z-2.loosehangermsk.krrnez.c3.kafka.eu-west-1.amazonaws.com:2181,z-3.loosehangermsk.krrnez.c3.kafka.eu-west-1.amazonaws.com:2181
```

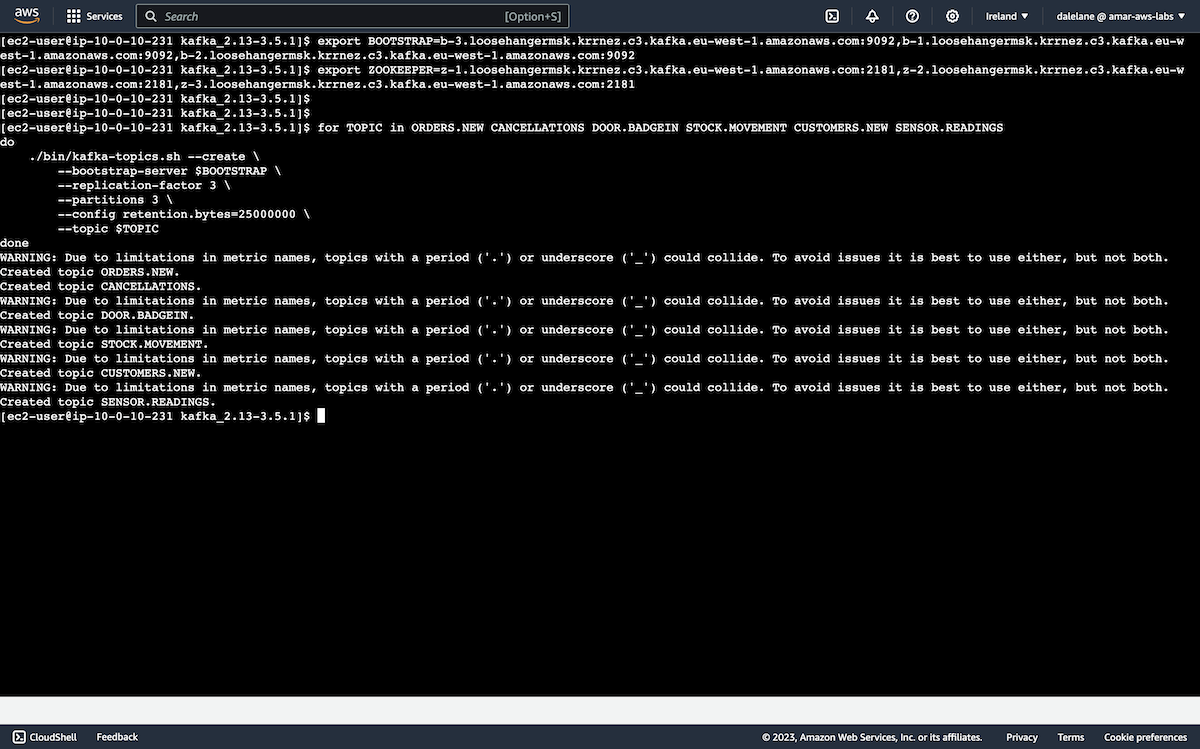

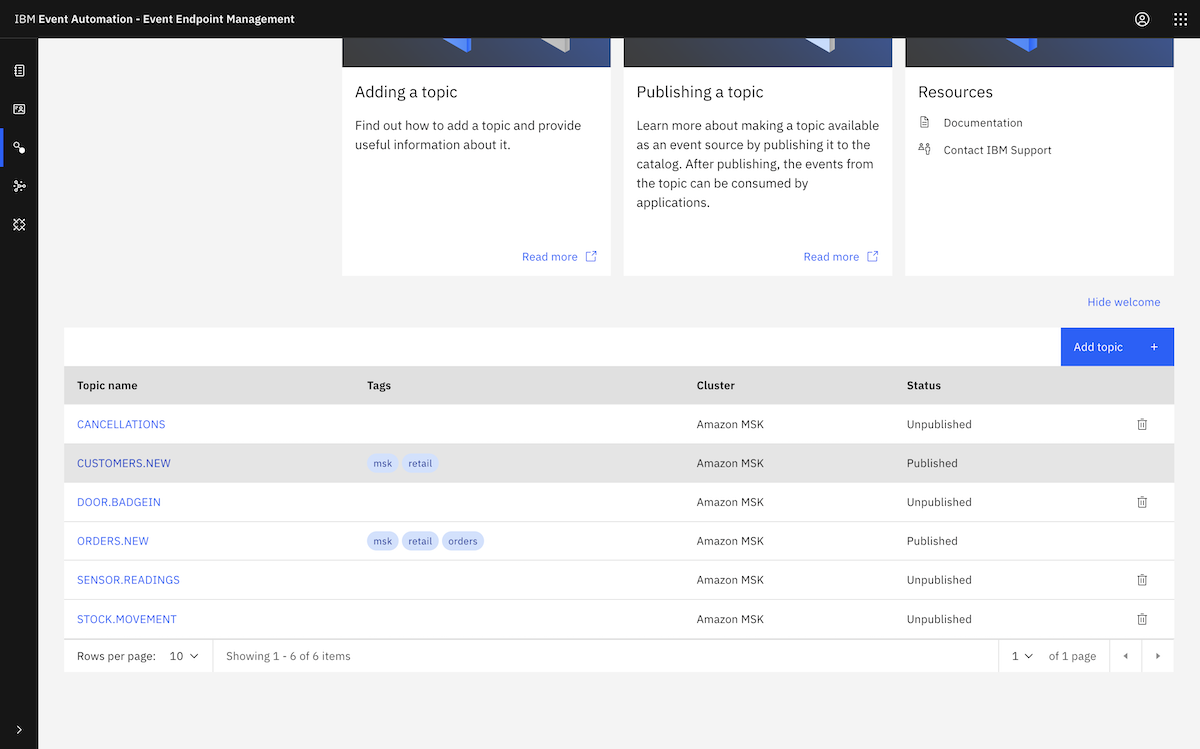

This was enough to let us create the topics we needed for our demonstration. We created a set of topics that are needed for the initial set of IBM Event Automation tutorials.

```shell
for TOPIC in ORDERS.NEW CANCELLATIONS DOOR.BADGEIN STOCK.MOVEMENT CUSTOMERS.NEW SENSOR.READINGS
do
  ./bin/kafka-topics.sh --create \
    --bootstrap-server "$BOOTSTRAP" \
    --replication-factor 3 \
    --partitions 3 \
    --config retention.bytes=25000000 \
    --topic "$TOPIC"
done
```

You can see a list of these topics, together with a description of the events that we would be producing to them, in the documentation for the data generator we use for these demos.
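To confirm that all six topics were created, the output of `kafka-topics.sh --list` can be compared with the expected list. This is a minimal sketch; `missing_topics` and `EXPECTED_TOPICS` are hypothetical names, not part of the Kafka tools.

```shell
# The topics the tutorials need (the same list as the loop above).
EXPECTED_TOPICS="ORDERS.NEW CANCELLATIONS DOOR.BADGEIN STOCK.MOVEMENT CUSTOMERS.NEW SENSOR.READINGS"

# Read topic names (one per line) on stdin and print any expected topic
# that isn't among them. Prints nothing when every topic exists.
missing_topics() {
  local existing topic
  existing=$(cat)
  for topic in $EXPECTED_TOPICS; do
    printf '%s\n' "$existing" | grep -qxF "$topic" || echo "$topic"
  done
}

# Usage:
# ./bin/kafka-topics.sh --list --bootstrap-server "$BOOTSTRAP" | missing_topics
```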

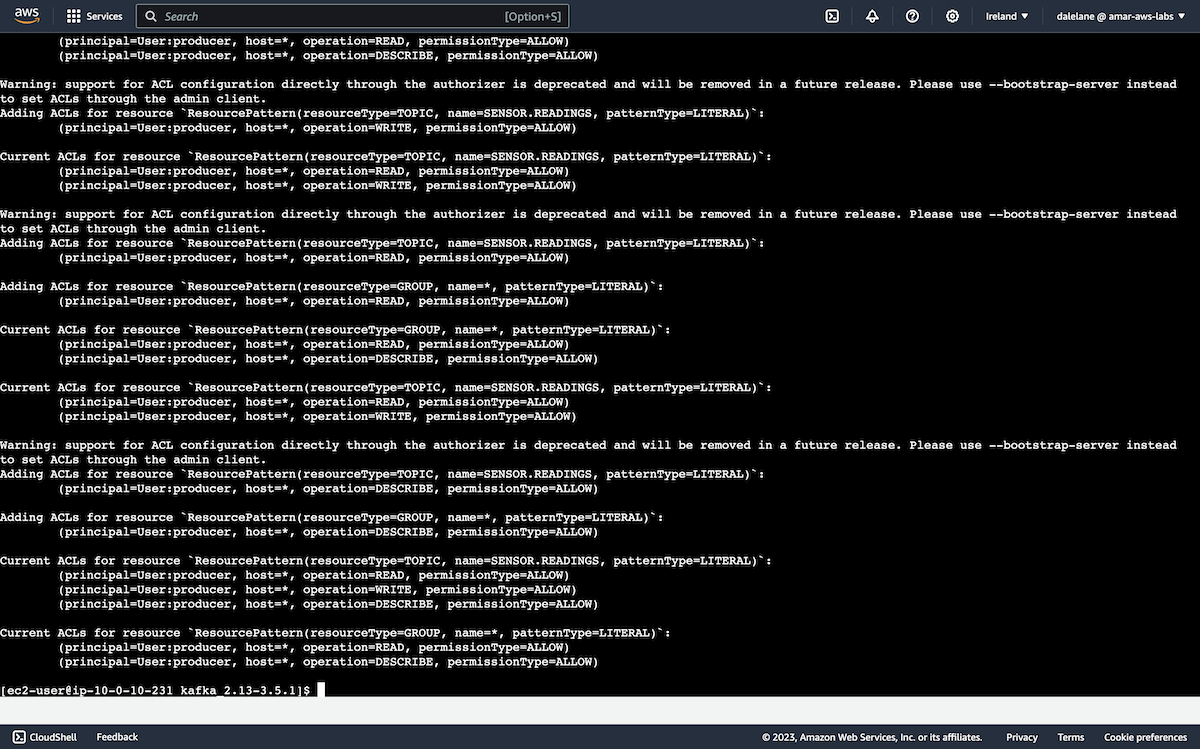

With the topics created, it was time to start setting up authentication.

We started by setting up permissions for a user able to produce events to, and consume events from, all of our topics.

We named this user producer.

```shell
for TOPIC in ORDERS.NEW CANCELLATIONS DOOR.BADGEIN STOCK.MOVEMENT CUSTOMERS.NEW SENSOR.READINGS
do
  ./bin/kafka-acls.sh --add \
    --authorizer-properties zookeeper.connect="$ZOOKEEPER" \
    --allow-principal "User:producer" \
    --operation Write \
    --topic "$TOPIC"

  ./bin/kafka-acls.sh --add \
    --authorizer-properties zookeeper.connect="$ZOOKEEPER" \
    --allow-principal "User:producer" \
    --operation Read \
    --group="*" \
    --topic "$TOPIC"

  ./bin/kafka-acls.sh --add \
    --authorizer-properties zookeeper.connect="$ZOOKEEPER" \
    --allow-principal "User:producer" \
    --operation Describe \
    --group="*" \
    --topic "$TOPIC"
done
```

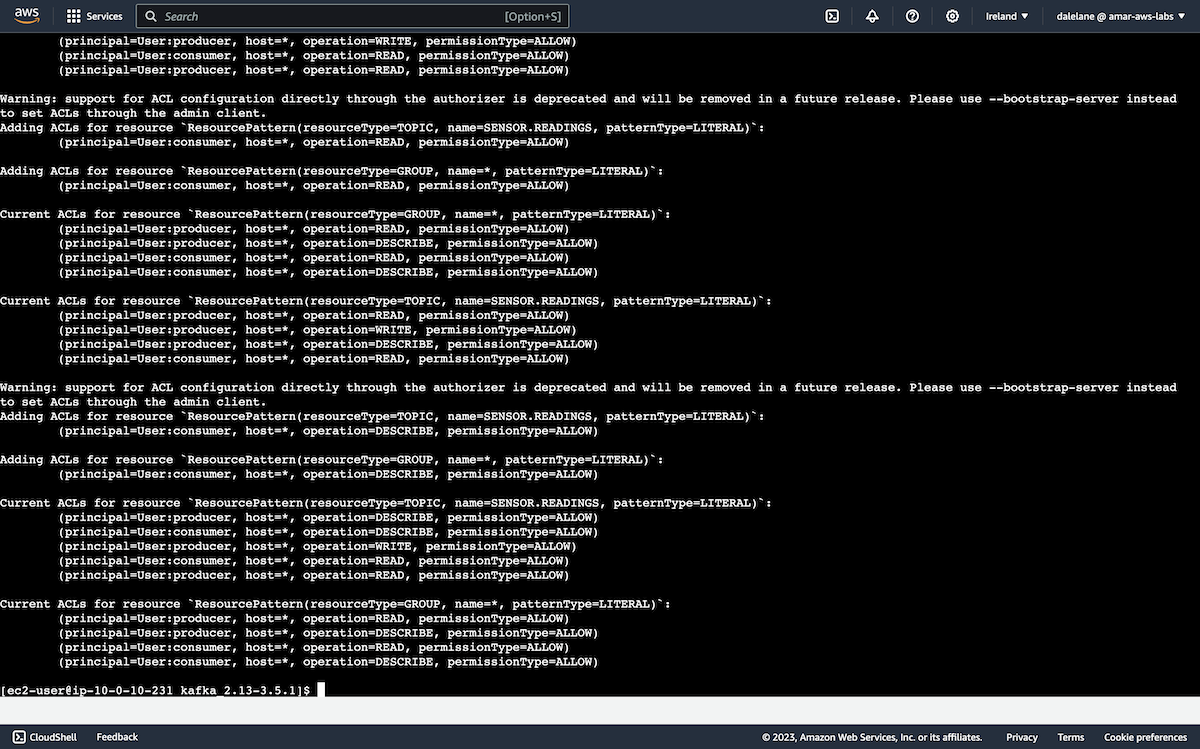

Next, we set up more limited permissions for a second user, which would only be allowed to consume events from our topics.

We named this user consumer.

```shell
for TOPIC in ORDERS.NEW CANCELLATIONS DOOR.BADGEIN STOCK.MOVEMENT CUSTOMERS.NEW SENSOR.READINGS
do
  ./bin/kafka-acls.sh --add \
    --authorizer-properties zookeeper.connect="$ZOOKEEPER" \
    --allow-principal "User:consumer" \
    --operation Read \
    --group="*" \
    --topic "$TOPIC"

  ./bin/kafka-acls.sh --add \
    --authorizer-properties zookeeper.connect="$ZOOKEEPER" \
    --allow-principal "User:consumer" \
    --operation Describe \
    --group="*" \
    --topic "$TOPIC"
done
```
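The producer and consumer loops share the same structure, so they could be folded into a single helper. This is a sketch, assuming it is run from the Kafka directory with `ZOOKEEPER` set as above; `grant_topic_acls`, `add_acl`, `TOPICS` and the `KAFKA_ACLS` override are hypothetical names. As in the original loops, `--group` is only passed for Read and Describe. Setting `KAFKA_ACLS=echo` turns it into a dry run that prints the commands instead of running them.

```shell
# Path to the ACL tool; set KAFKA_ACLS=echo for a dry run that prints the commands.
KAFKA_ACLS=${KAFKA_ACLS:-./bin/kafka-acls.sh}
TOPICS="ORDERS.NEW CANCELLATIONS DOOR.BADGEIN STOCK.MOVEMENT CUSTOMERS.NEW SENSOR.READINGS"

# Add one ACL: user, operation, topic, plus an optional extra argument
# (used here for --group=*). Uses $ZOOKEEPER, as in the loops above.
add_acl() {
  $KAFKA_ACLS --add \
    --authorizer-properties "zookeeper.connect=$ZOOKEEPER" \
    --allow-principal "User:$1" \
    --operation "$2" \
    --topic "$3" \
    ${4:+"$4"}
}

# Grant a user each listed operation on every topic in $TOPICS.
# Read and Describe are also granted on all consumer groups, matching
# the loops above; other operations (Write) apply to the topic only.
grant_topic_acls() {
  local user=$1 topic op
  shift
  for topic in $TOPICS; do
    for op in "$@"; do
      case $op in
        Read|Describe) add_acl "$user" "$op" "$topic" '--group=*' ;;
        *) add_acl "$user" "$op" "$topic" ;;
      esac
    done
  done
}

# Usage (equivalent to the producer and consumer loops above):
# grant_topic_acls producer Write Read Describe
# grant_topic_acls consumer Read Describe
```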

We didn't have any Kafka administration left to do, so we were finished with this EC2 admin instance. In the interests of cleaning up, and keeping the demo running costs down, we terminated the EC2 instance and deleted the new security group we created for it.

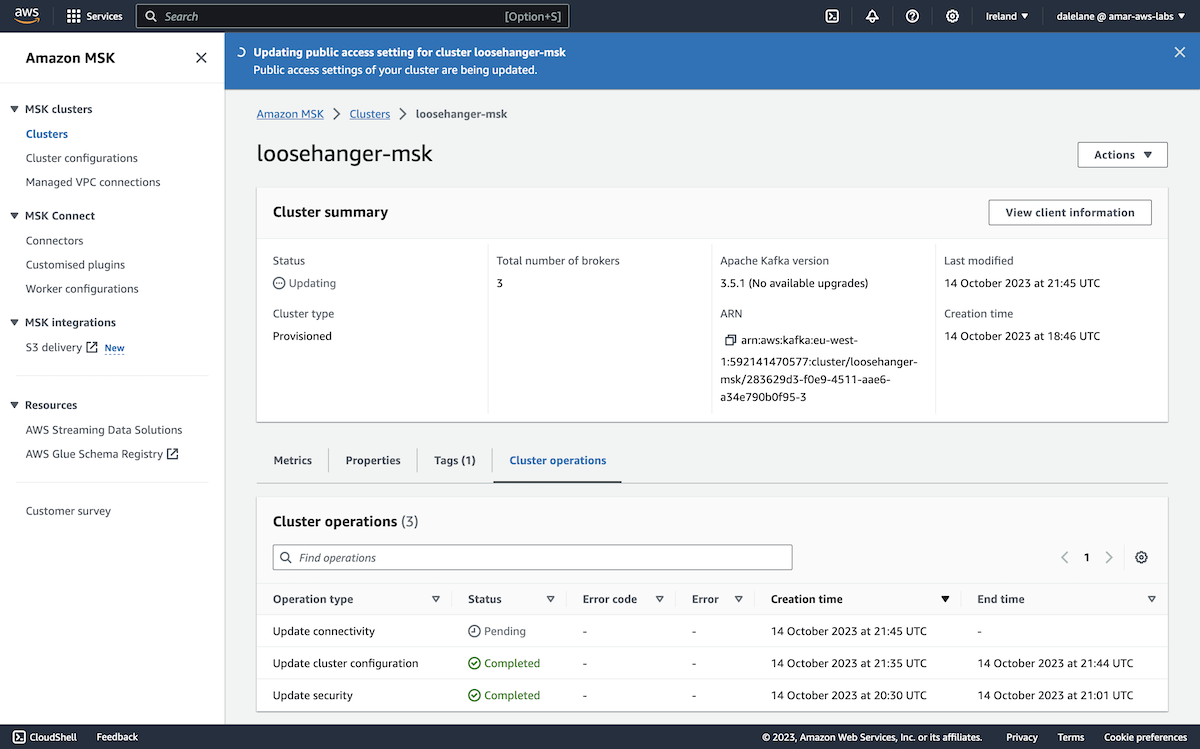

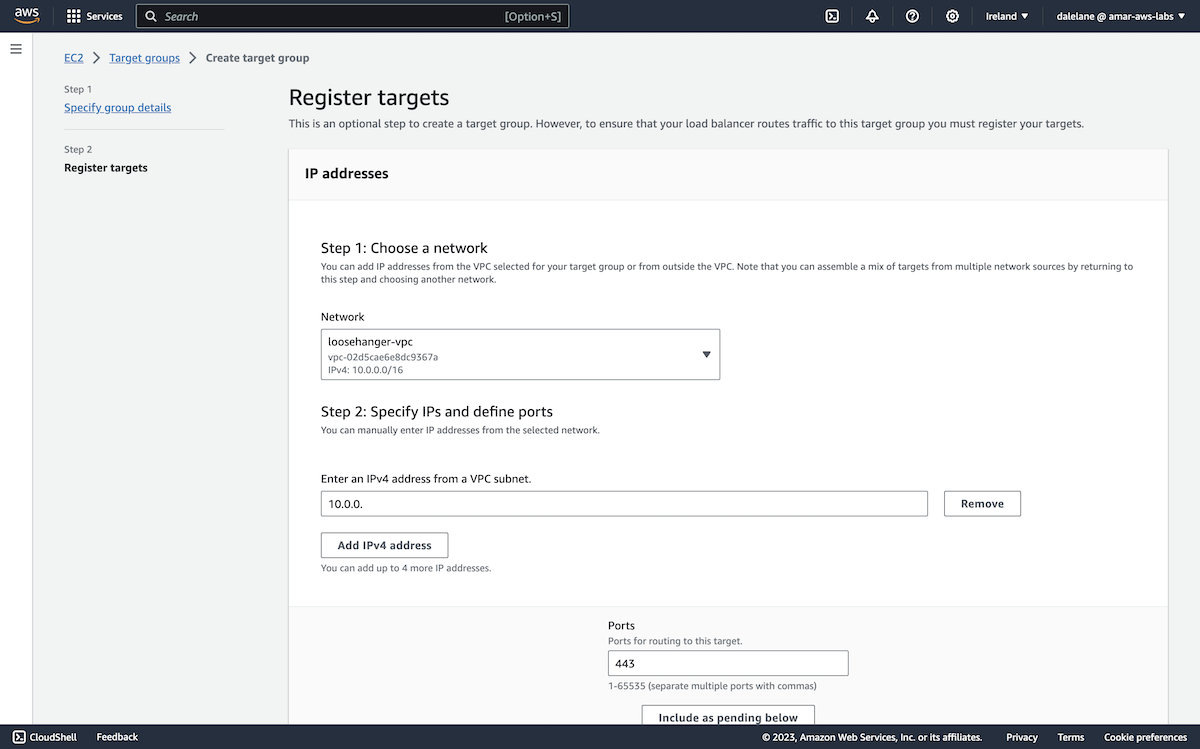

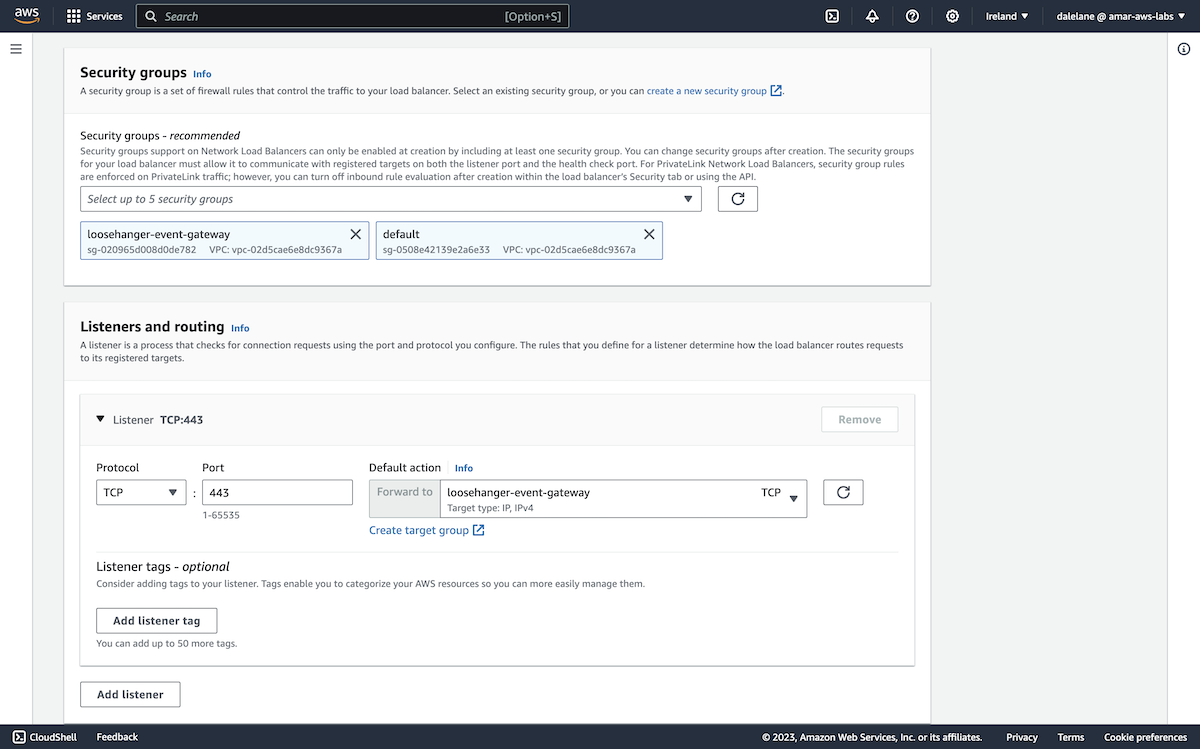

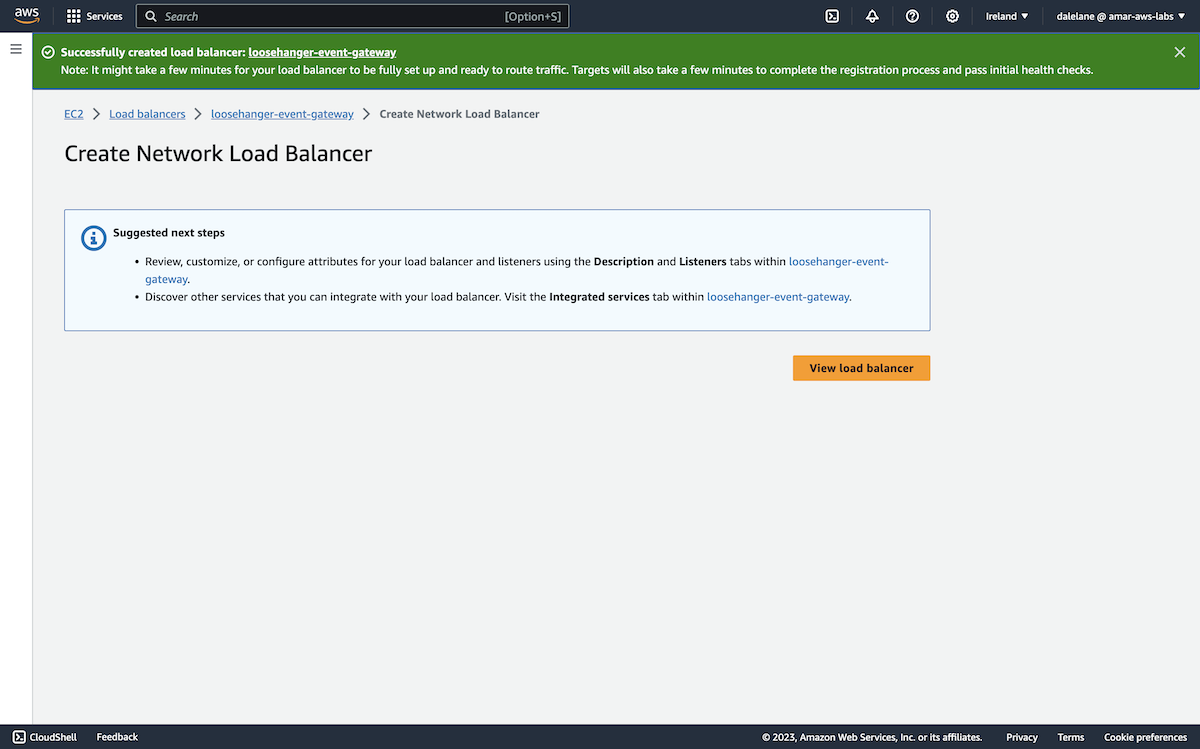

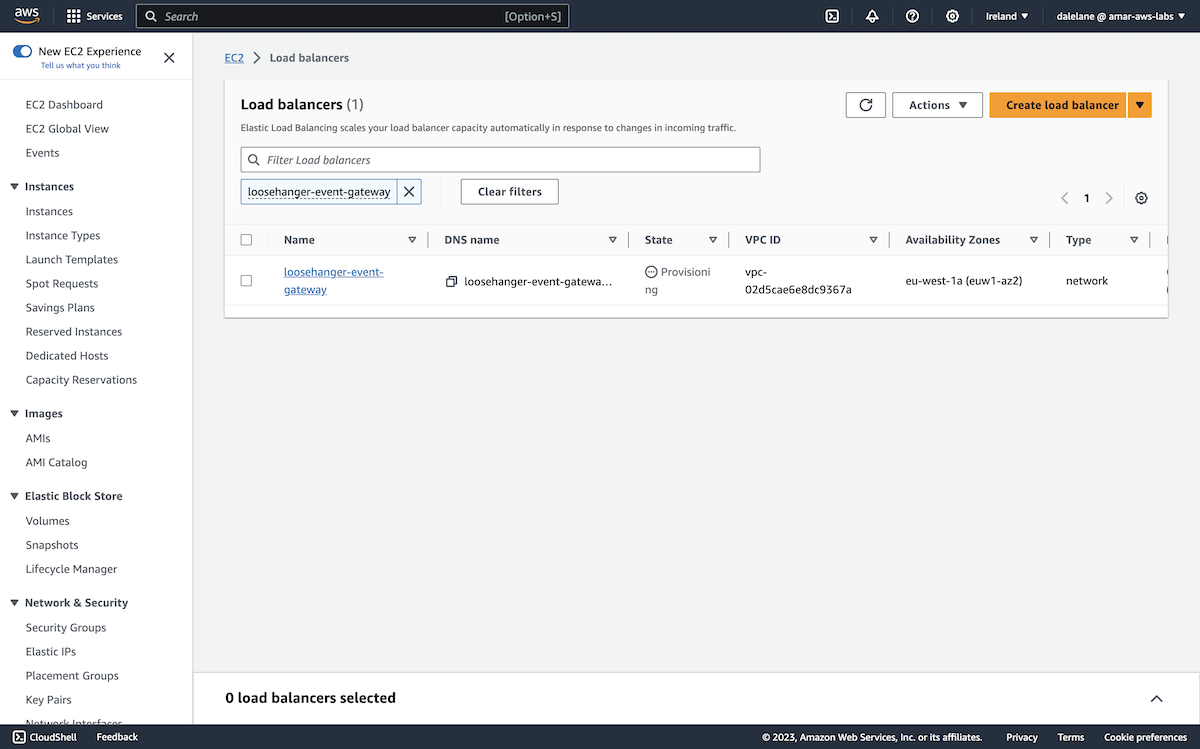

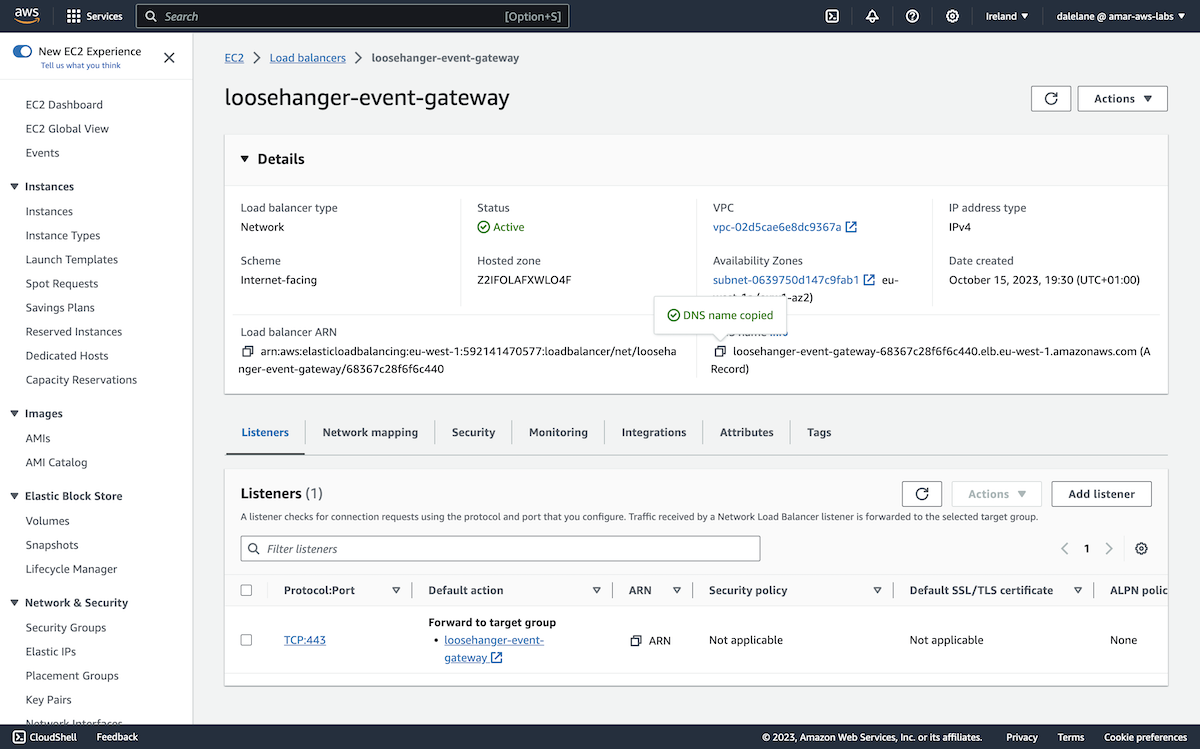

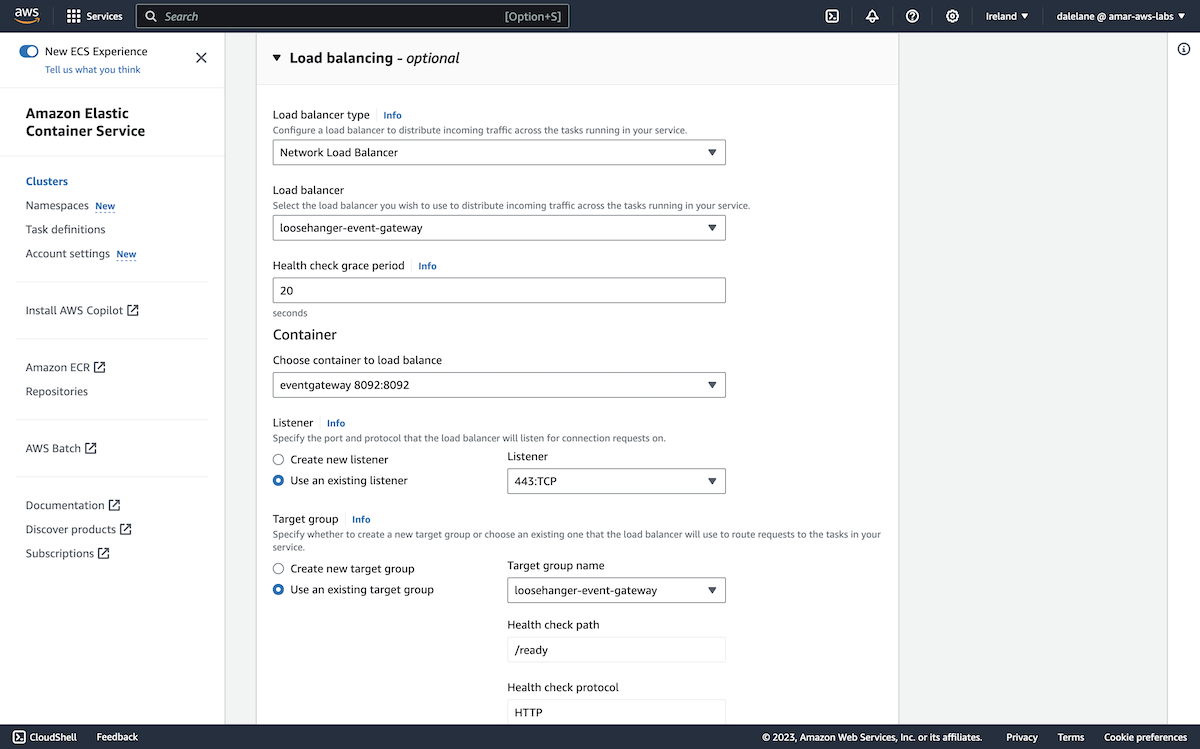

Then we enabled public access to our MSK cluster.

We started by turning on security. We went back to the MSK cluster instance page, and clicked the Properties tab.

We scrolled to the Security settings section and clicked Edit.

We enabled SASL/SCRAM authentication. This automatically enabled client TLS encryption as well.

We clicked Save changes.

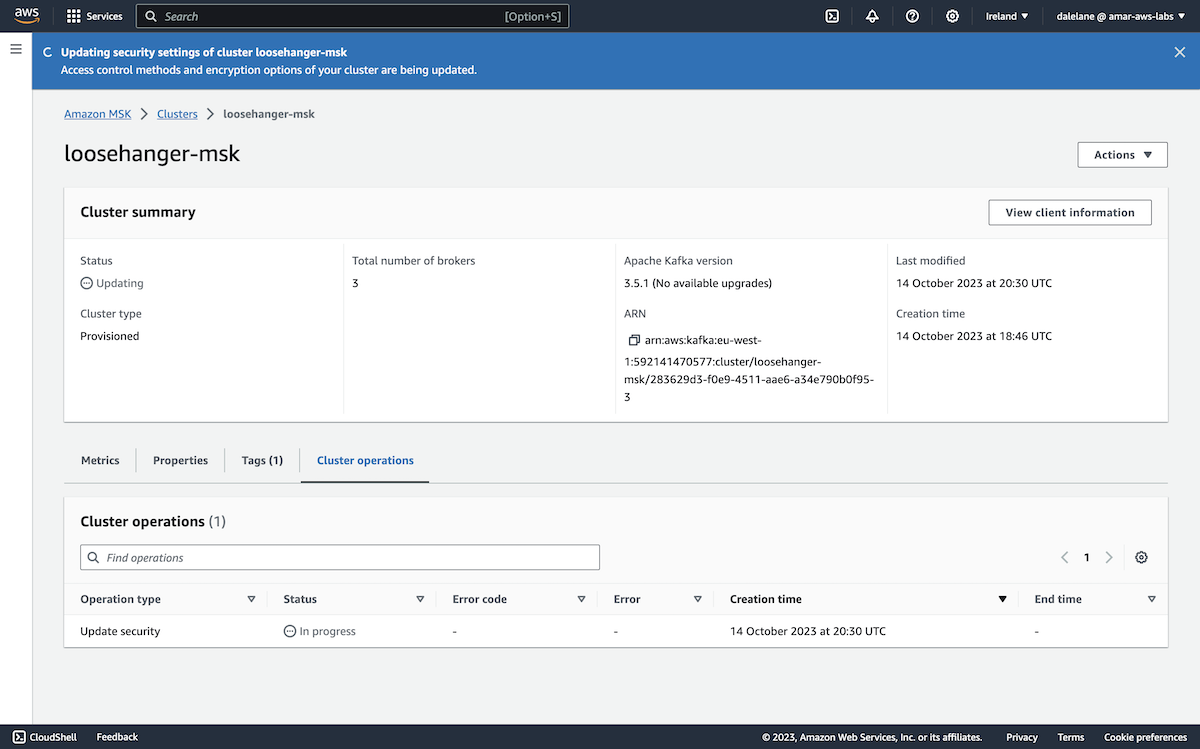

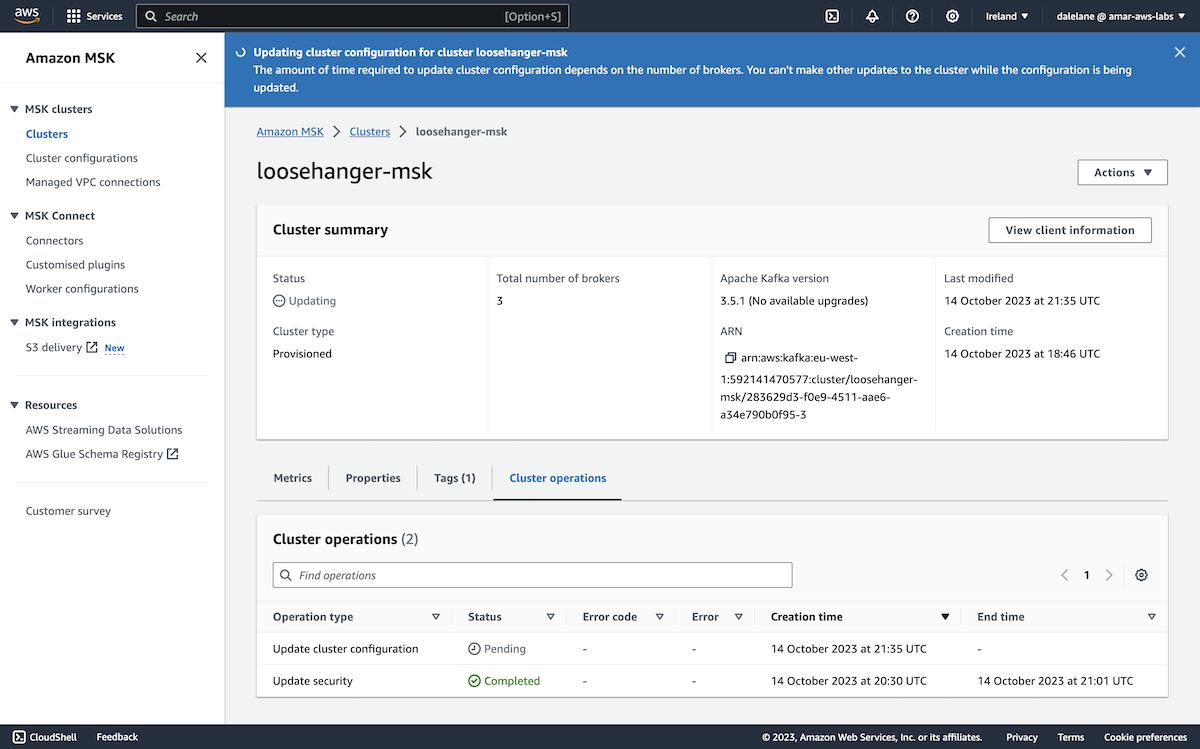

We needed to wait for the cluster to update.

(This took over thirty minutes, so this was a good point to take a break!)
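Rather than refreshing the console during long updates like this one, the cluster state can be polled with the AWS CLI. This is a minimal sketch, assuming the AWS CLI is installed and configured for the cluster's region; `wait_for_active`, `POLL_SECONDS` and `CLUSTER_ARN` are hypothetical names, and the ARN itself is shown on the MSK cluster page.

```shell
# Poll an MSK cluster until its state is ACTIVE (returns 0) or FAILED (returns 1).
# Assumes the AWS CLI is configured; POLL_SECONDS sets the delay between checks.
wait_for_active() {
  local arn=$1 state
  while :; do
    state=$(aws kafka describe-cluster --cluster-arn "$arn" \
      --query 'ClusterInfo.State' --output text) || return 1
    echo "$(date '+%H:%M:%S') $state"
    case $state in
      ACTIVE) return 0 ;;
      FAILED) return 1 ;;
    esac
    sleep "${POLL_SECONDS:-60}"
  done
}

# Usage:
# wait_for_active "$CLUSTER_ARN"
```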

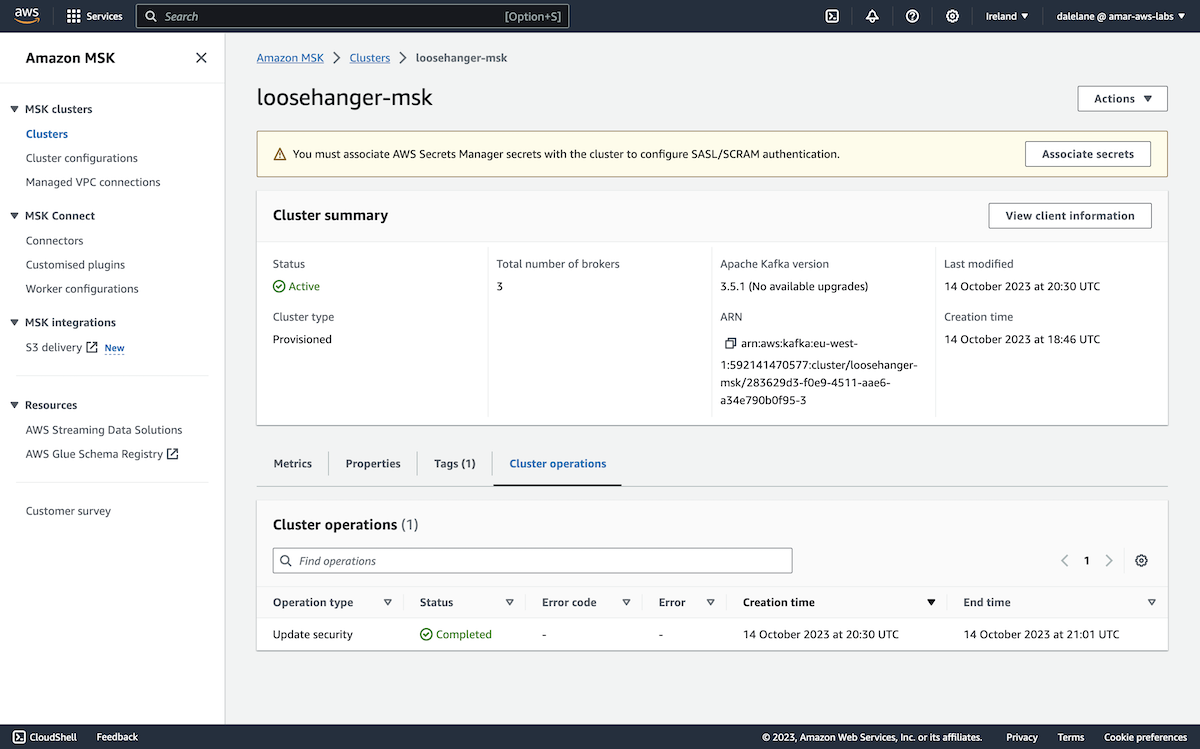

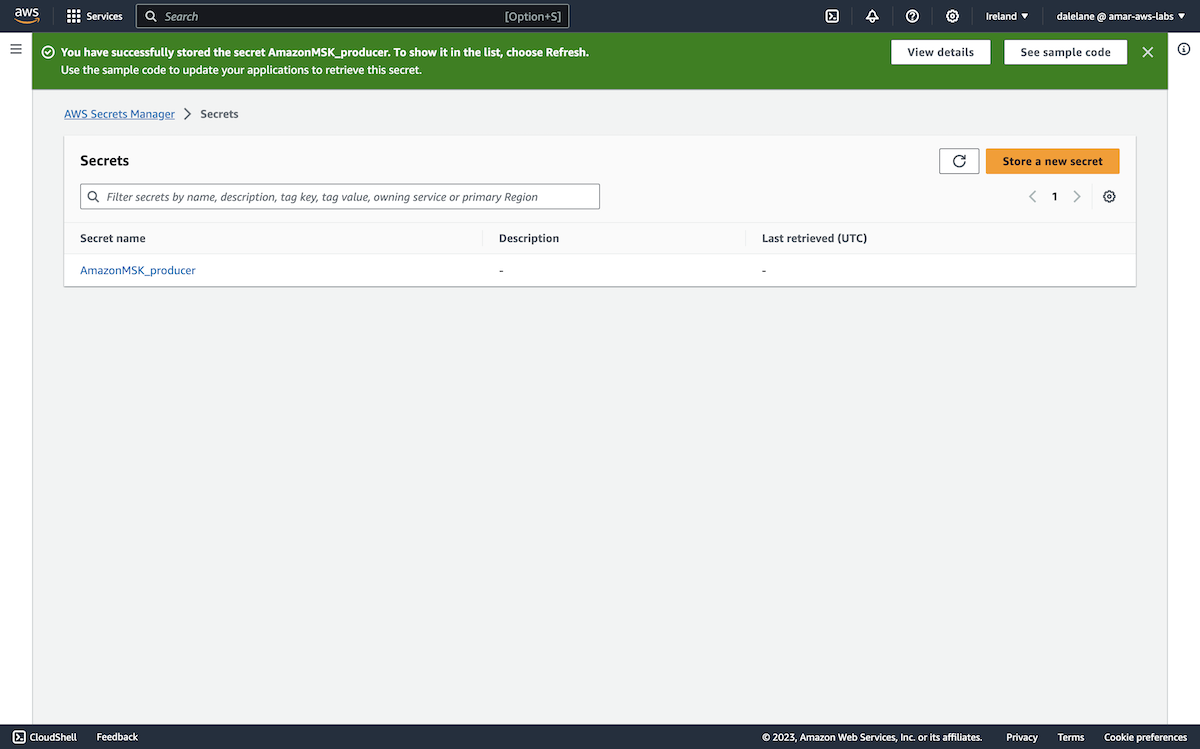

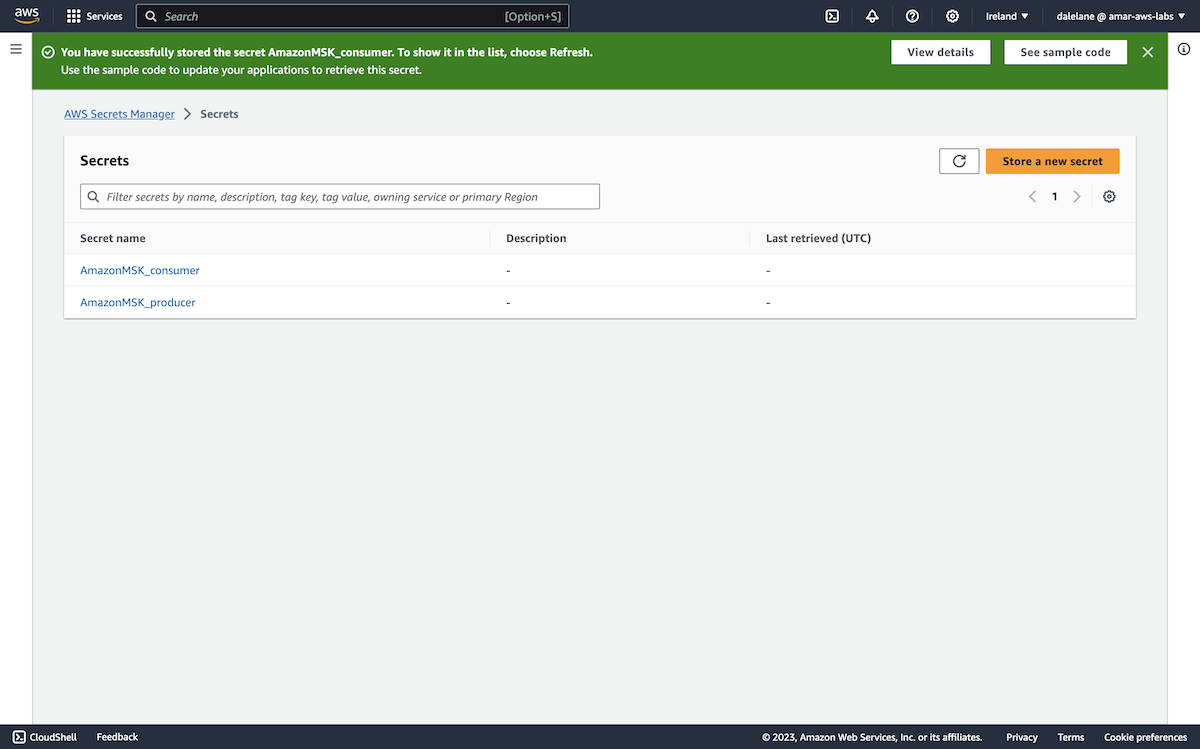

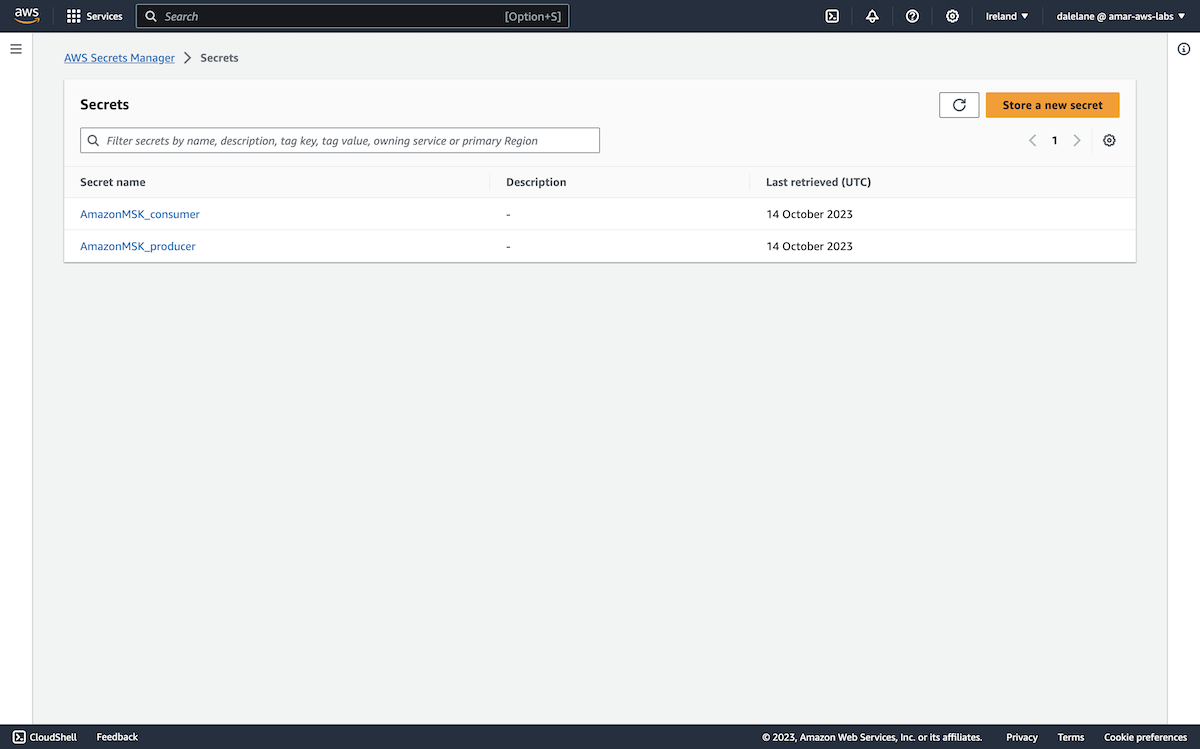

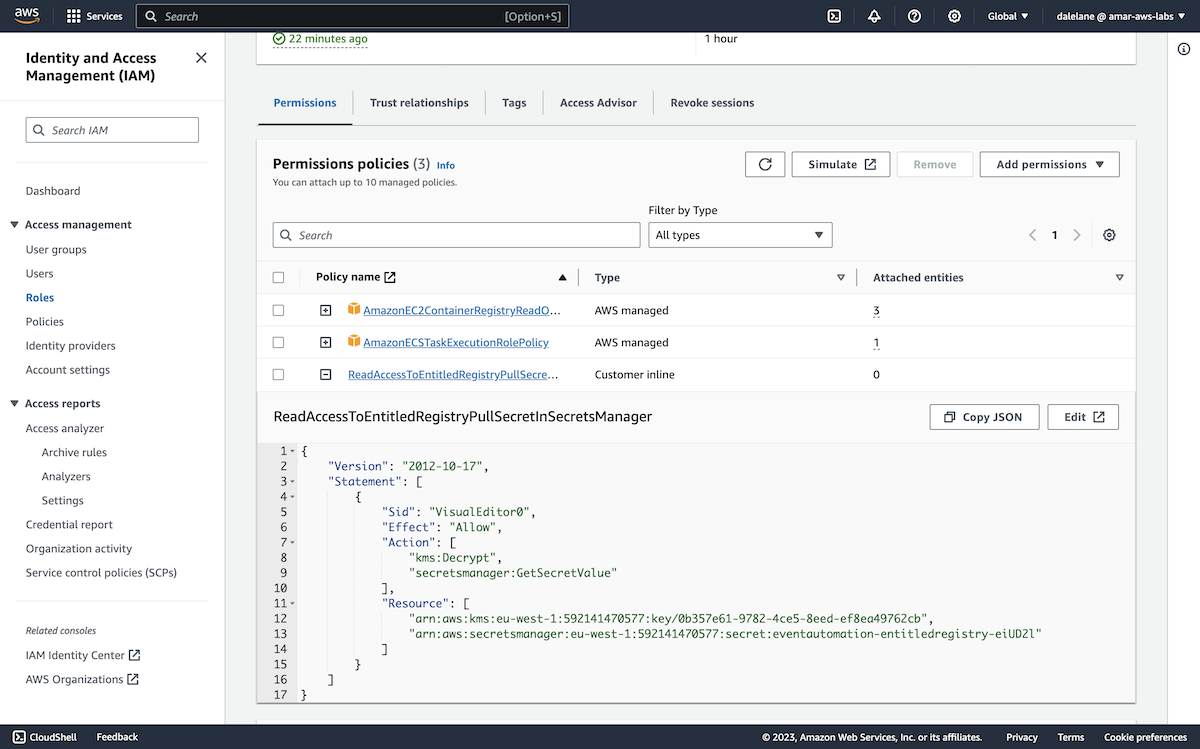

The prompt at the top of the MSK instance page showed that the next step was to set up secrets containing the usernames and passwords for Kafka clients to use.

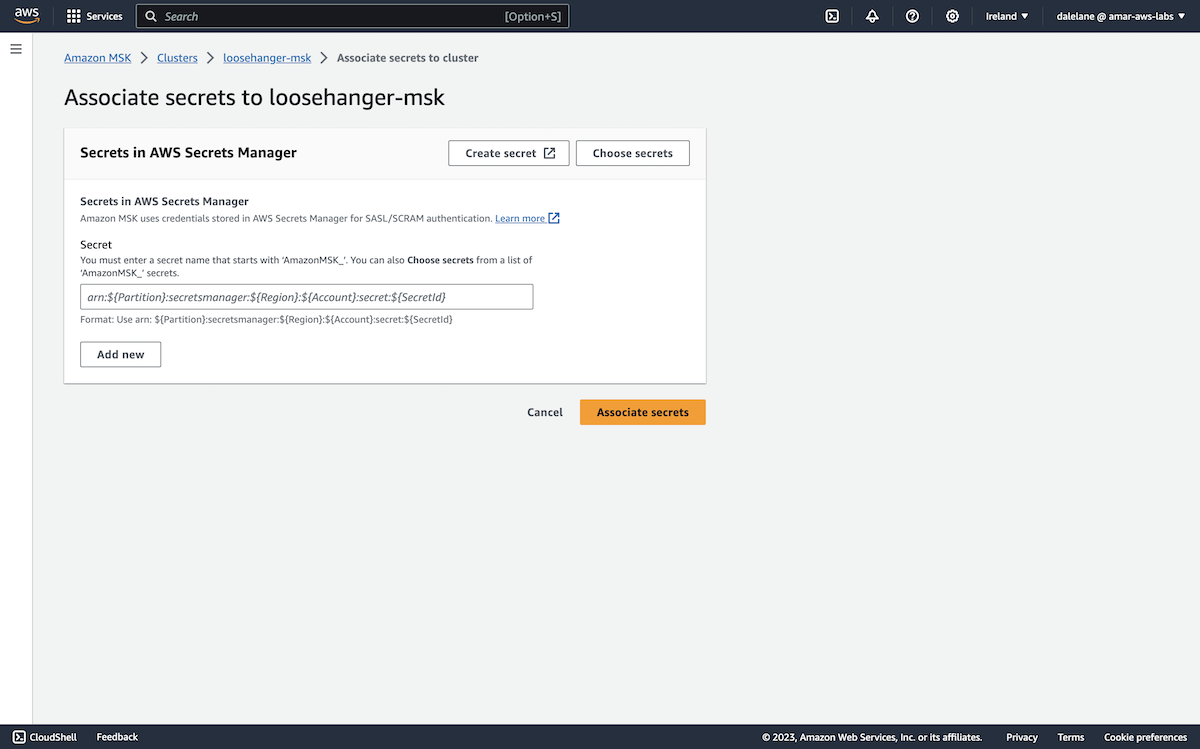

We clicked Associate secrets.

We wanted two secrets, one for our producer user and the other for our consumer user, each containing a username and password.

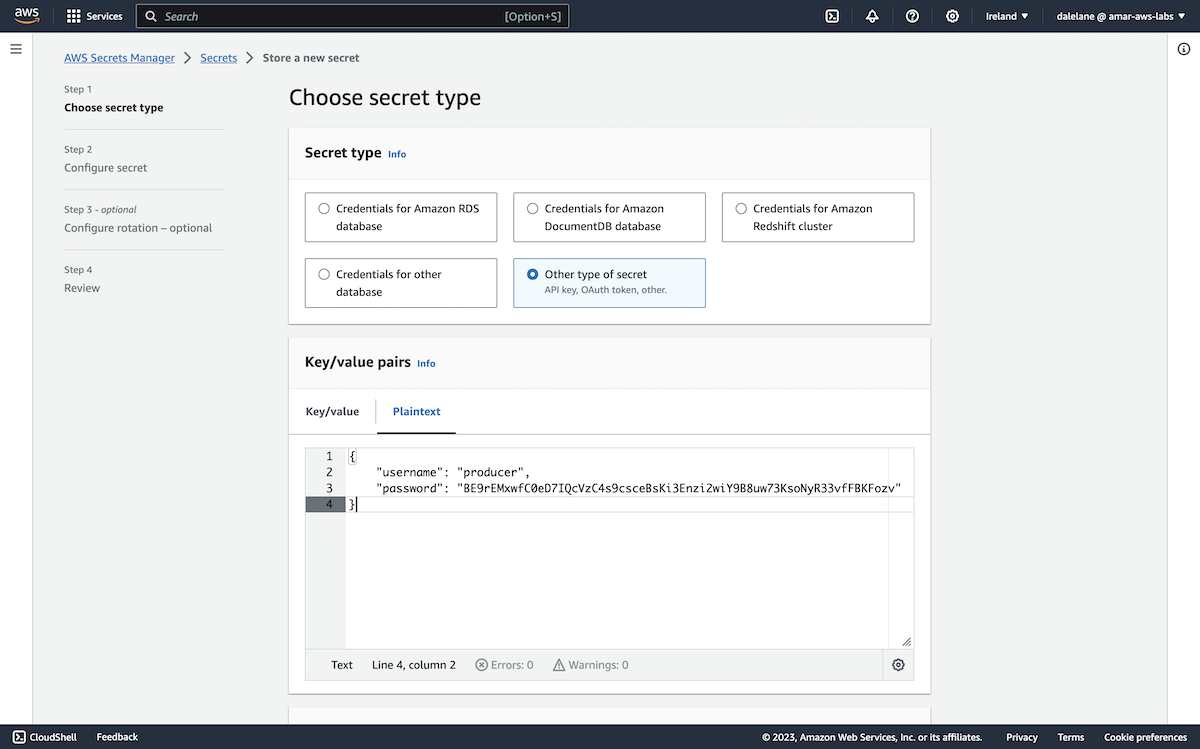

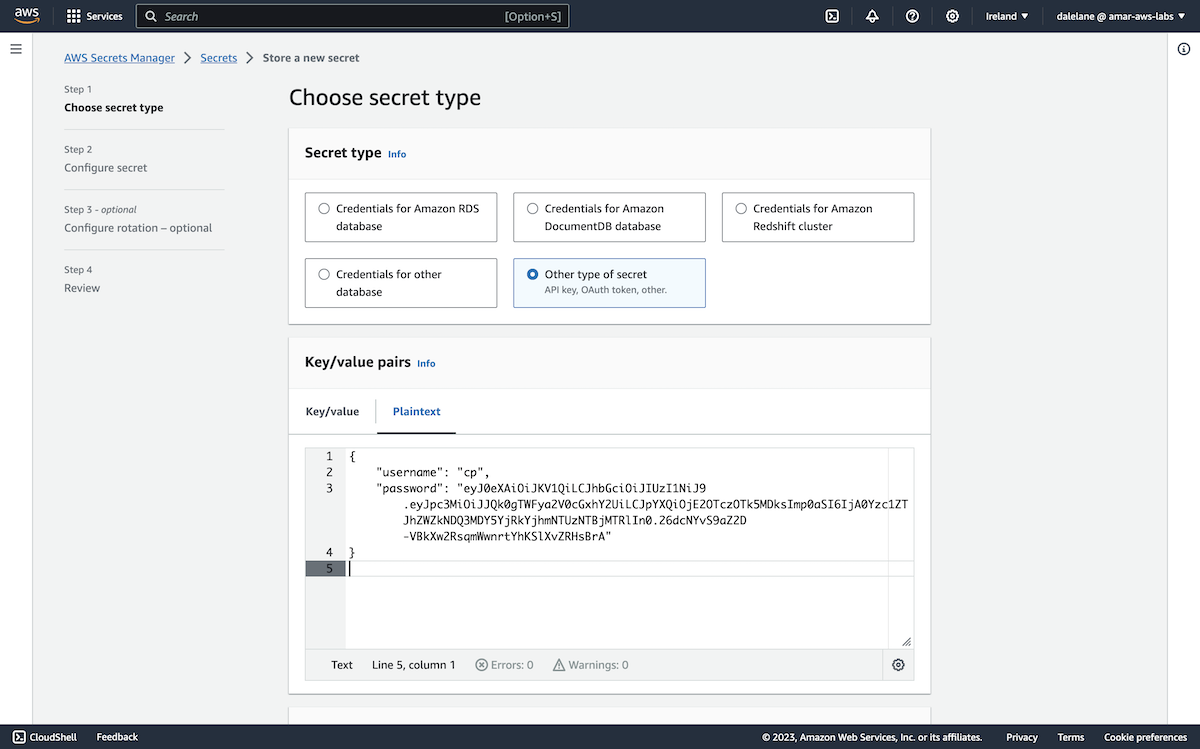

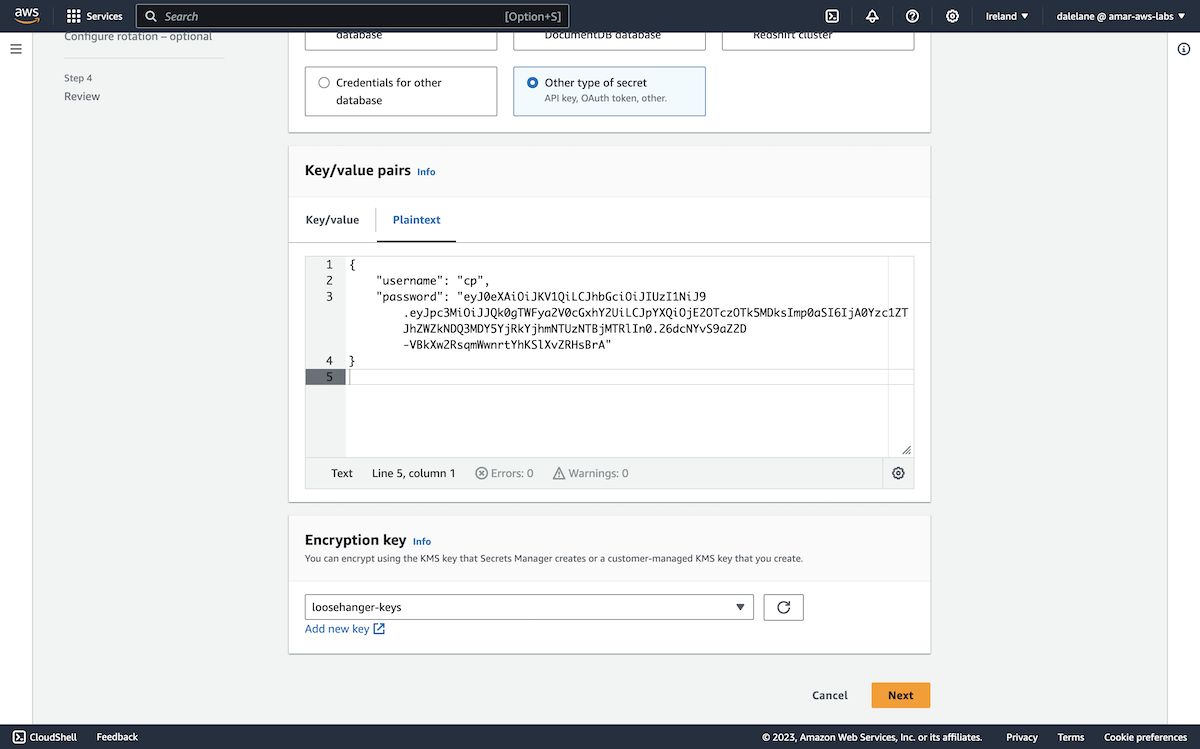

We clicked Create secret to get started.

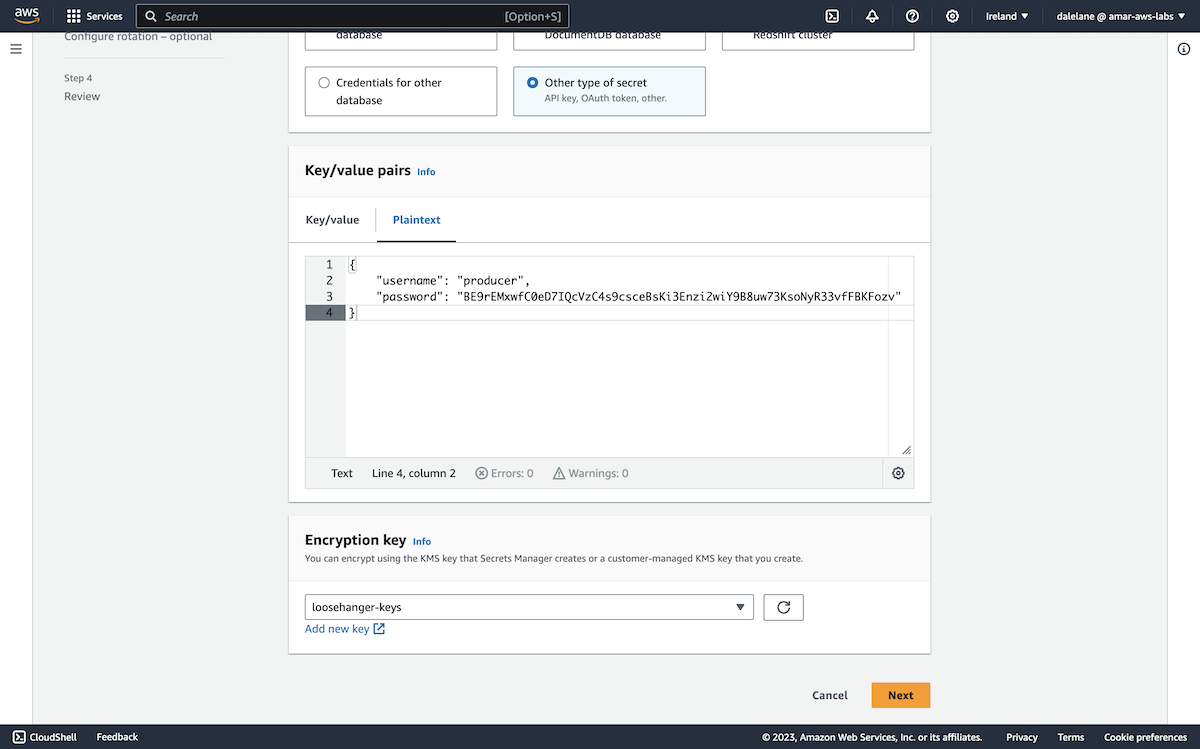

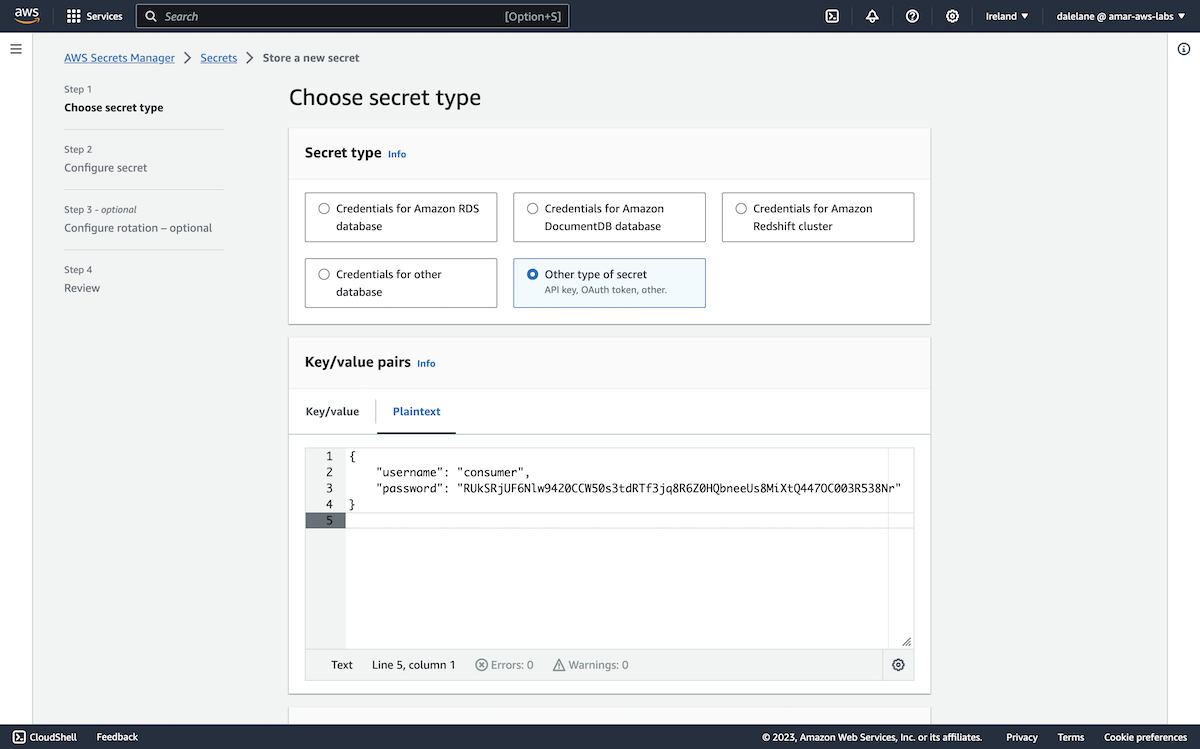

We chose the Other type of secret, and used the Plaintext tab to create a JSON payload with a random password we generated for the producer user.

```json
{
  "username": "producer",
  "password": "BE9rEMxwfC0eD7IQcVzC4s9csceBsKi3Enzi2wiY9B8uw73KsoNyR33vfFBKFozv"
}
```
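A long random password, and the JSON payload around it, can also be generated from a shell. This is a minimal sketch; `random_password` and `scram_secret_json` are hypothetical helpers. The JSON is built with `printf`, which is only safe because the generated passwords are alphanumeric, so there are no quotes or backslashes that would need escaping.

```shell
# Print a random alphanumeric password (default length 64) from /dev/urandom.
random_password() {
  LC_ALL=C tr -dc 'A-Za-z0-9' < /dev/urandom | head -c "${1:-64}"
}

# Print a secret payload with "username" and "password" keys.
# Only safe for values without quotes or backslashes (true for random_password).
scram_secret_json() {
  printf '{"username": "%s", "password": "%s"}\n' "$1" "$2"
}

# Usage:
# scram_secret_json producer "$(random_password)"
```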

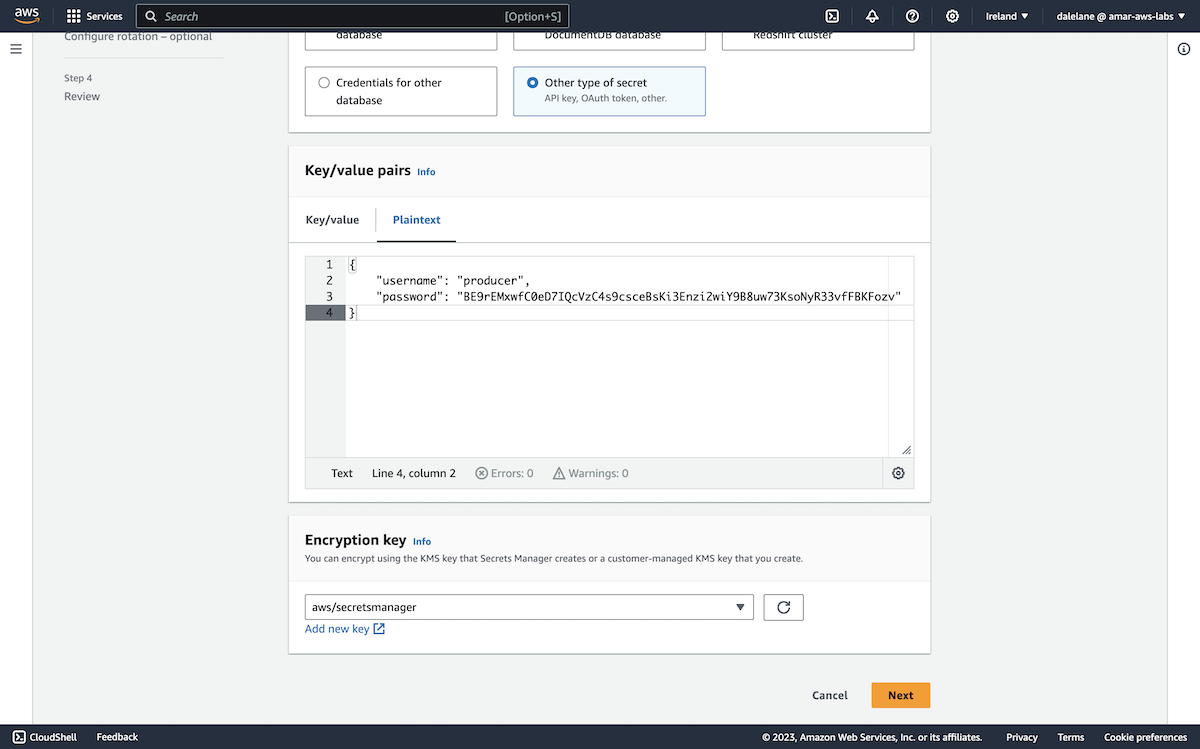

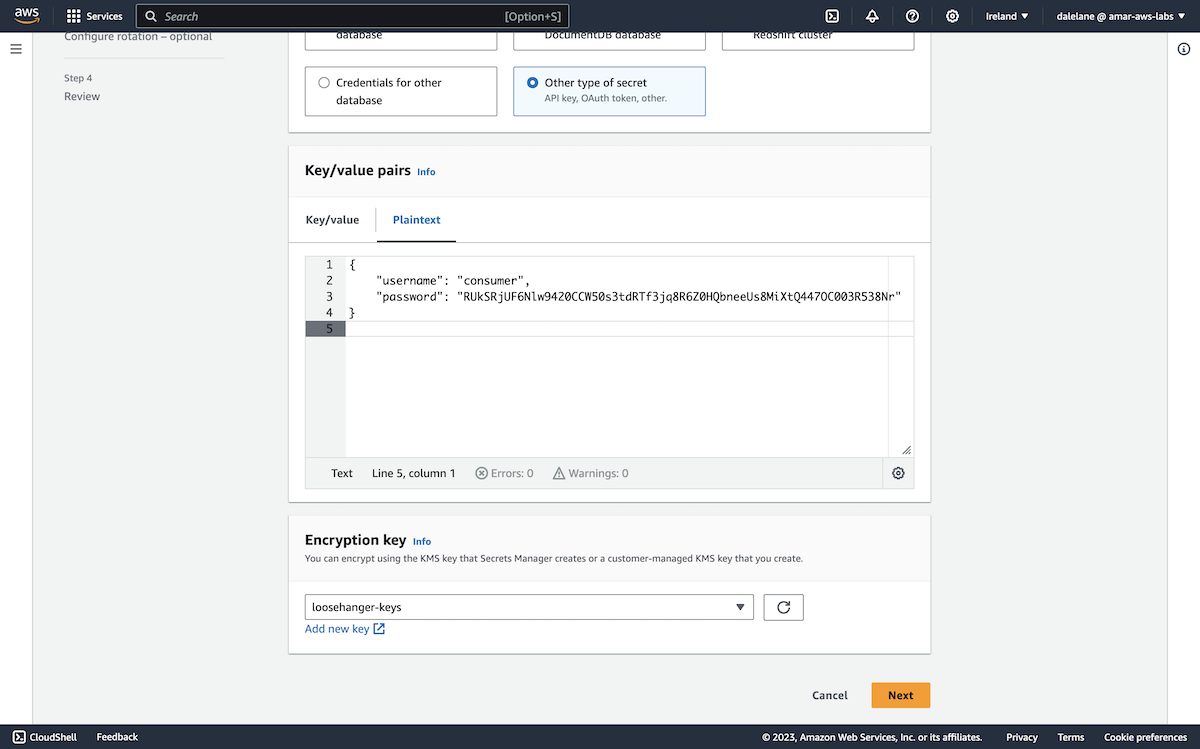

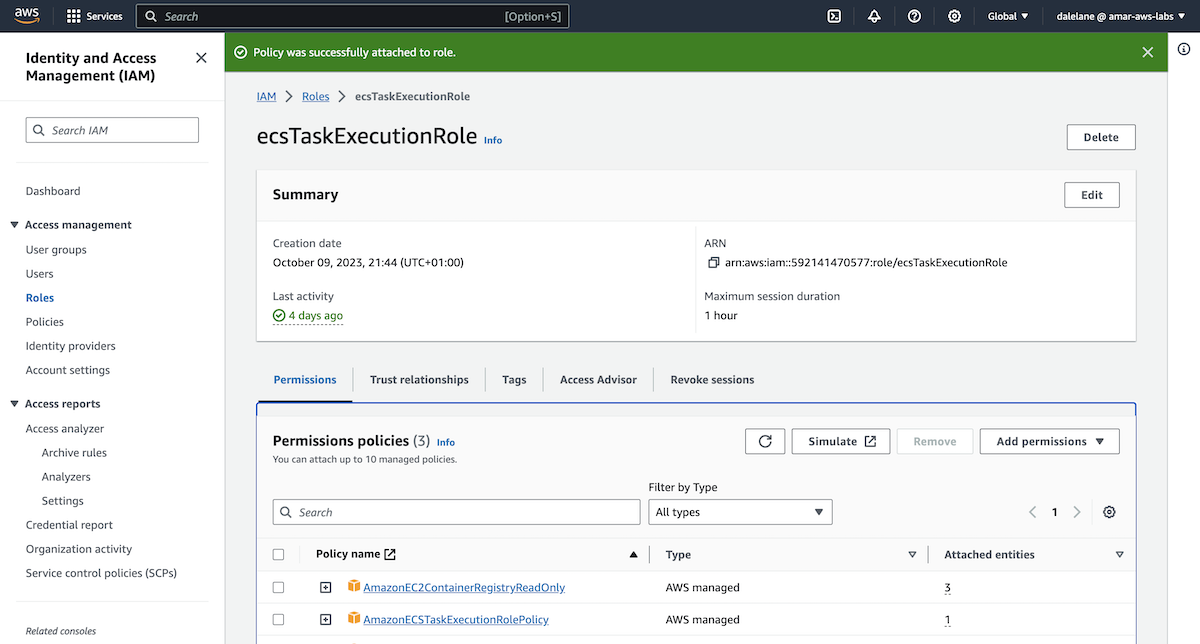

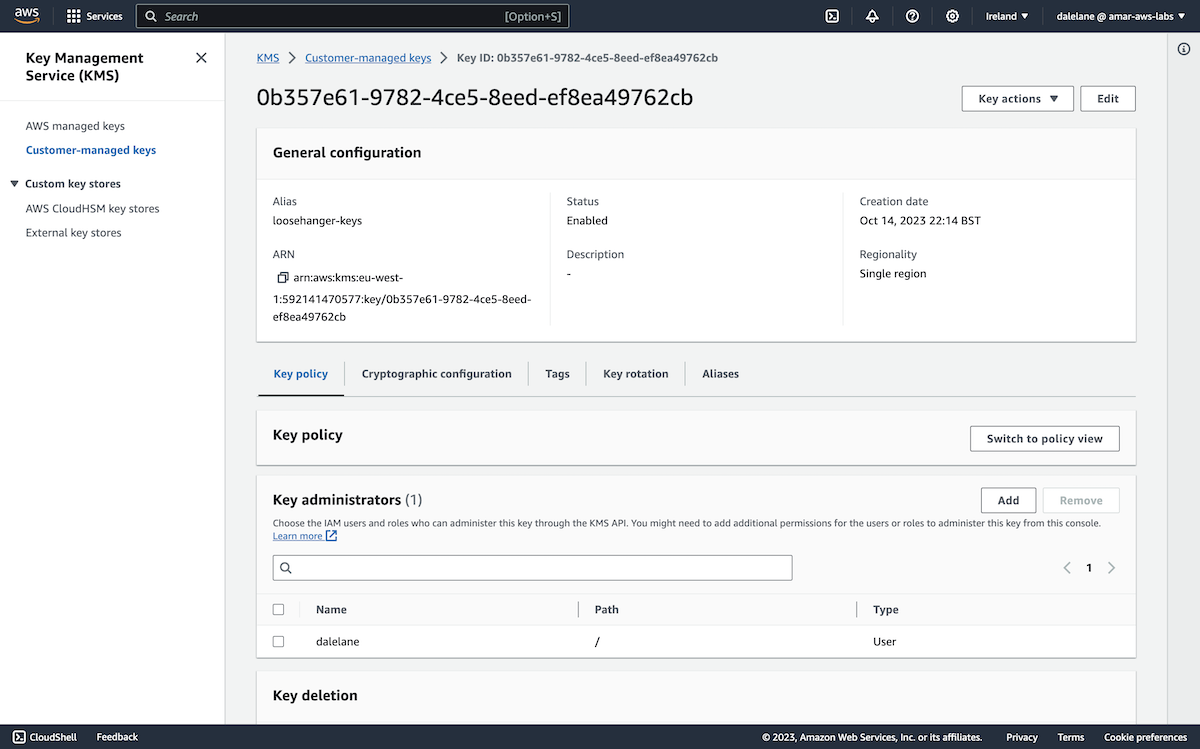

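We clicked Add new key to create a custom encryption key for this demo. (Amazon MSK can't use secrets that are encrypted with the default AWS managed key for Secrets Manager, so a customer managed key is needed.)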

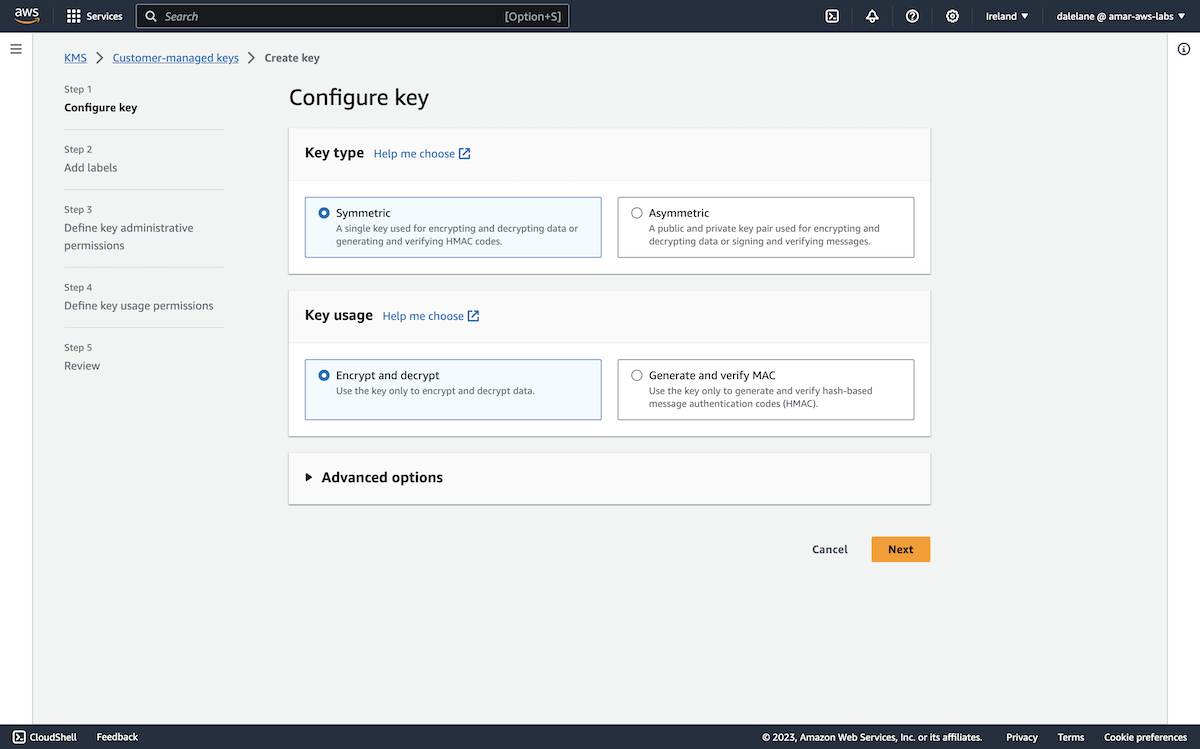

The default key type and usage were fine for our needs.

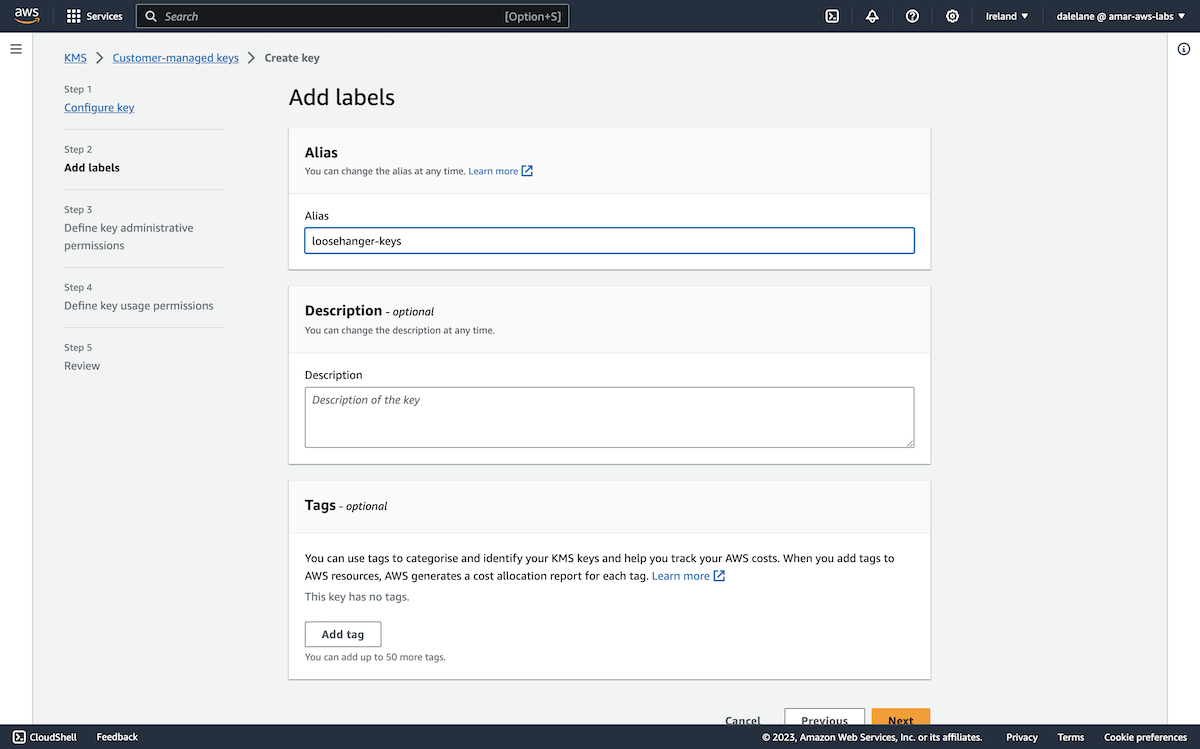

We gave it a name, again keeping with our "Loosehanger" clothing retailer scenario.

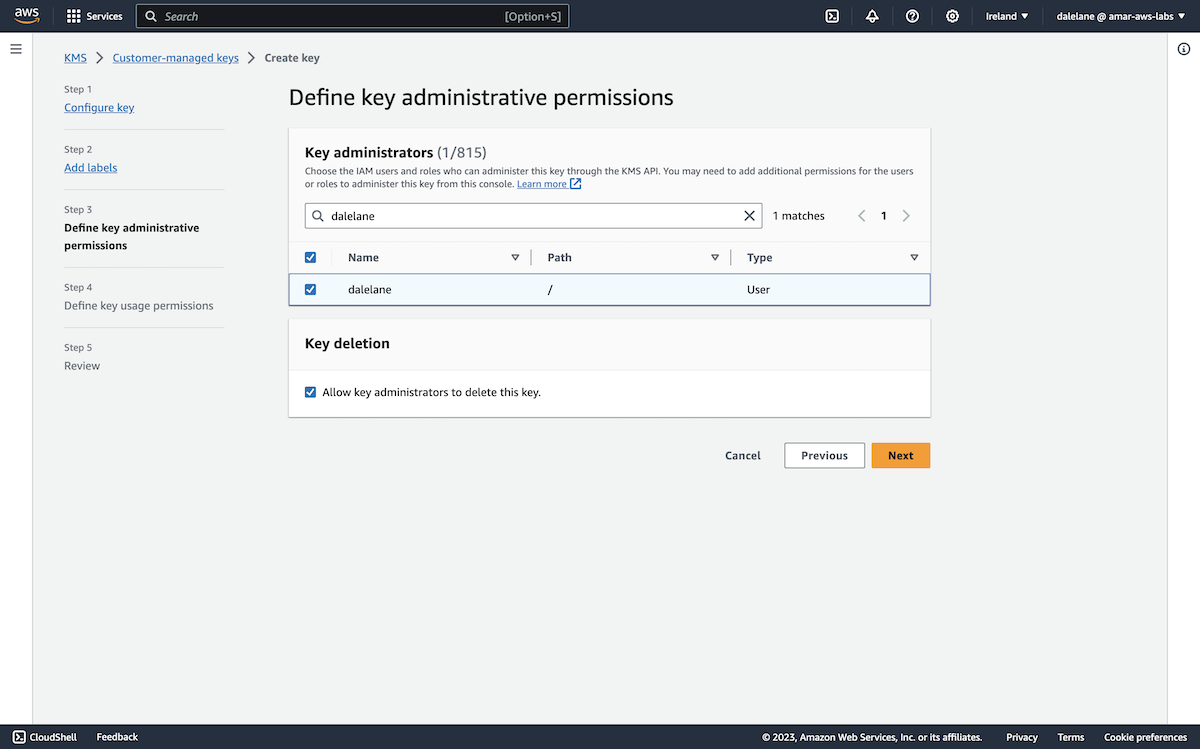

We set the permissions to make our user the administrator of the key.

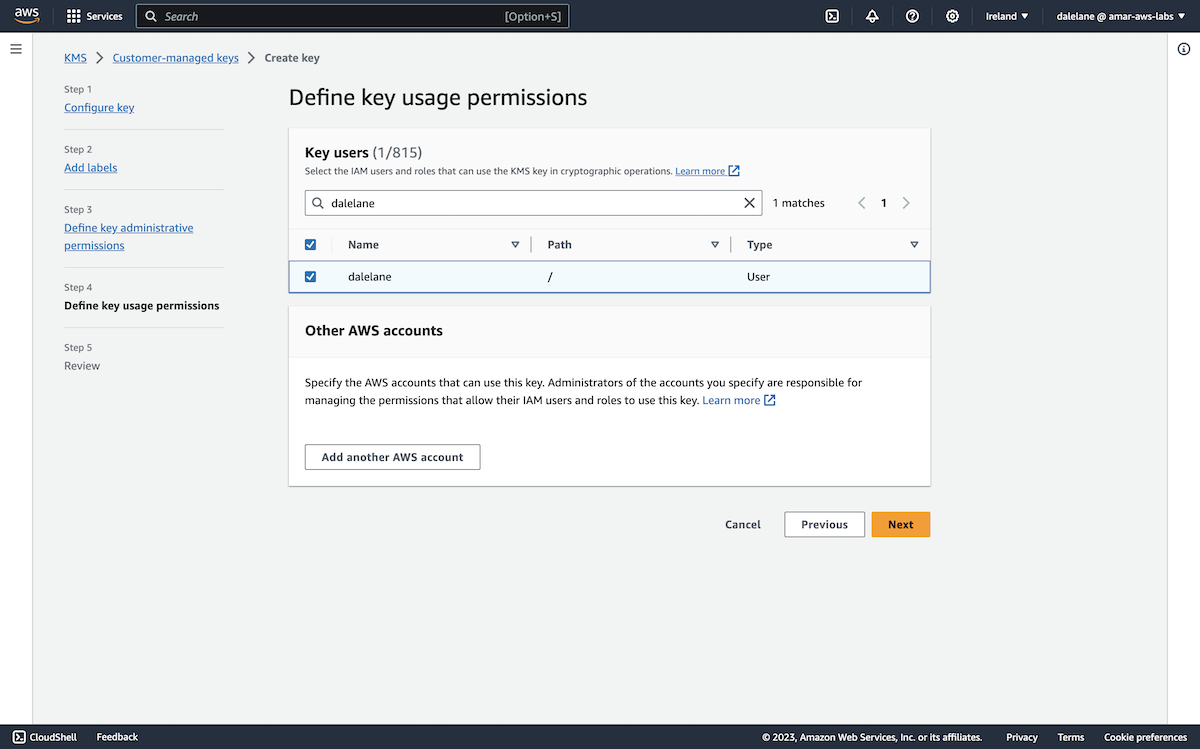

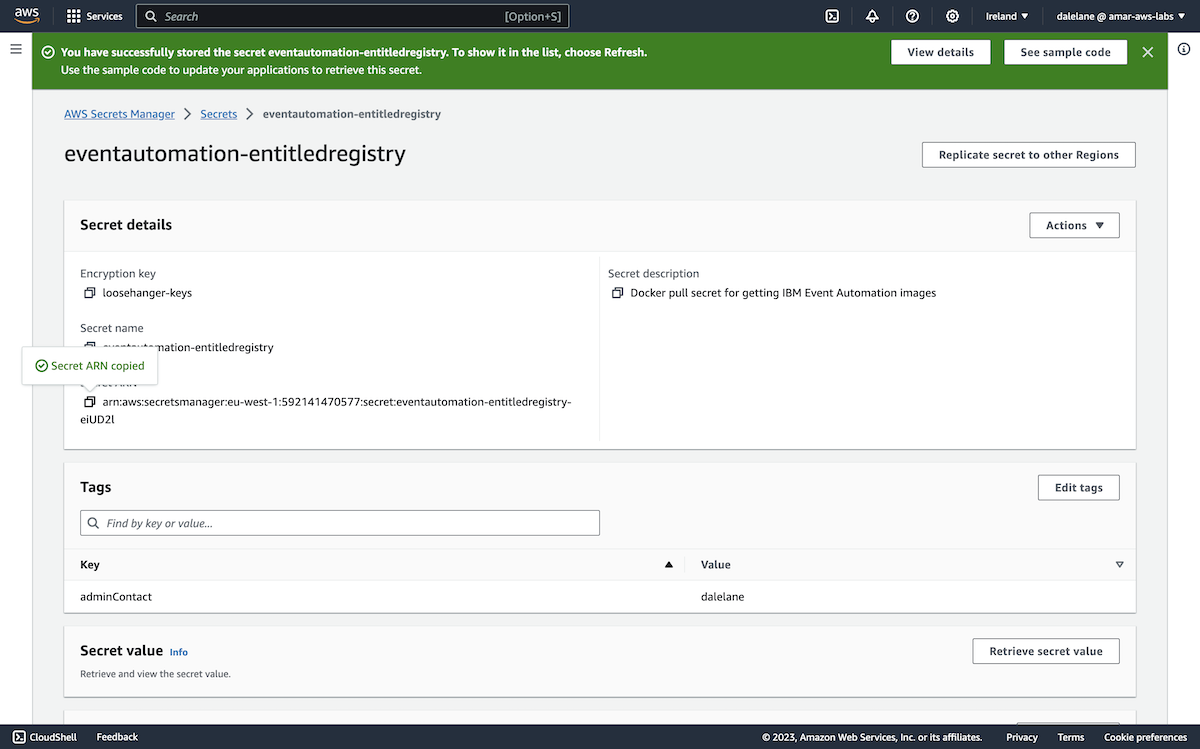

With the new key created, we could select it as the encryption key for the secret containing our new producer credentials.

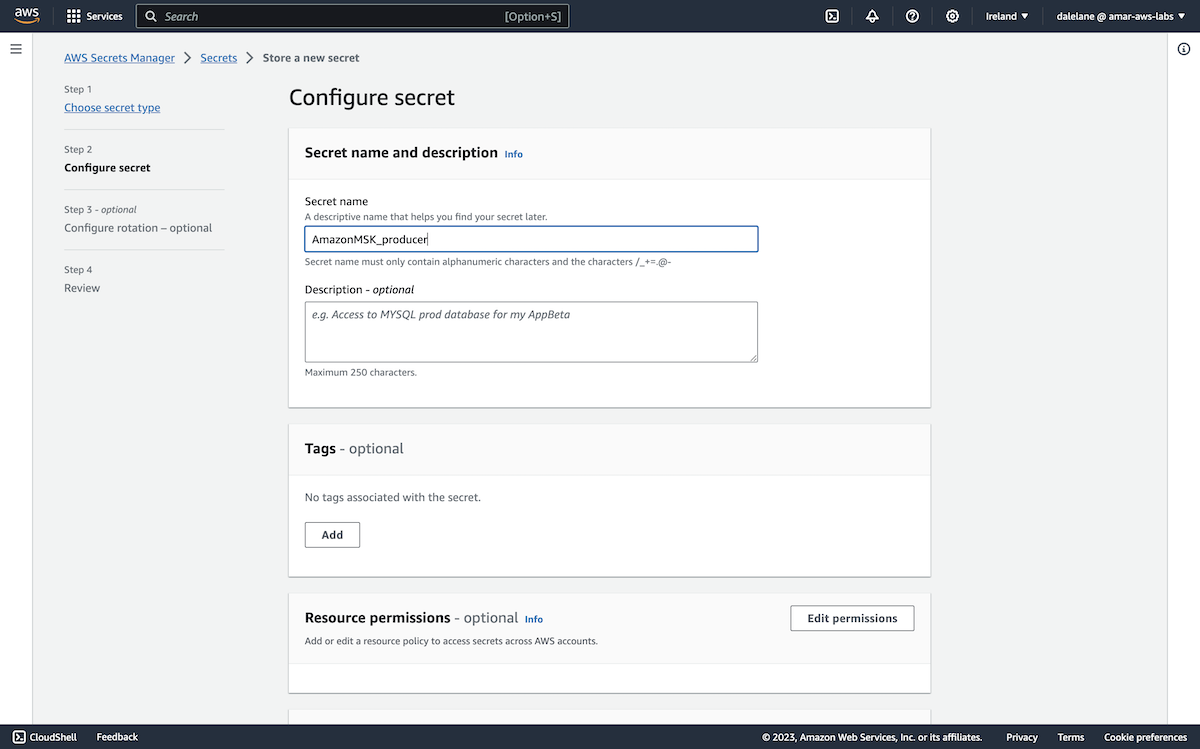

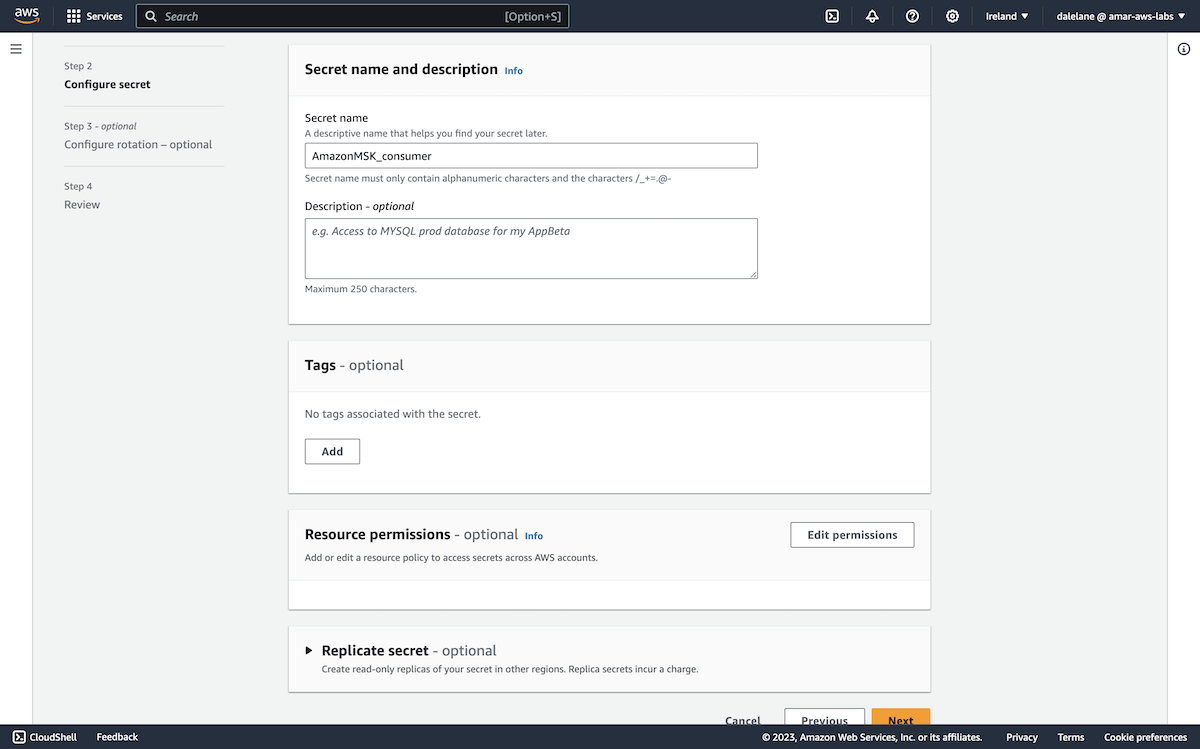

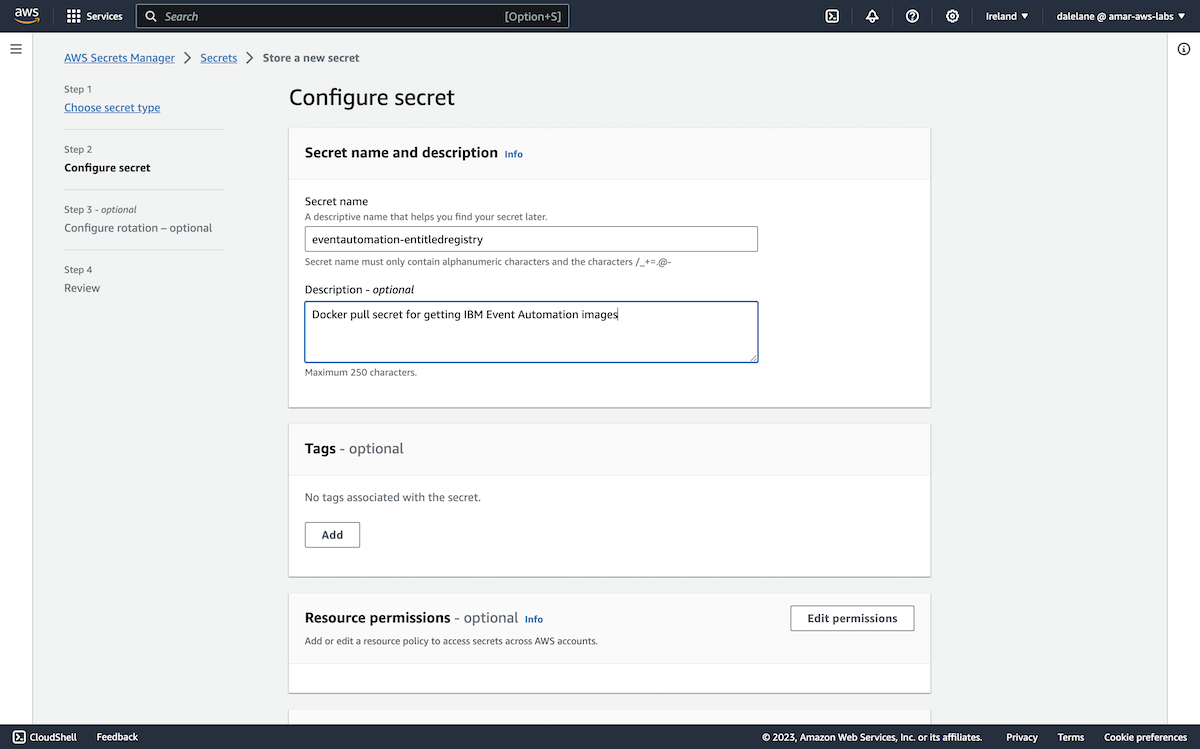

To use the secret for Amazon MSK credentials, we needed to give the secret a name starting with AmazonMSK_.

We went with AmazonMSK_producer.

We clicked through the remaining steps until we could click Store.

We needed to repeat this to create a secret for our consumer user.

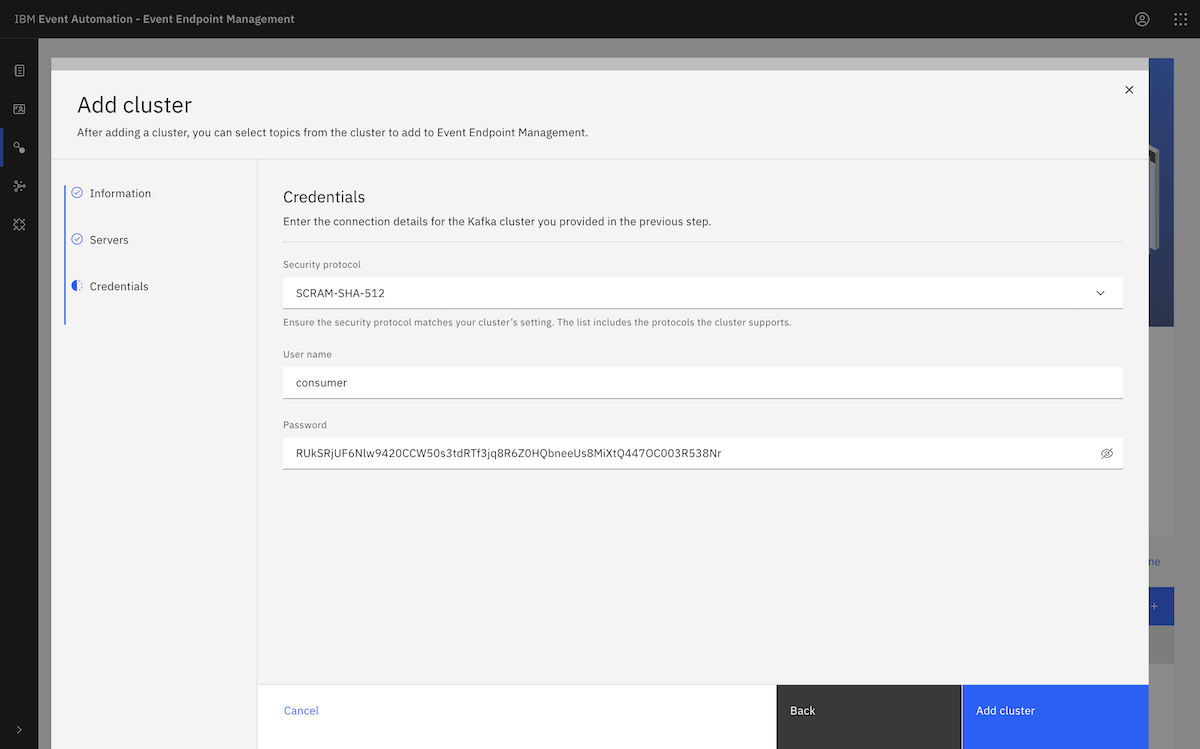

Again, we generated a long random password to use for clients that can only consume from our topics.

```json
{
  "username": "consumer",
  "password": "RUkSRjUF6Nlw9420CCW50s3tdRTf3jq8R6Z0HQbneeUs8MiXtQ447OC003R538Nr"
}
```

We used the same encryption key for the consumer secret.

We had to give it a name with the same AmazonMSK_ prefix, so we went with the name AmazonMSK_consumer.

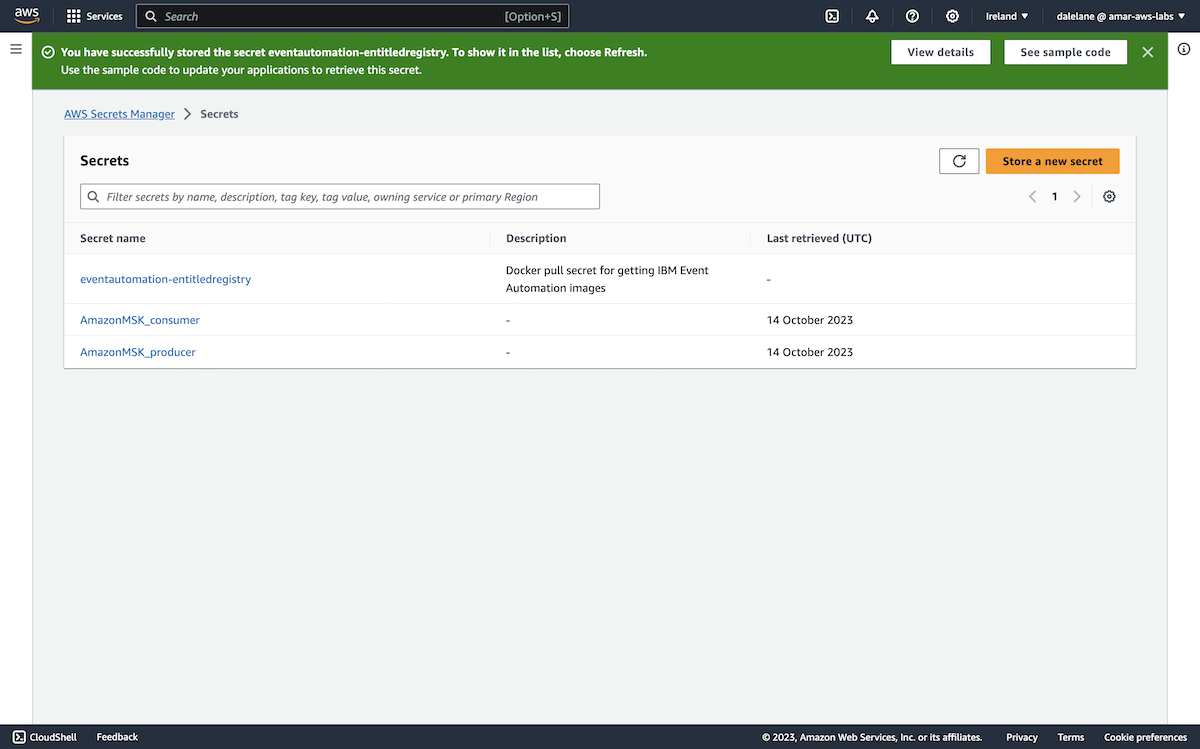

With these two secrets, our credentials were now ready.

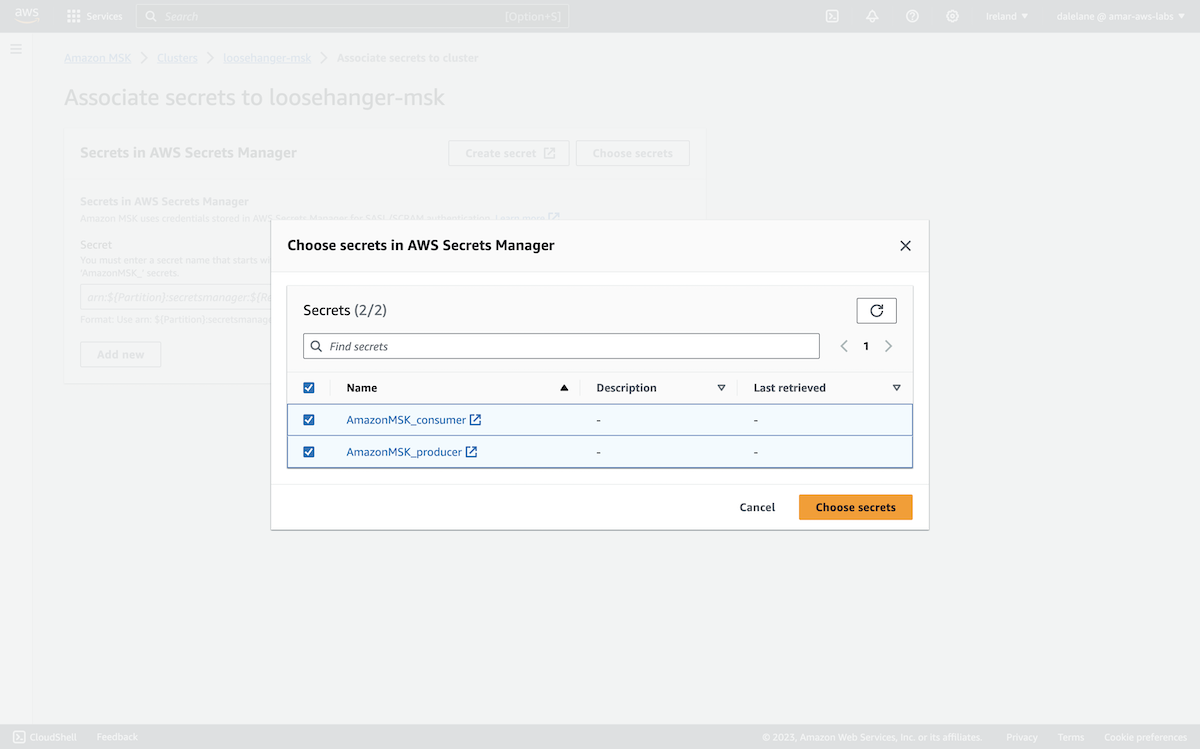

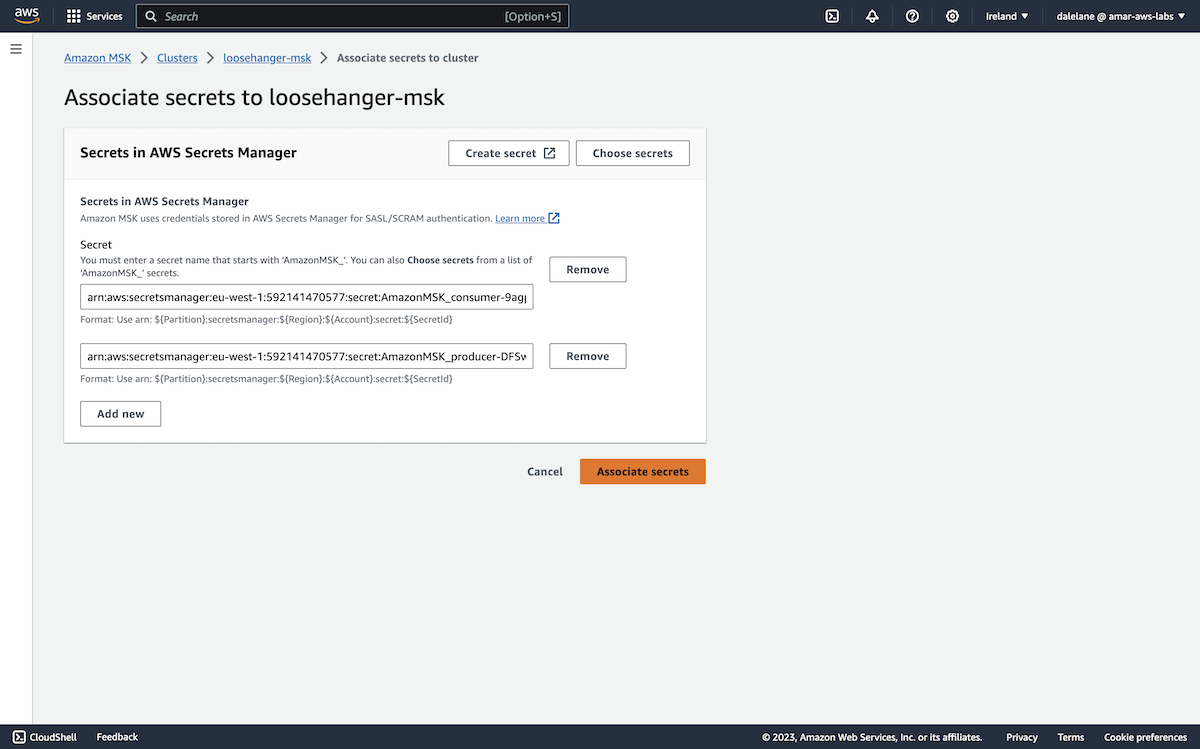

We associated both of them with our MSK cluster.

We clicked Associate secrets.
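The secret creation and association steps can also be scripted with the AWS CLI, using `aws secretsmanager create-secret` and `aws kafka batch-associate-scram-secret`. This is a sketch, assuming a configured AWS CLI; `KMS_KEY_ID` stands in for the customer managed key created above, and `create_msk_secret`, `associate_secrets`, `PRODUCER_PASSWORD`, `CONSUMER_PASSWORD` and `CLUSTER_ARN` are hypothetical names.

```shell
# Create a Secrets Manager secret holding SCRAM credentials for one user.
# MSK requires the AmazonMSK_ name prefix; KMS_KEY_ID is a placeholder for
# the customer managed key. Prints the new secret's ARN.
create_msk_secret() {
  local user=$1 password=$2
  aws secretsmanager create-secret \
    --name "AmazonMSK_$user" \
    --kms-key-id "$KMS_KEY_ID" \
    --secret-string "{\"username\": \"$user\", \"password\": \"$password\"}" \
    --query ARN --output text
}

# Associate one or more secret ARNs with an MSK cluster.
associate_secrets() {
  local cluster_arn=$1
  shift
  aws kafka batch-associate-scram-secret \
    --cluster-arn "$cluster_arn" --secret-arn-list "$@"
}

# Usage:
# PRODUCER_ARN=$(create_msk_secret producer "$PRODUCER_PASSWORD")
# CONSUMER_ARN=$(create_msk_secret consumer "$CONSUMER_PASSWORD")
# associate_secrets "$CLUSTER_ARN" "$PRODUCER_ARN" "$CONSUMER_ARN"
```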

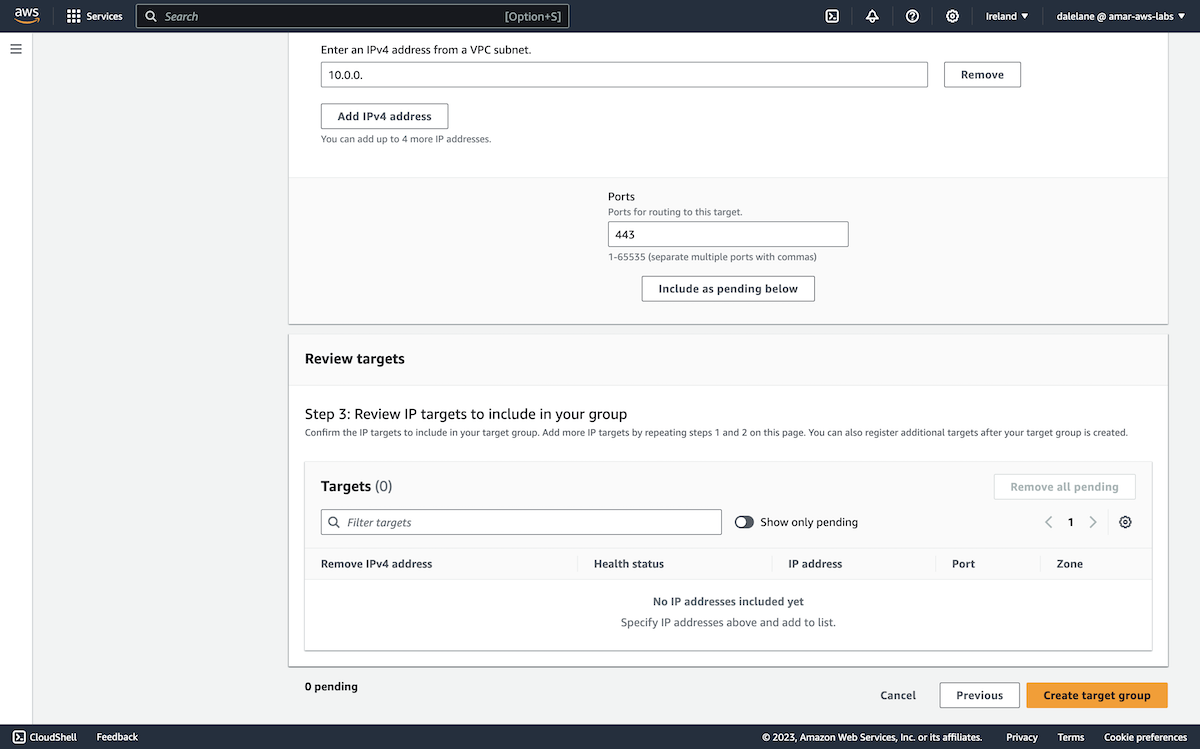

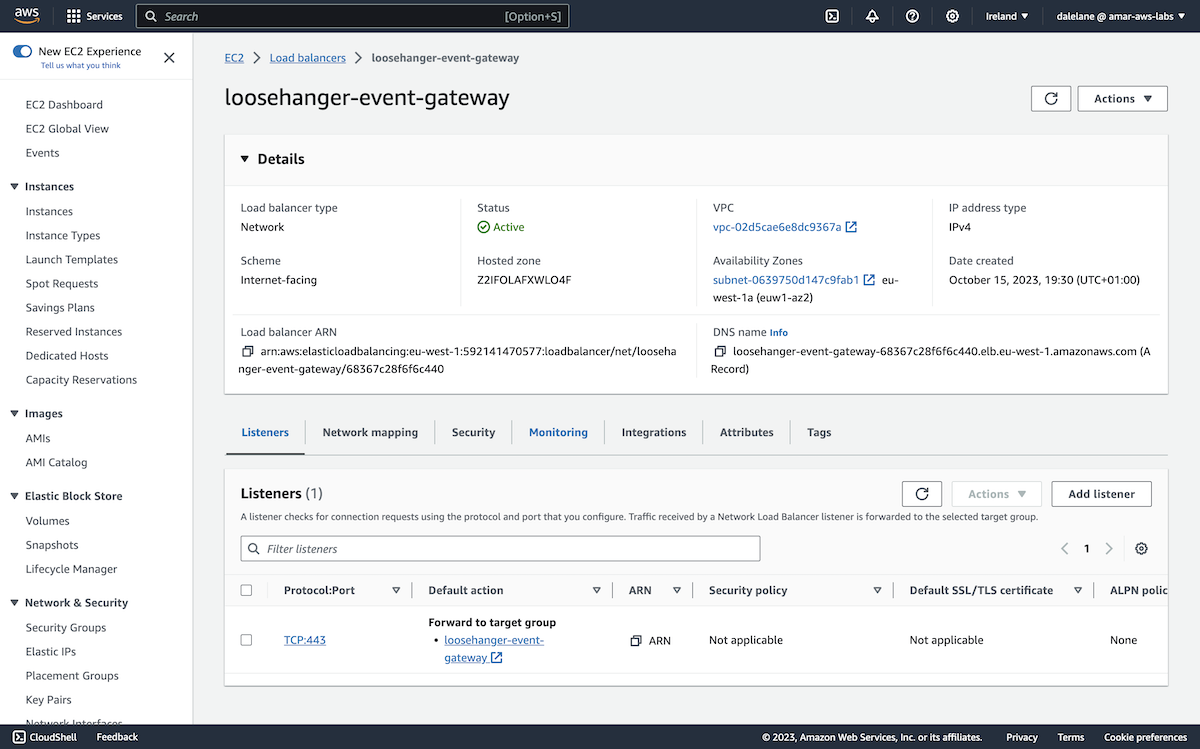

With authentication prepared, we were ready to modify the MSK configuration to allow public access to the Kafka cluster.

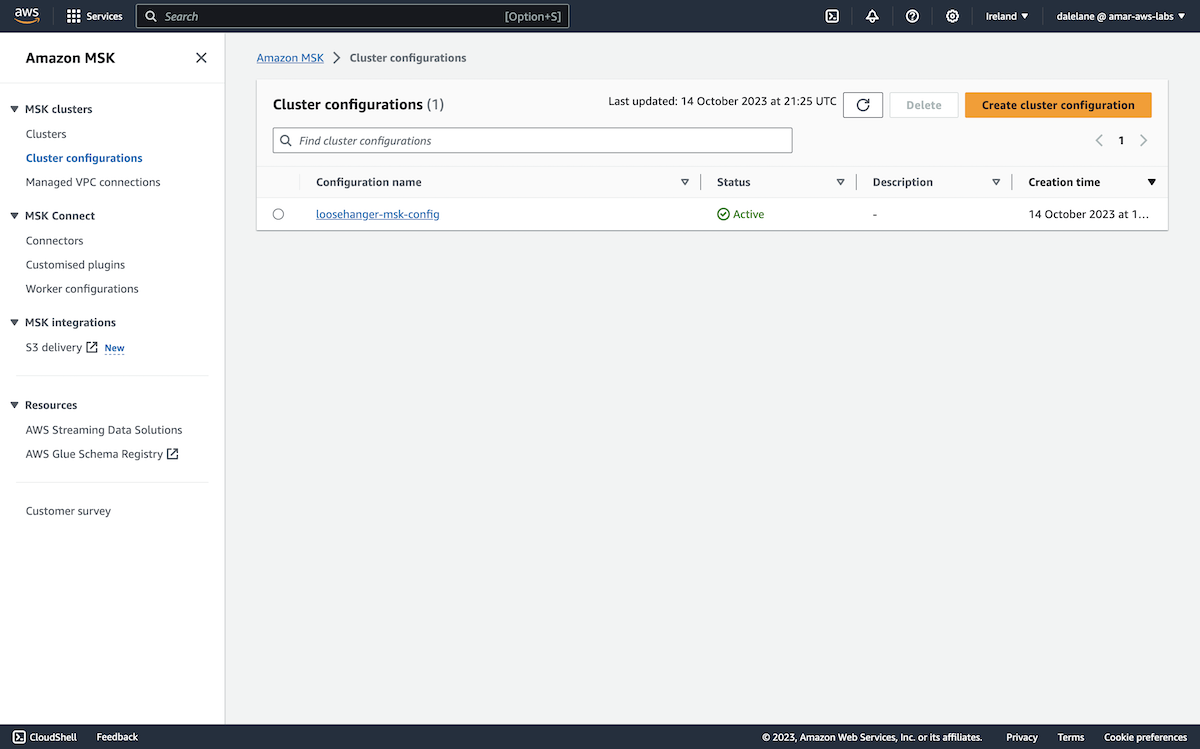

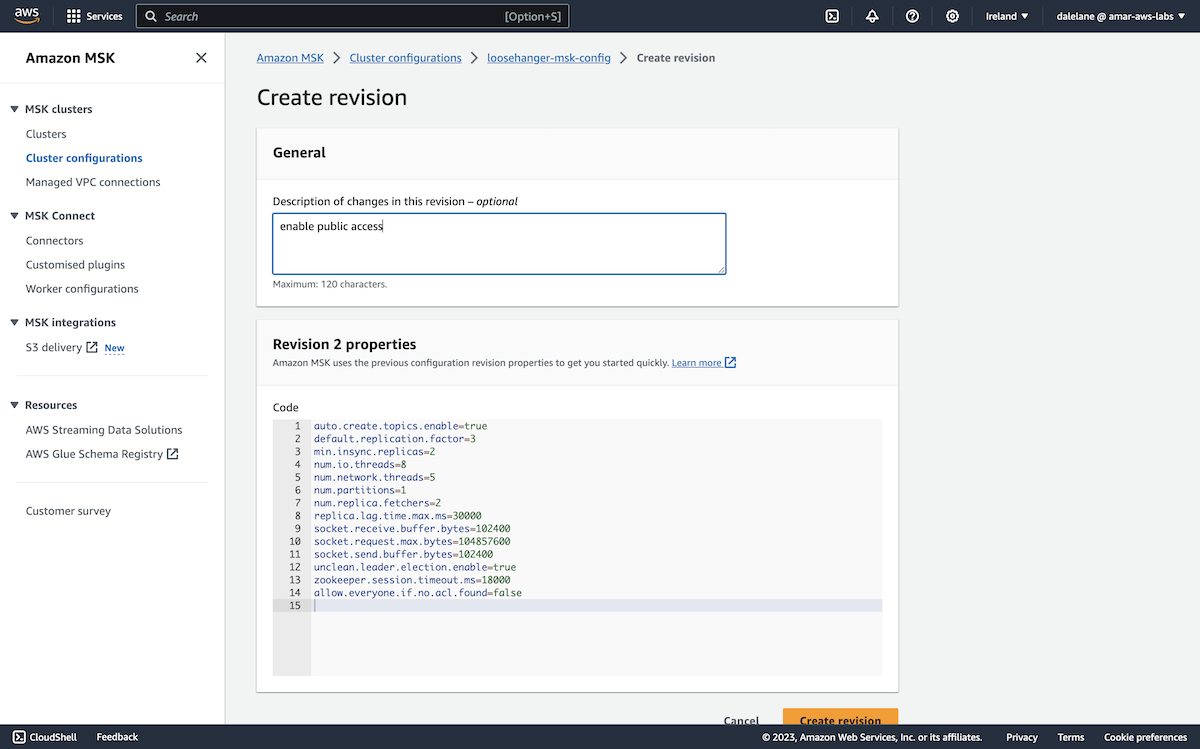

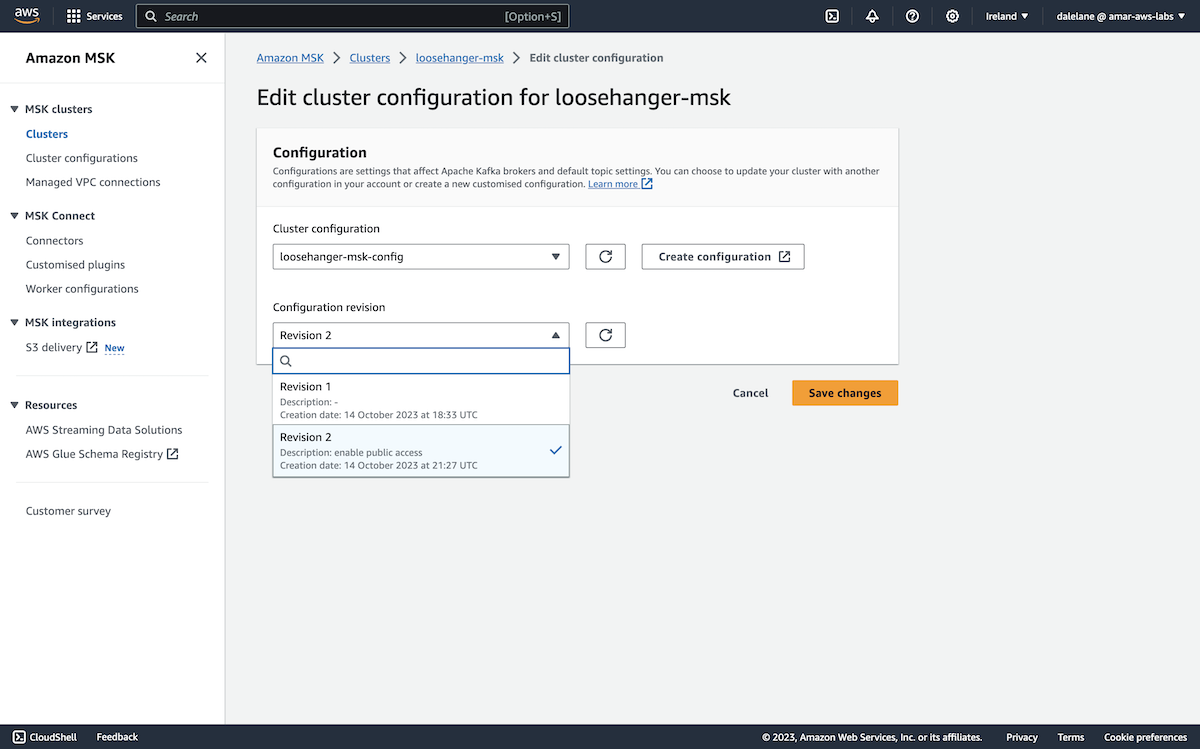

First, we needed to modify the cluster configuration to set a property that Amazon requires for public access. We went to the Amazon MSK Cluster Configurations, clicked on our config name, then clicked Create revision.

We modified the following value:

```properties
allow.everyone.if.no.acl.found=false
```

And then clicked Create revision.

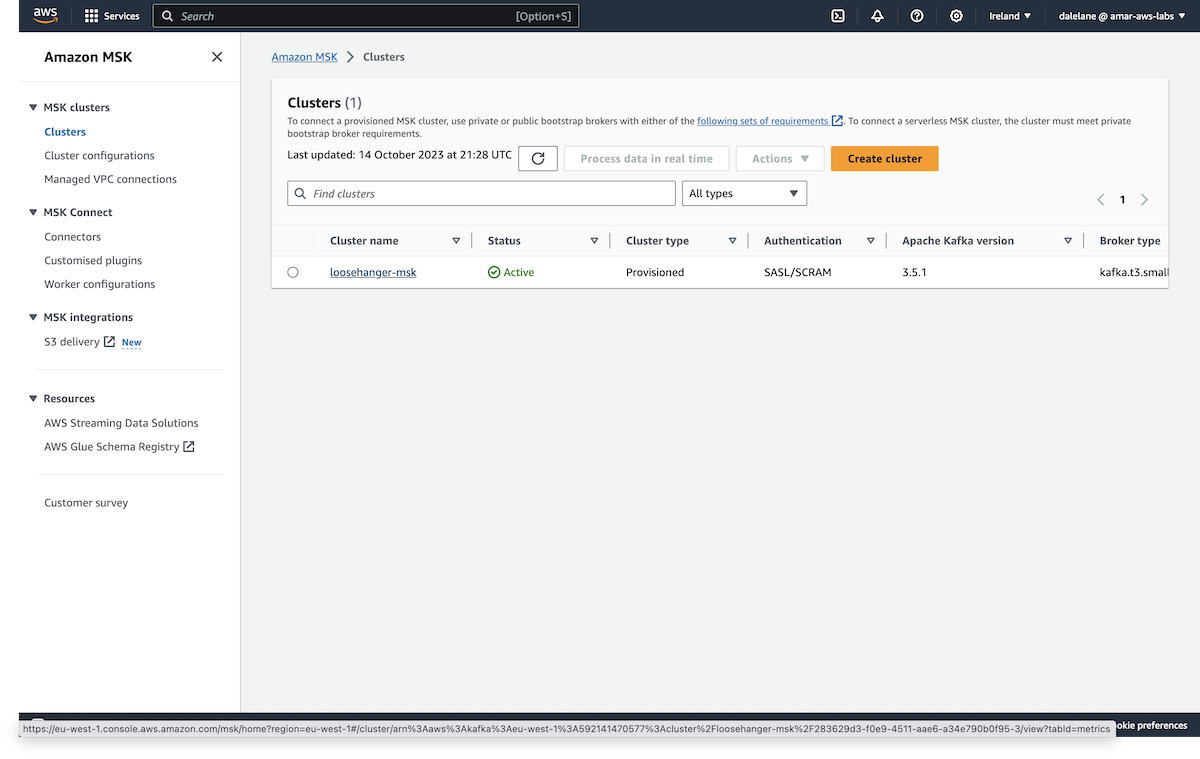

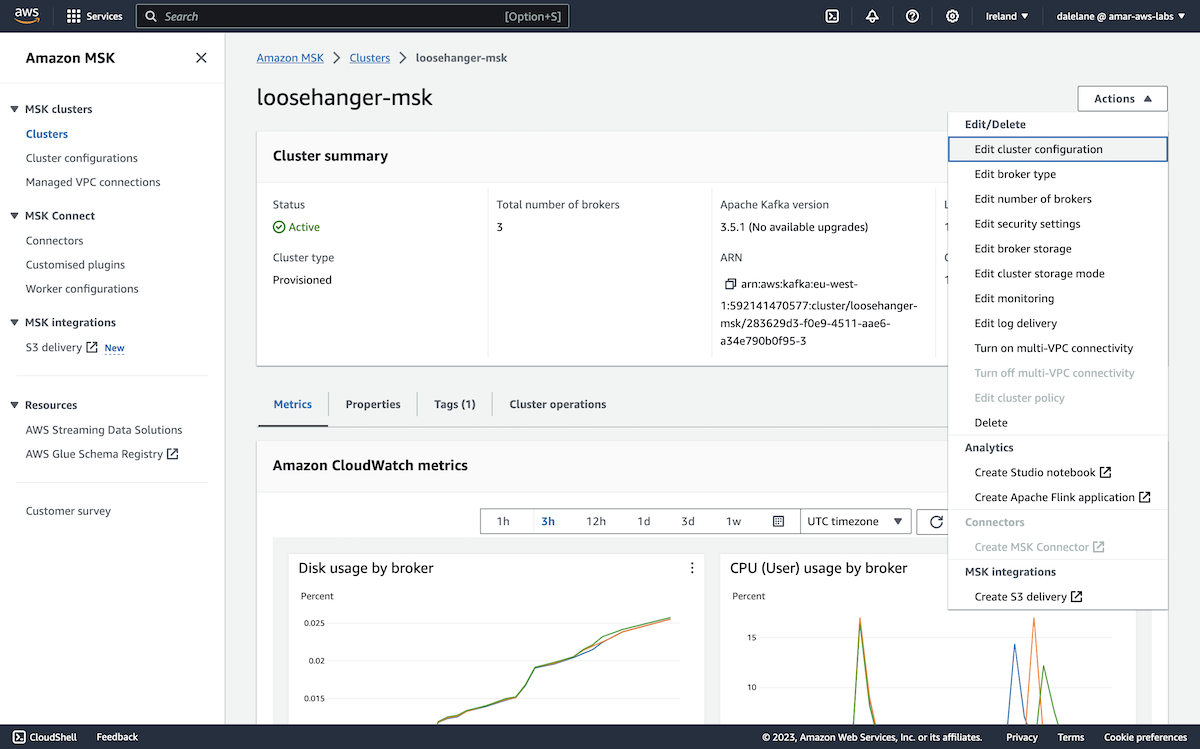

To use this modified configuration, we went back to the MSK cluster, and clicked on it.

We selected Edit cluster configuration.

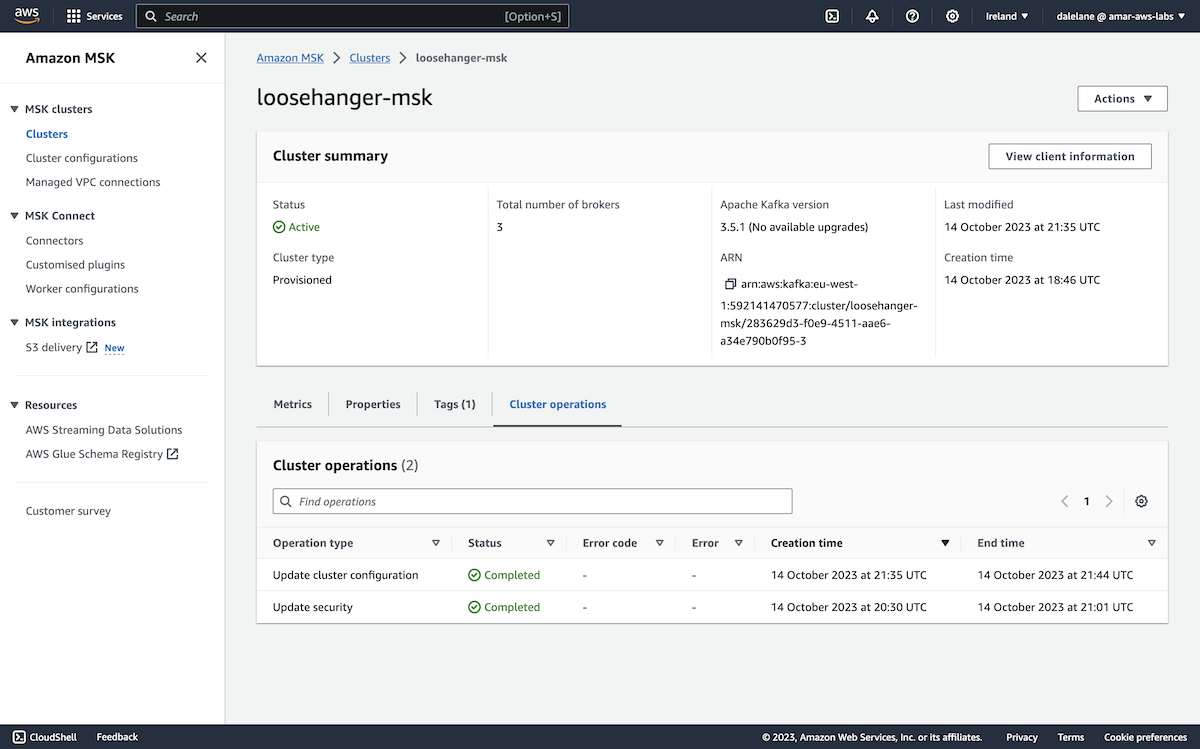

We could then choose the new config revision, and click Save changes.

This can take ten minutes or so to complete.

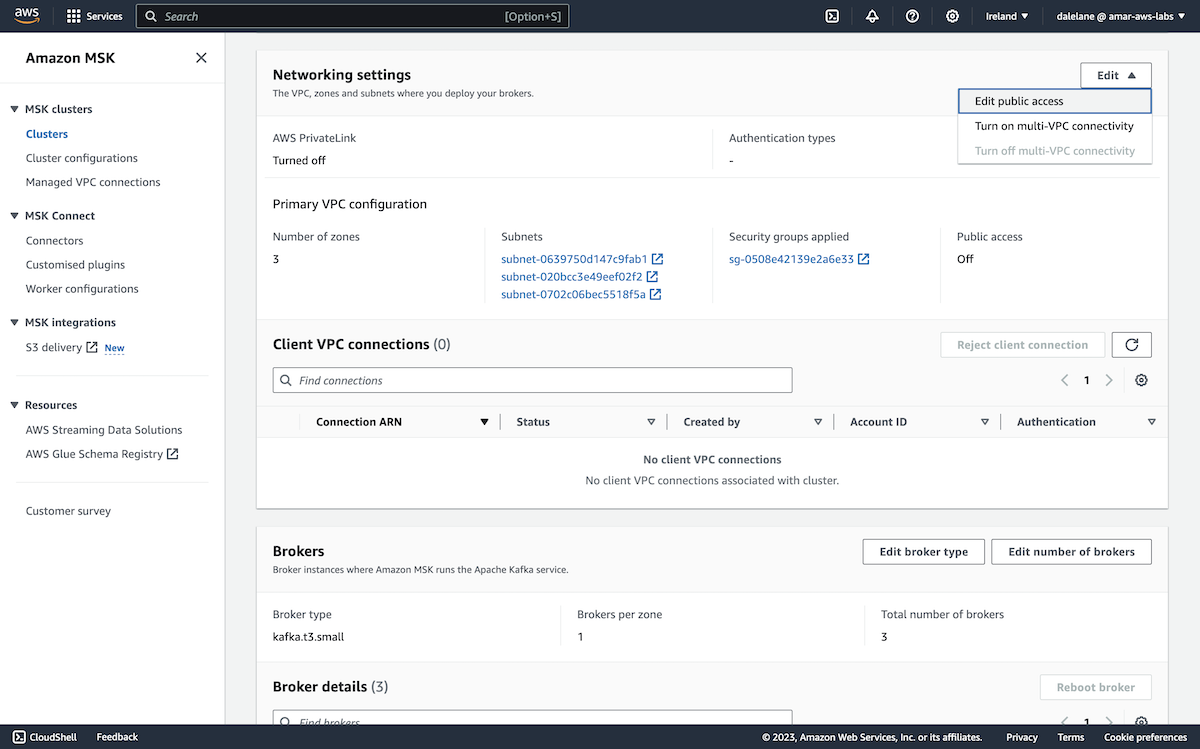

Once this was complete, we clicked the Properties tab.

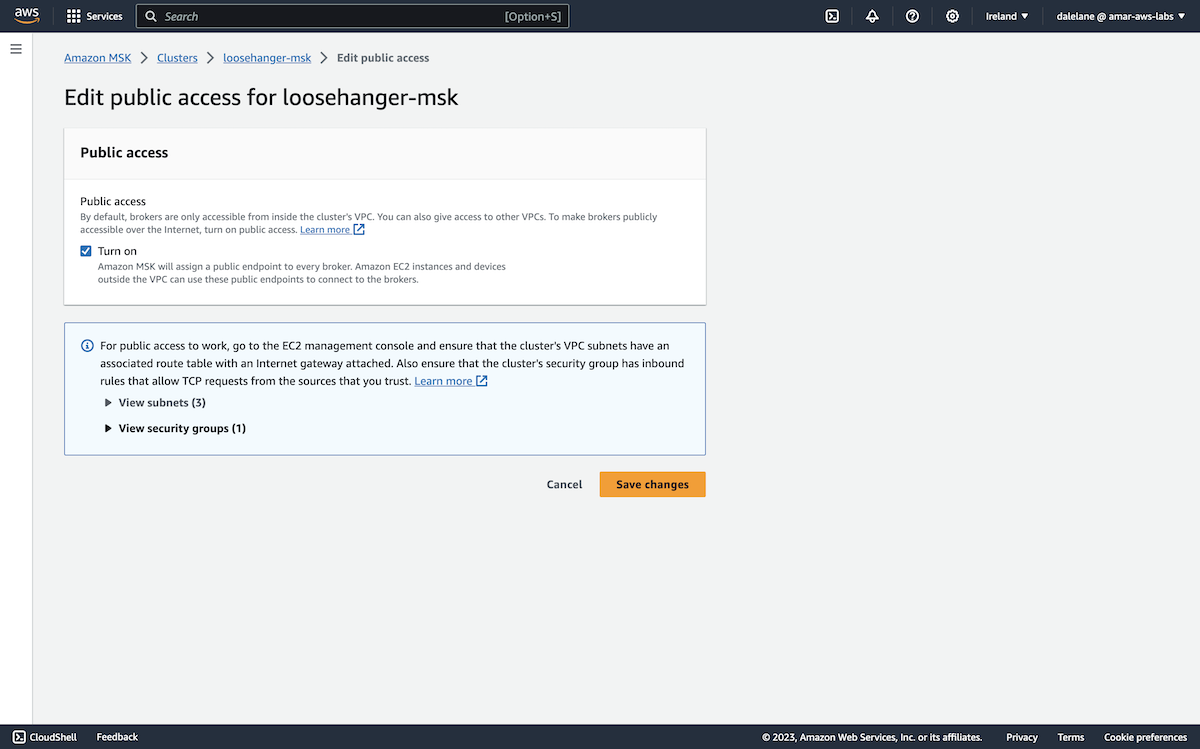

We scrolled to the Networking settings section, and selected the Edit public access option.

We selected Turn on, and clicked Save changes.
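Public access can also be turned on from the AWS CLI with `aws kafka update-connectivity`, which needs the cluster's current version string. This is a minimal sketch, assuming a configured AWS CLI and a cluster that already meets the prerequisites covered above (authentication, TLS and the revised configuration); `enable_public_access` and `CLUSTER_ARN` are hypothetical names.

```shell
# Turn on public access for an MSK cluster. update-connectivity needs the
# cluster's current version, so look that up first.
enable_public_access() {
  local arn=$1 version
  version=$(aws kafka describe-cluster --cluster-arn "$arn" \
    --query 'ClusterInfo.CurrentVersion' --output text) || return 1
  aws kafka update-connectivity \
    --cluster-arn "$arn" \
    --current-version "$version" \
    --connectivity-info '{"PublicAccess": {"Type": "SERVICE_PROVIDED_EIPS"}}'
}

# Usage:
# enable_public_access "$CLUSTER_ARN"
```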

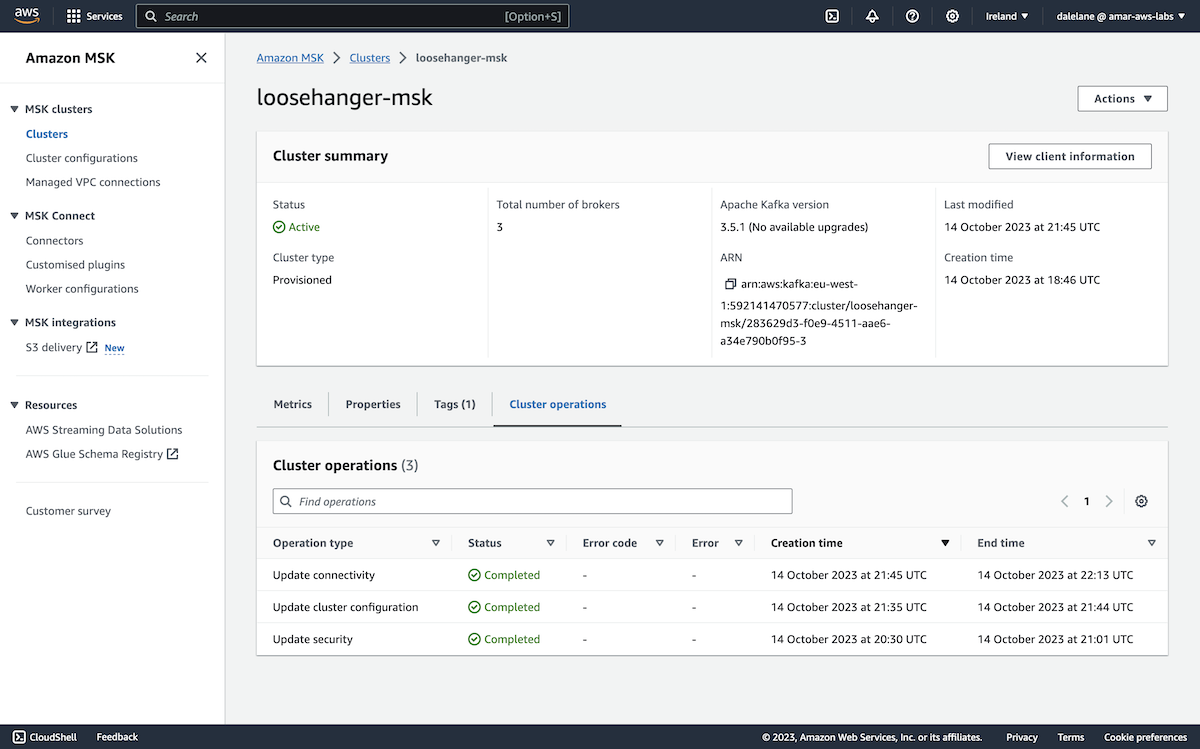

There was a bit of a wait for this change to be applied.

After thirty minutes, the configuration change was complete.

We now had an Amazon MSK cluster, with the topics we wanted to use, configured to allow public access, and with two SASL/SCRAM username and password pairs prepared for our applications to use.

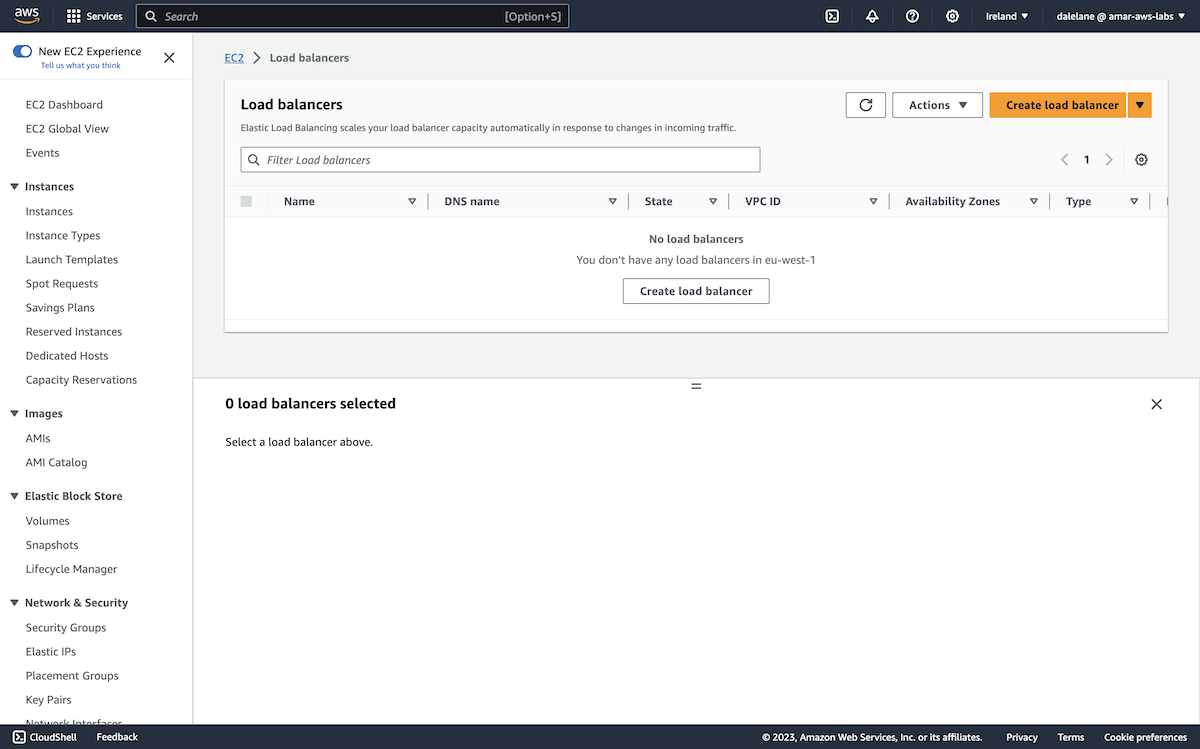

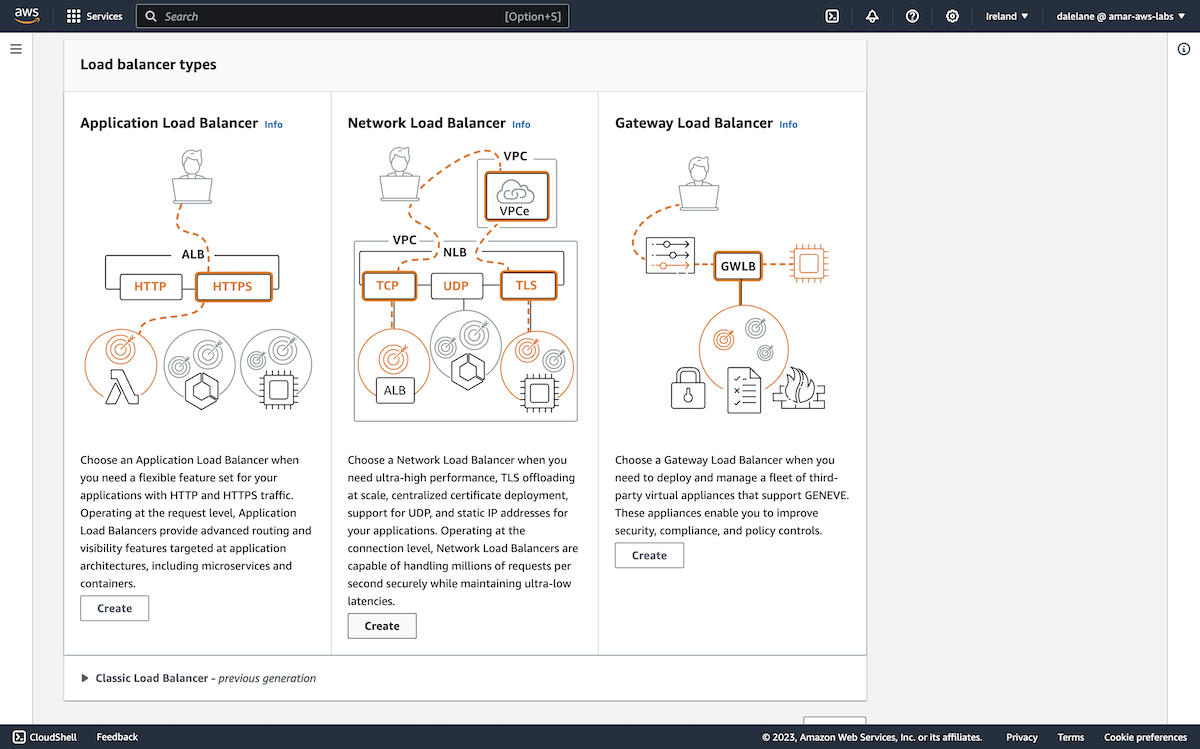

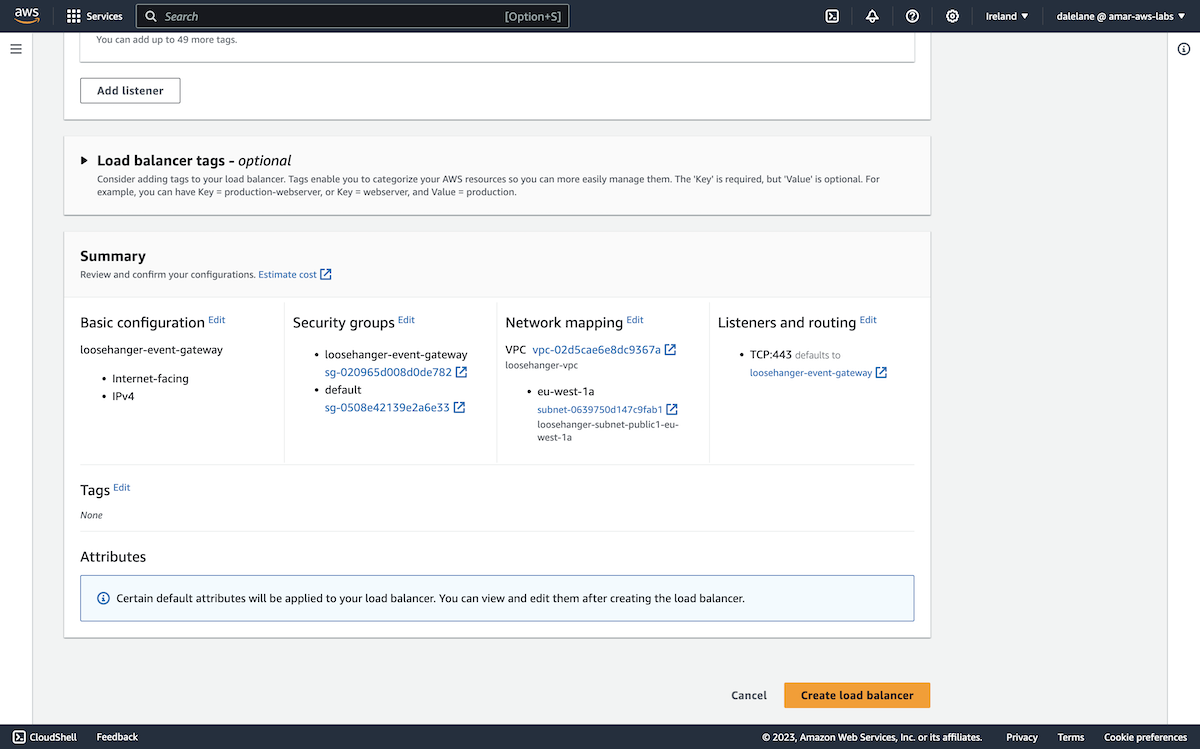

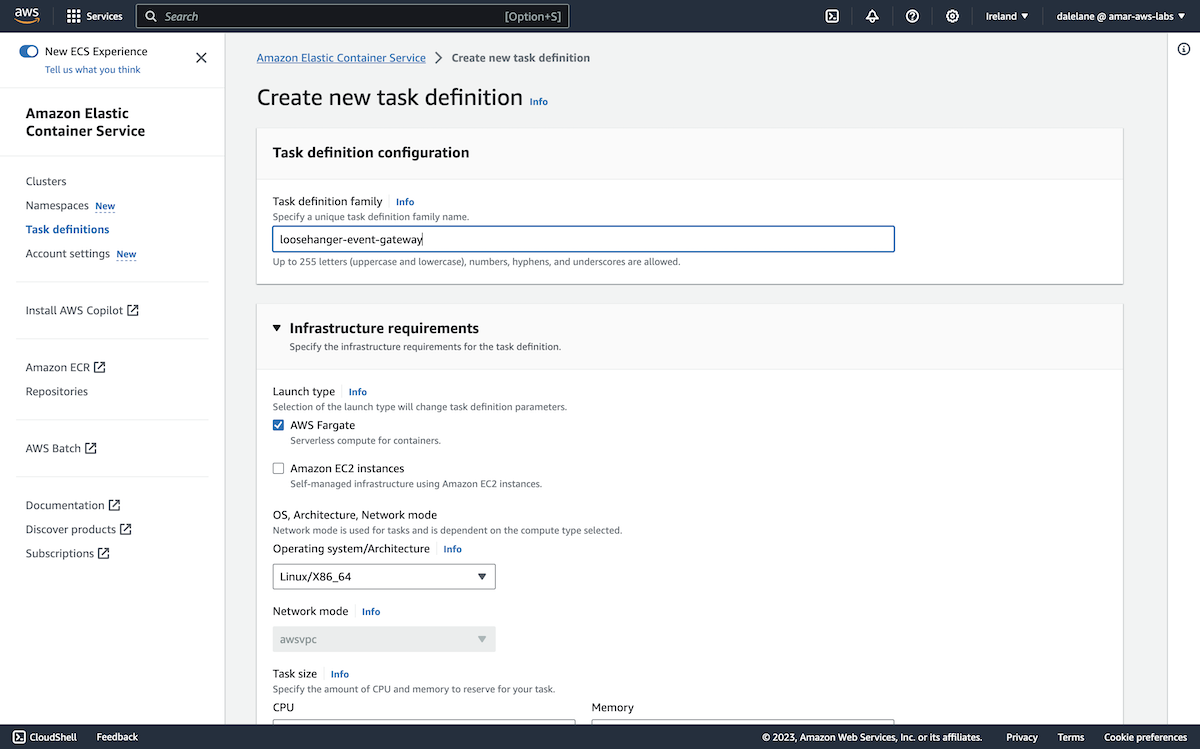

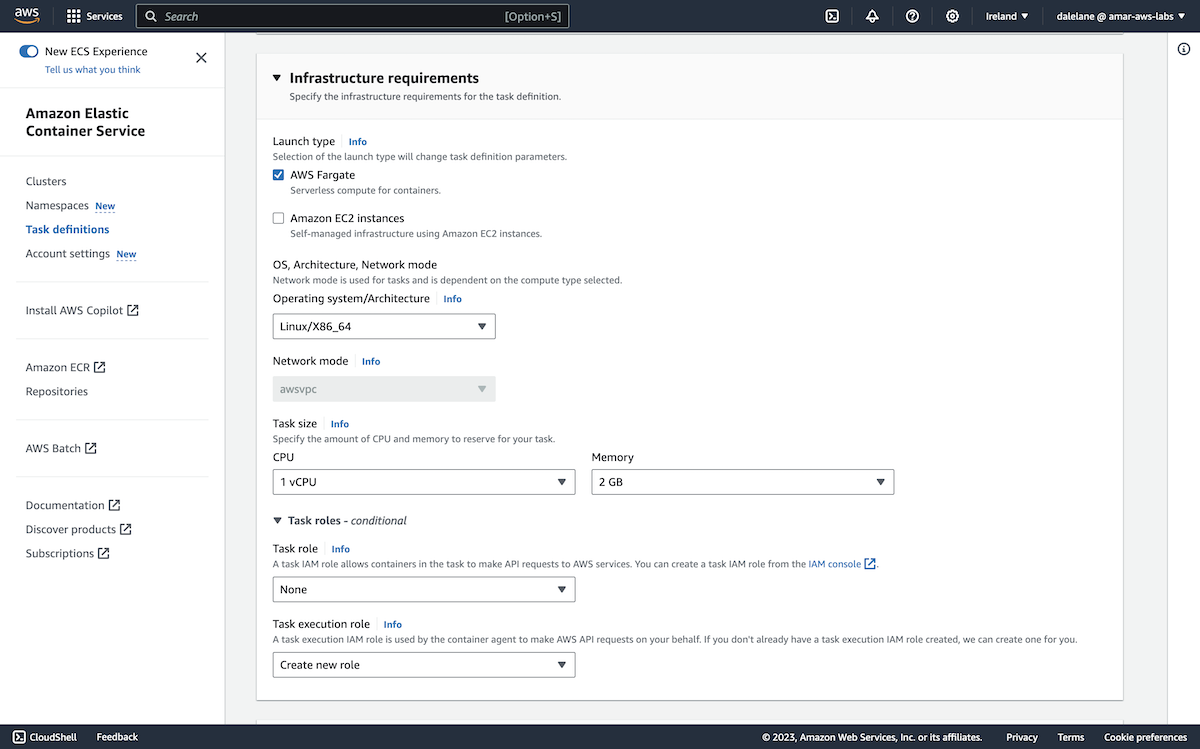

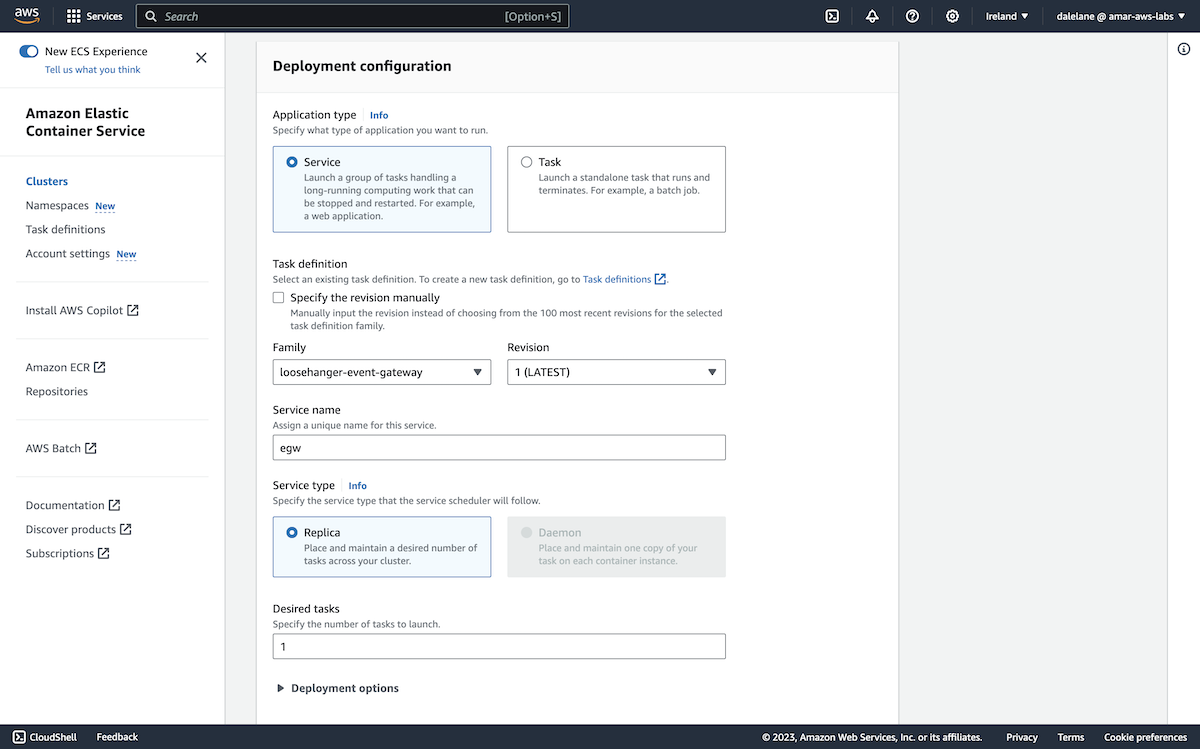

We started an app producing events to the Amazon MSK topics we'd created.

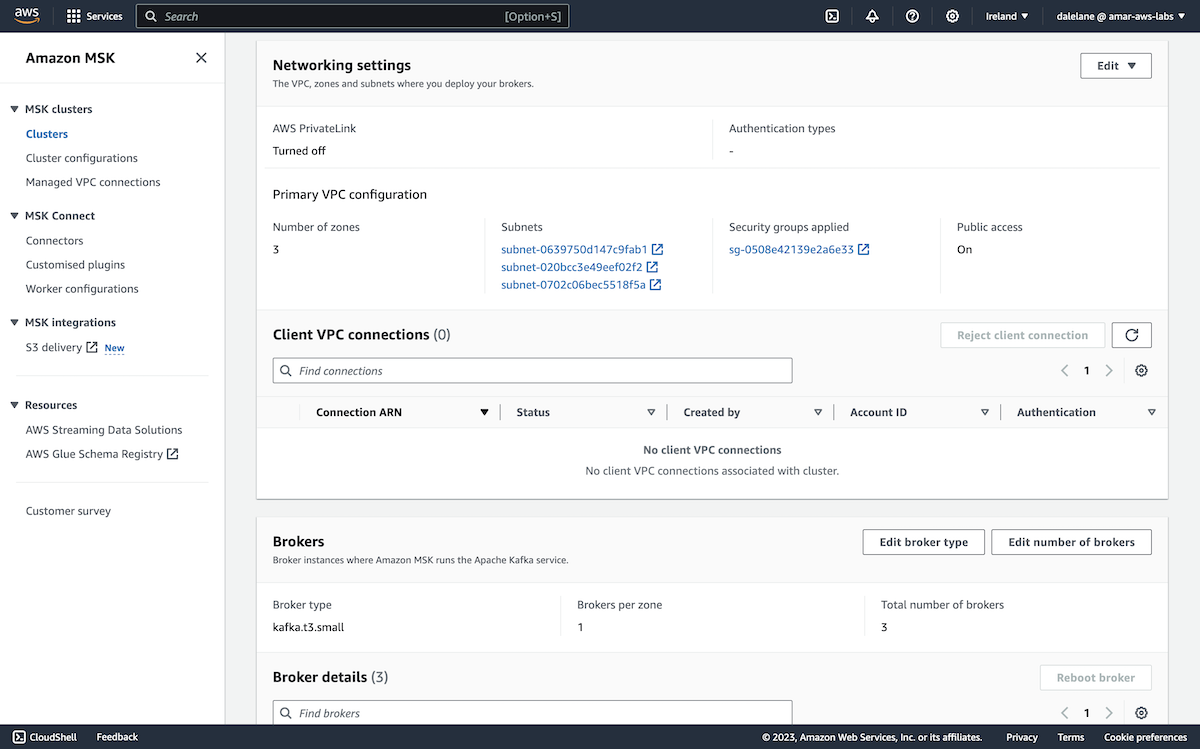

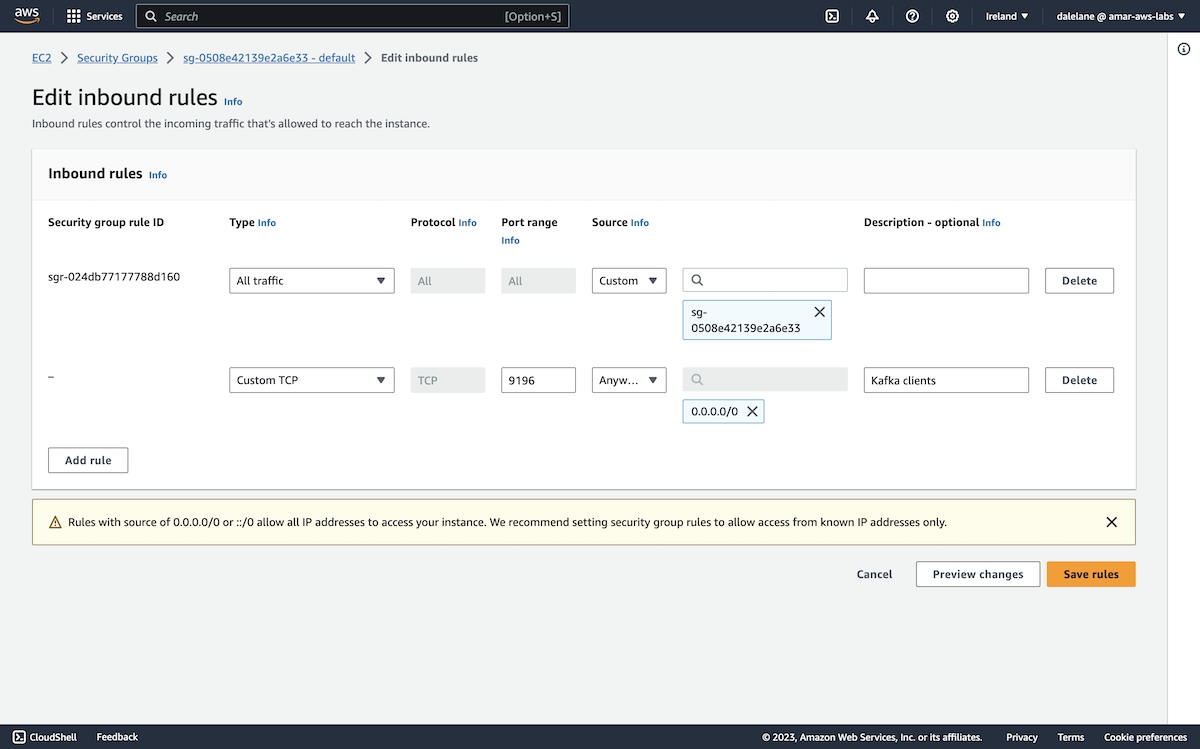

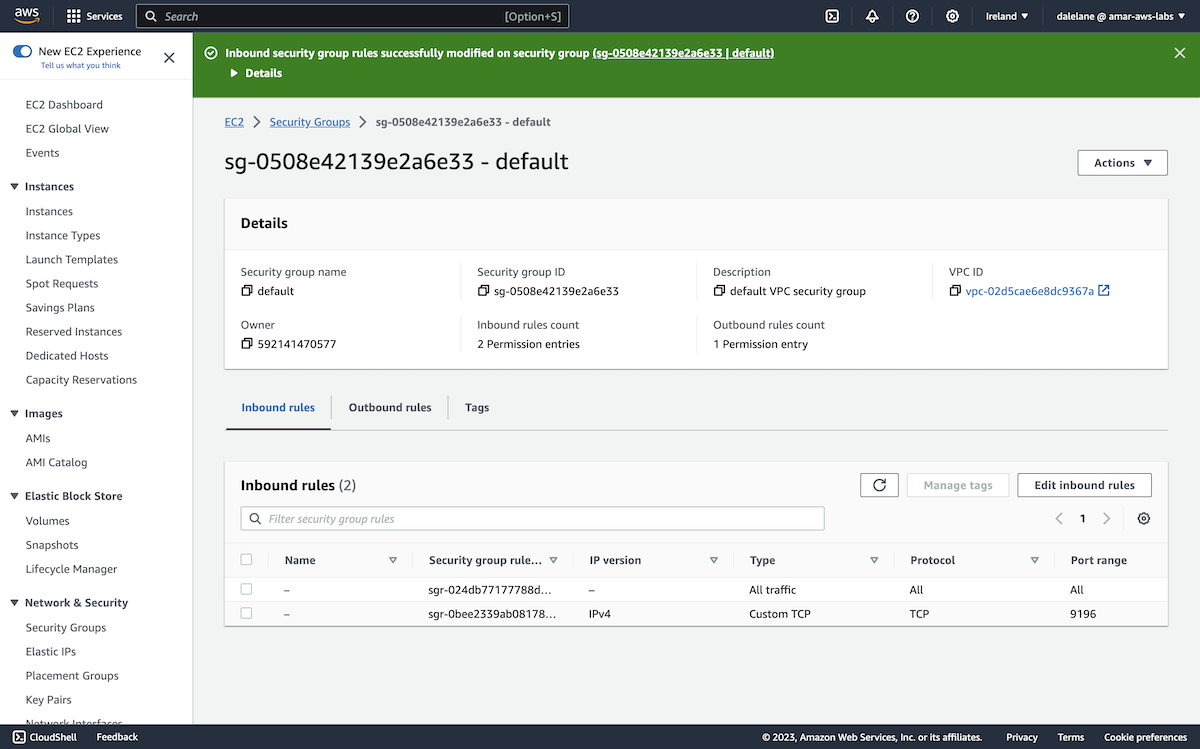

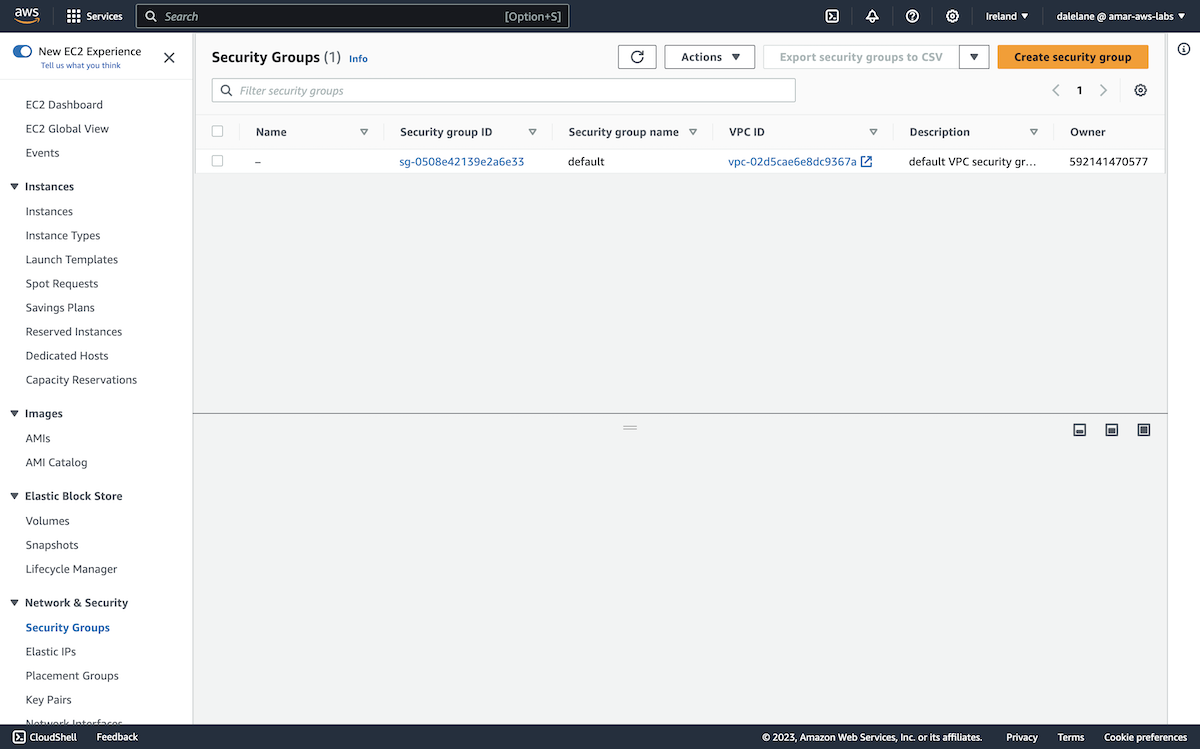

First, we needed to modify our MSK cluster to allow connections from an app we would run on our laptop.

We went back to our MSK cluster instance, clicked the Properties tab, and scrolled to the Networking settings section.

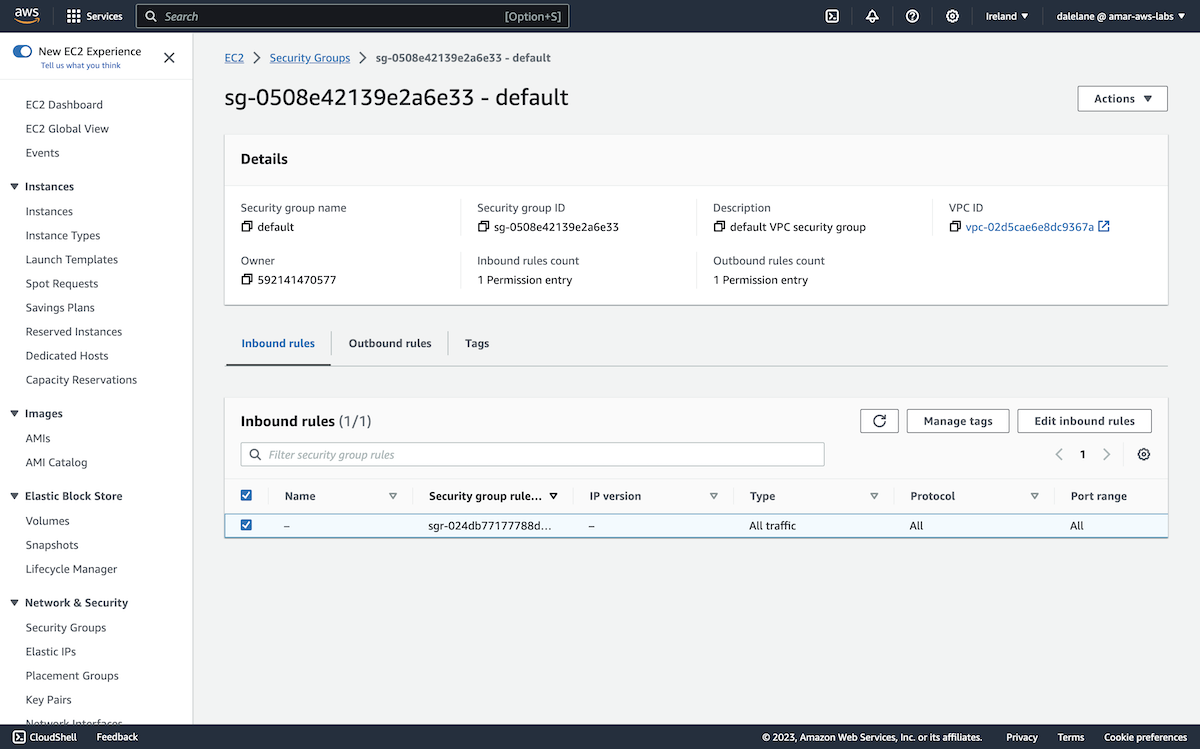

We then clicked on the Security groups applied value.

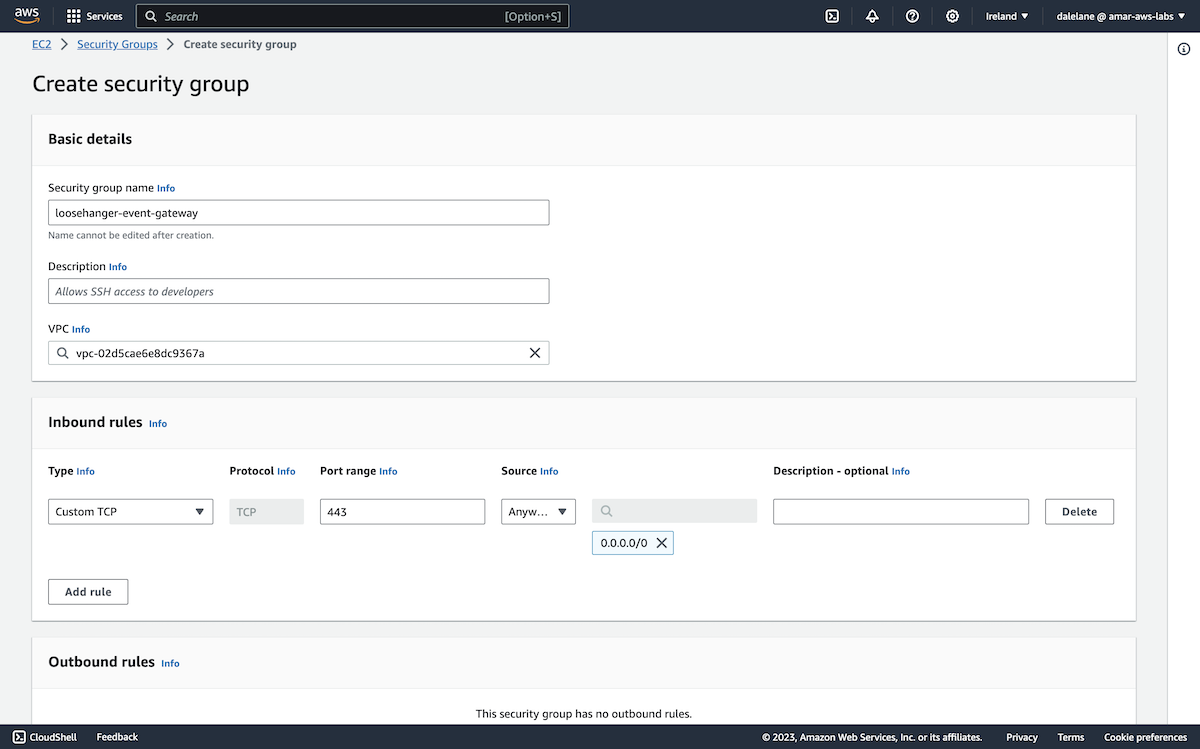

On the security groups instance page, we clicked Edit inbound rules.

We needed to add a new inbound rule allowing access to port 9196, which is the port MSK uses for public SASL/SCRAM connections.

We could have restricted the rule to the specific source IP address where we would run our app, but for this quick demo it was simpler to allow access from anywhere.
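The inbound rule can also be added with the AWS CLI, and restricting it to a single address is not much more work. This is a sketch, assuming a configured AWS CLI; the security group ID is a placeholder, `allow_kafka_ingress` is a hypothetical name, and looking up the caller's public IP with https://checkip.amazonaws.com is our choice rather than a step from the original demo.

```shell
# Add an inbound rule for MSK's public SASL/SCRAM port (9196) to a security group.
# If no CIDR is given, allow only this machine's current public IP address.
allow_kafka_ingress() {
  local sg_id=$1 cidr
  if [ -n "${2:-}" ]; then
    cidr=$2
  else
    cidr="$(curl -fsS https://checkip.amazonaws.com)/32" || return 1
  fi
  aws ec2 authorize-security-group-ingress \
    --group-id "$sg_id" --protocol tcp --port 9196 --cidr "$cidr"
}

# Usage (the group ID is a placeholder; use the one applied to the cluster):
# allow_kafka_ingress sg-0123456789abcdef0
# allow_kafka_ingress sg-0123456789abcdef0 0.0.0.0/0   # anywhere, as in the demo
```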

Our MSK cluster was now ready to allow connections.

To produce messages to our topics, we used a Kafka Connect connector. You can find the source connector we used at github.com/IBM/kafka-connect-loosehangerjeans-source. It is a data generator, which we often use for giving demos, that periodically produces randomly generated messages.

To run Kafka Connect, we created a properties file called connect.properties.

We populated this with the following config. Note that plugin.path is the folder we downloaded the source connector jar to; you can find the jar on the Releases page for the data gen source connector.

```properties
bootstrap.servers=b-1-public.loosehangermsk.krrnez.c3.kafka.eu-west-1.amazonaws.com:9196
security.protocol=SASL_SSL
producer.security.protocol=SASL_SSL
sasl.mechanism=SCRAM-SHA-512
producer.sasl.mechanism=SCRAM-SHA-512
sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="producer" password="BE9rEMxwfC0eD7IQcVzC4s9csceBsKi3Enzi2wiY9B8uw73KsoNyR33vfFBKFozv";
producer.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="producer" password="BE9rEMxwfC0eD7IQcVzC4s9csceBsKi3Enzi2wiY9B8uw73KsoNyR33vfFBKFozv";
client.id=loosehanger
group.id=connect-group
key.converter=org.apache.kafka.connect.storage.StringConverter
value.converter=org.apache.kafka.connect.json.JsonConverter
key.converter.schemas.enable=false
value.converter.schemas.enable=false
offset.storage.file.filename=/tmp/connect/offsets
plugin.path=/Users/dalelane/dev/demos/aws/connect/jars
```
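The config above hardcodes the producer password. One way to keep it out of a file you edit by hand is to generate connect.properties from environment variables. This is a sketch; `write_connect_properties`, `MSK_BOOTSTRAP`, `PRODUCER_PASSWORD` and `PLUGIN_PATH` are hypothetical names, and the other property values match the config above.

```shell
# Write a Kafka Connect standalone worker config that authenticates as the
# producer user. MSK_BOOTSTRAP, PRODUCER_PASSWORD and PLUGIN_PATH are
# placeholder environment variables; set them before calling.
write_connect_properties() {
  local jaas
  jaas="org.apache.kafka.common.security.scram.ScramLoginModule required username=\"producer\" password=\"$PRODUCER_PASSWORD\";"
  cat > "${1:-connect.properties}" <<EOF
bootstrap.servers=$MSK_BOOTSTRAP
security.protocol=SASL_SSL
producer.security.protocol=SASL_SSL
sasl.mechanism=SCRAM-SHA-512
producer.sasl.mechanism=SCRAM-SHA-512
sasl.jaas.config=$jaas
producer.sasl.jaas.config=$jaas
client.id=loosehanger
group.id=connect-group
key.converter=org.apache.kafka.connect.storage.StringConverter
value.converter=org.apache.kafka.connect.json.JsonConverter
key.converter.schemas.enable=false
value.converter.schemas.enable=false
offset.storage.file.filename=/tmp/connect/offsets
plugin.path=$PLUGIN_PATH
EOF
}

# Usage:
# write_connect_properties connect.properties
```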

We then created a properties file called connector.properties with the following config.

(You can see the other options we could have set in the connector README).

```properties
name=msk-loosehanger
connector.class=com.ibm.eventautomation.demos.loosehangerjeans.DatagenSourceConnector
```

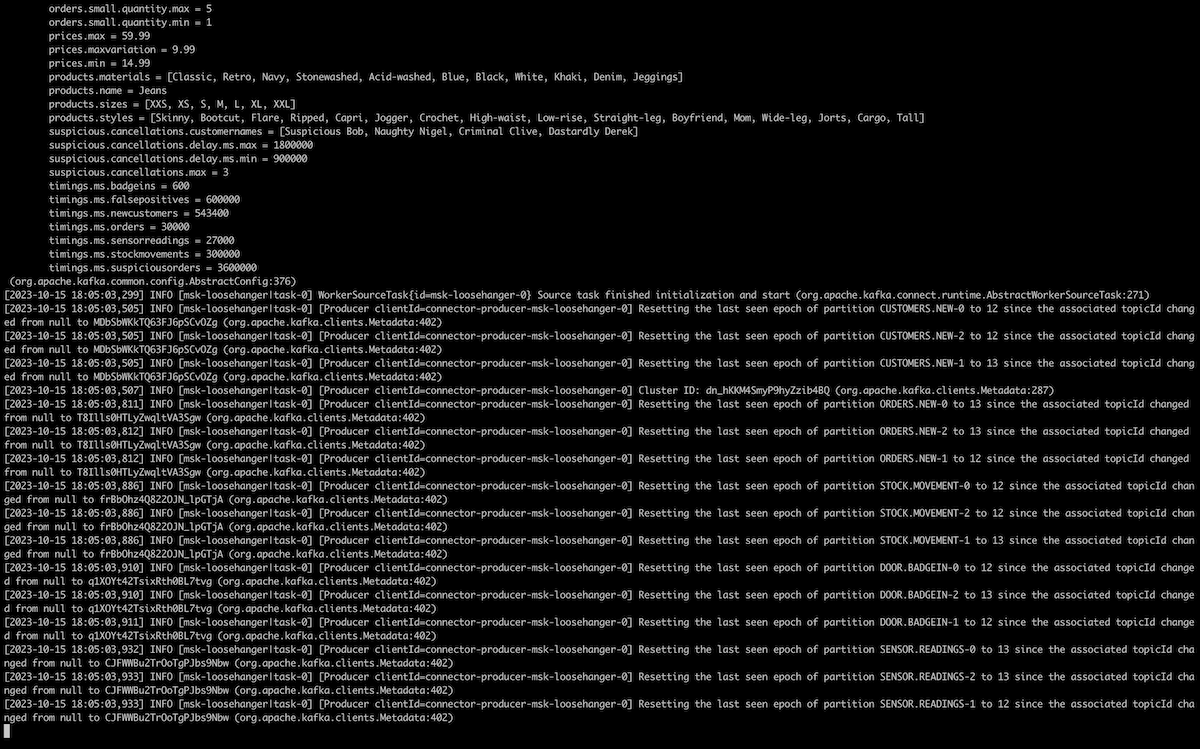

We ran it using connect-standalone.sh (a script included in the bin folder of the Apache Kafka download from kafka.apache.org).

```shell
connect-standalone.sh connect.properties connector.properties
```

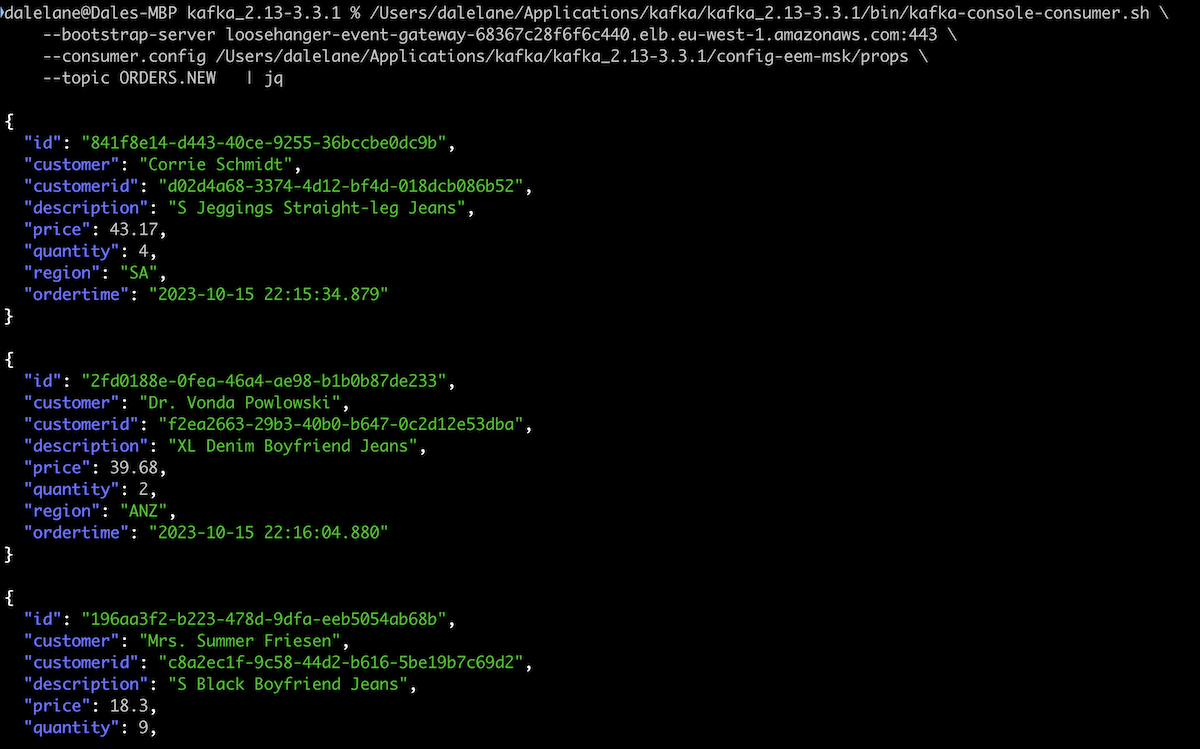

We left this running to create a live stream of events that we could use for demos.
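One way to confirm that events are arriving is to read a few back with the console consumer from the Kafka download, authenticating as the consumer user (which also exercises the consumer ACLs set up earlier). This is a sketch; `write_consumer_properties` and `CONSUMER_PASSWORD` are hypothetical names.

```shell
# Write client settings for the consumer user. CONSUMER_PASSWORD is a
# placeholder for the password stored in the AmazonMSK_consumer secret.
write_consumer_properties() {
  cat > "${1:-consumer.properties}" <<EOF
security.protocol=SASL_SSL
sasl.mechanism=SCRAM-SHA-512
sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="consumer" password="$CONSUMER_PASSWORD";
EOF
}

# Usage: print five events from ORDERS.NEW, then exit.
# write_consumer_properties
# ./bin/kafka-console-consumer.sh \
#   --bootstrap-server b-1-public.loosehangermsk.krrnez.c3.kafka.eu-west-1.amazonaws.com:9196 \
#   --consumer.config consumer.properties \
#   --topic ORDERS.NEW --from-beginning --max-messages 5
```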

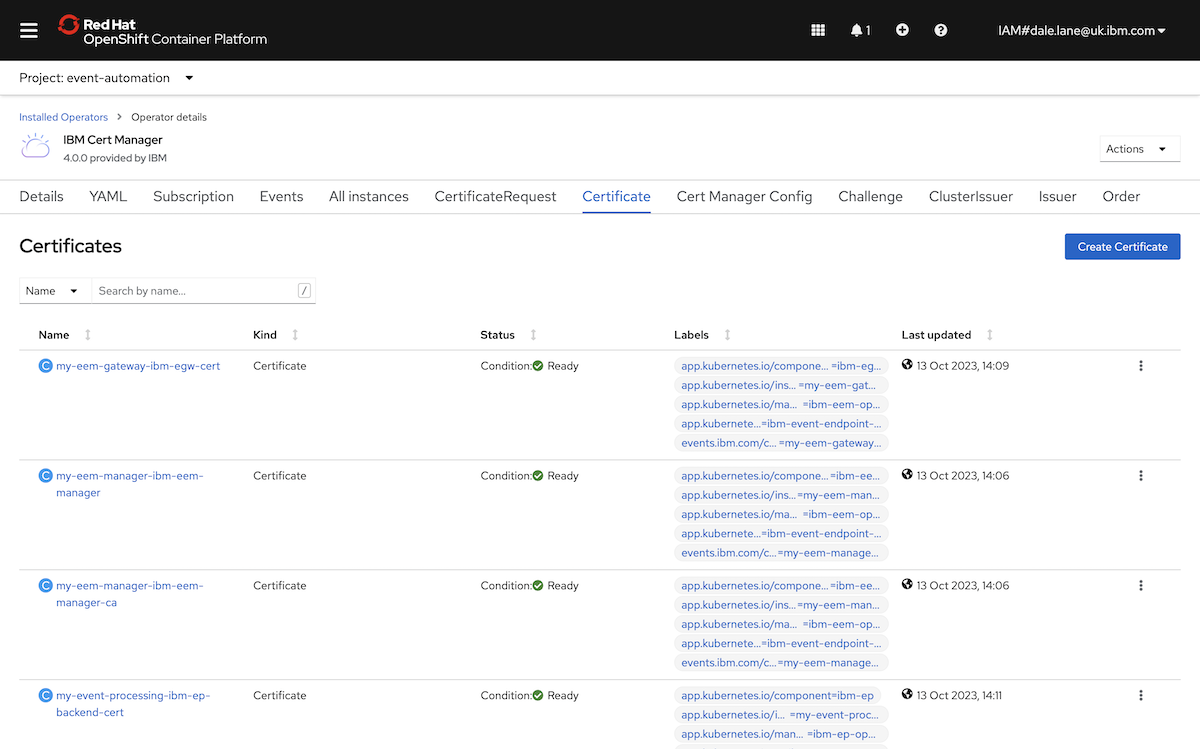

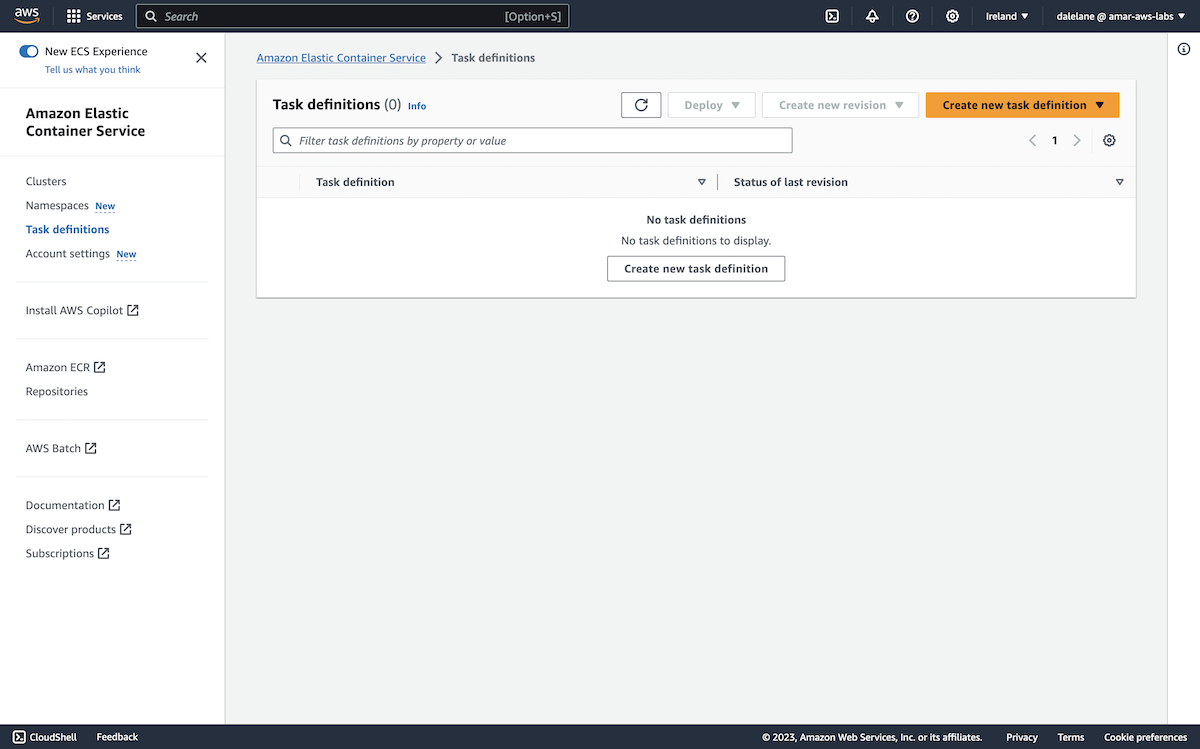

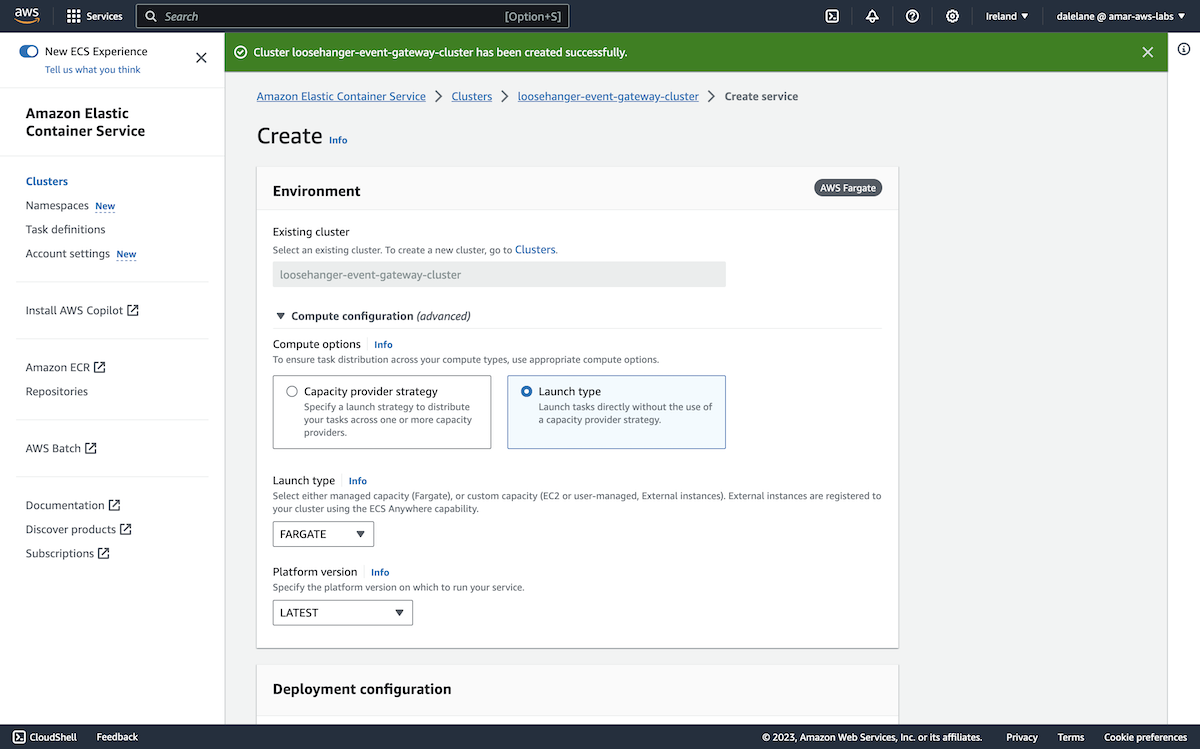

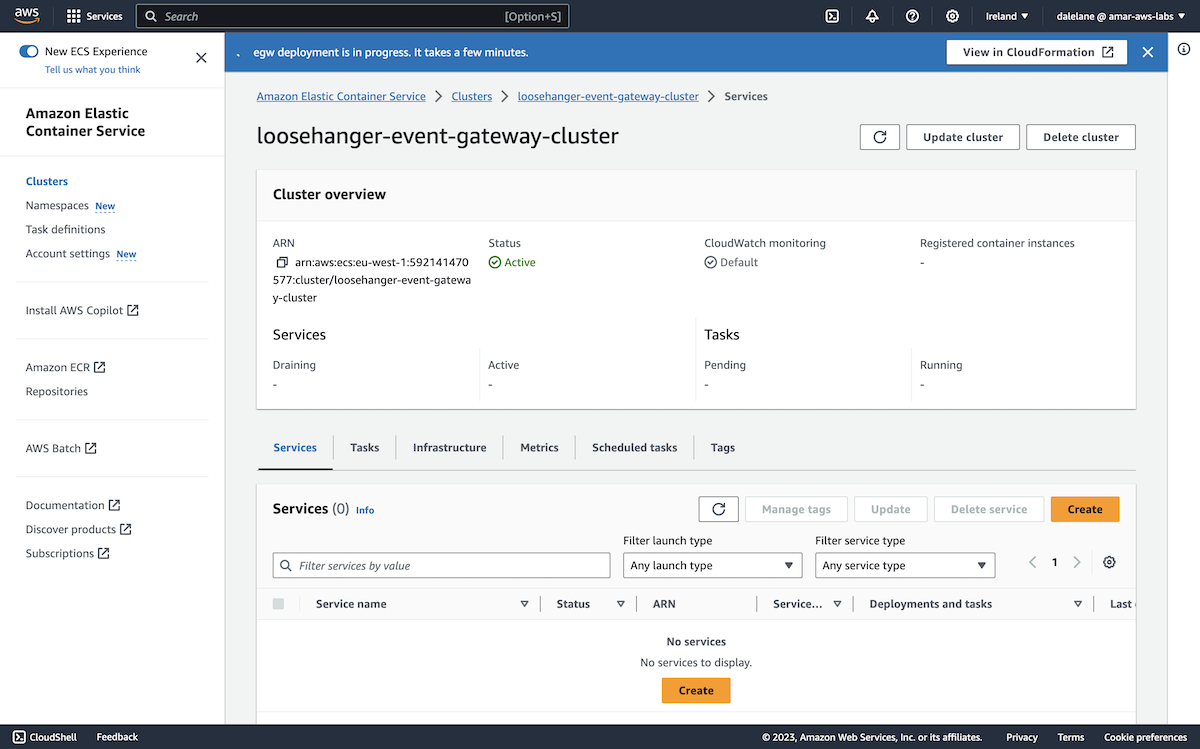

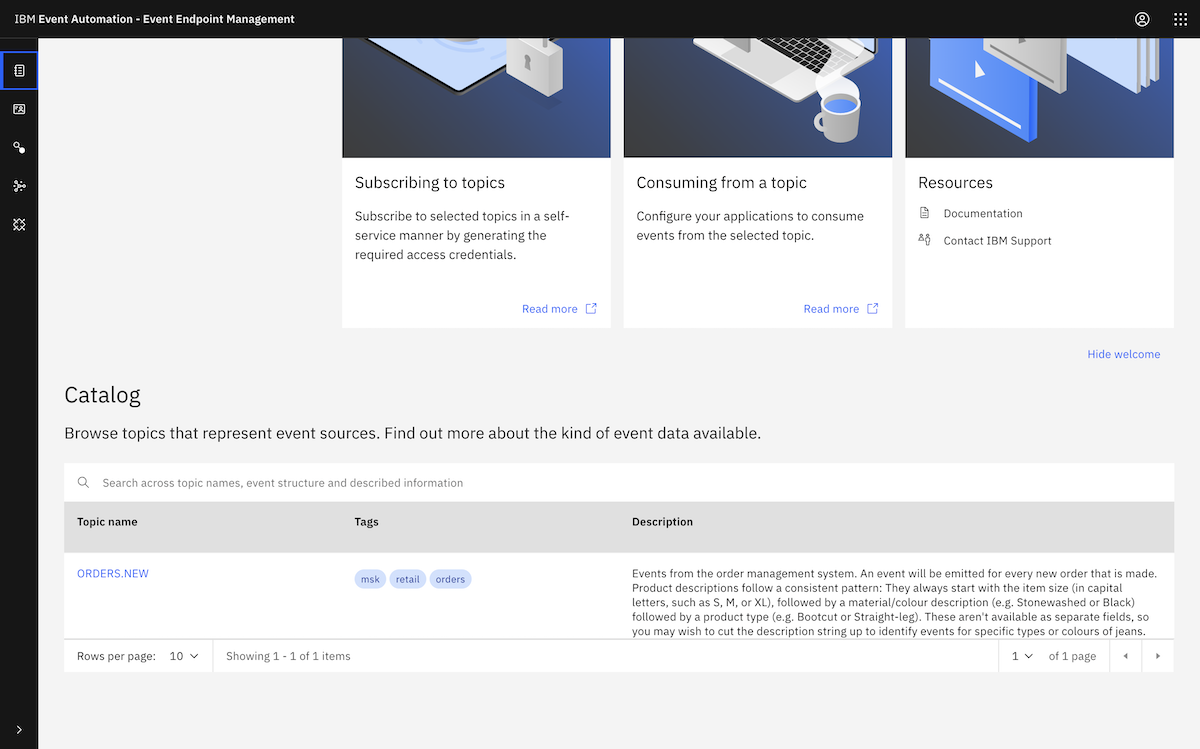

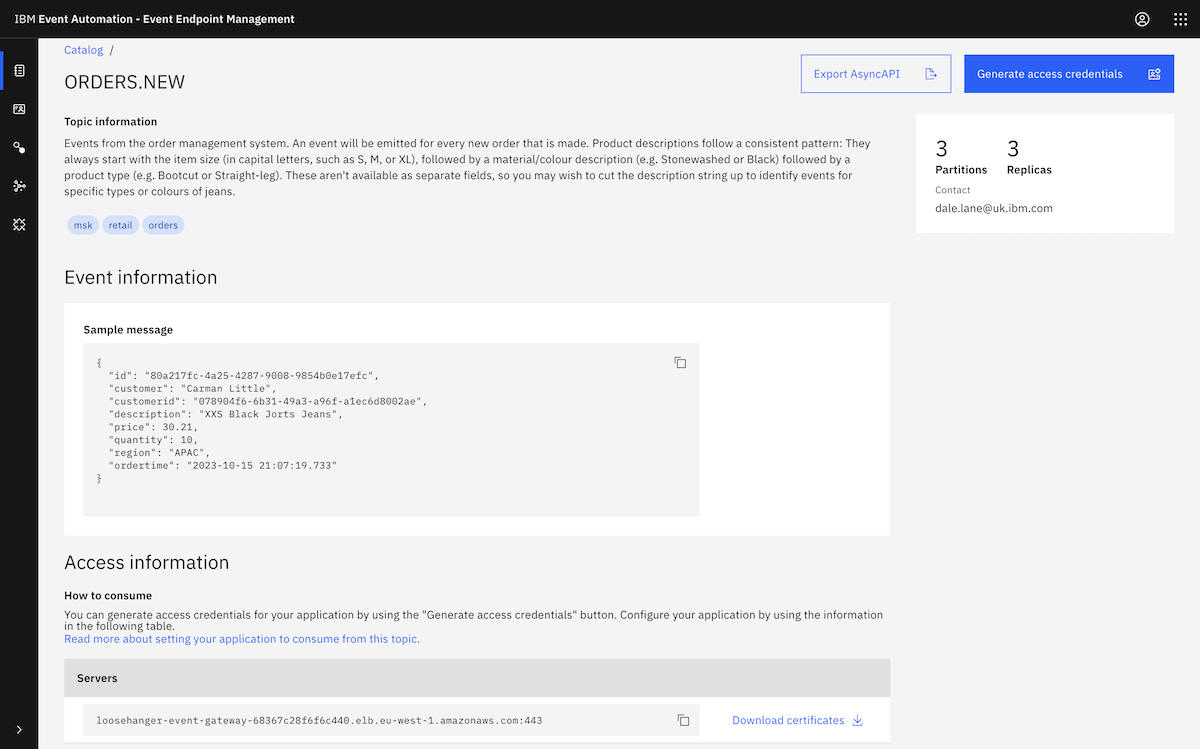

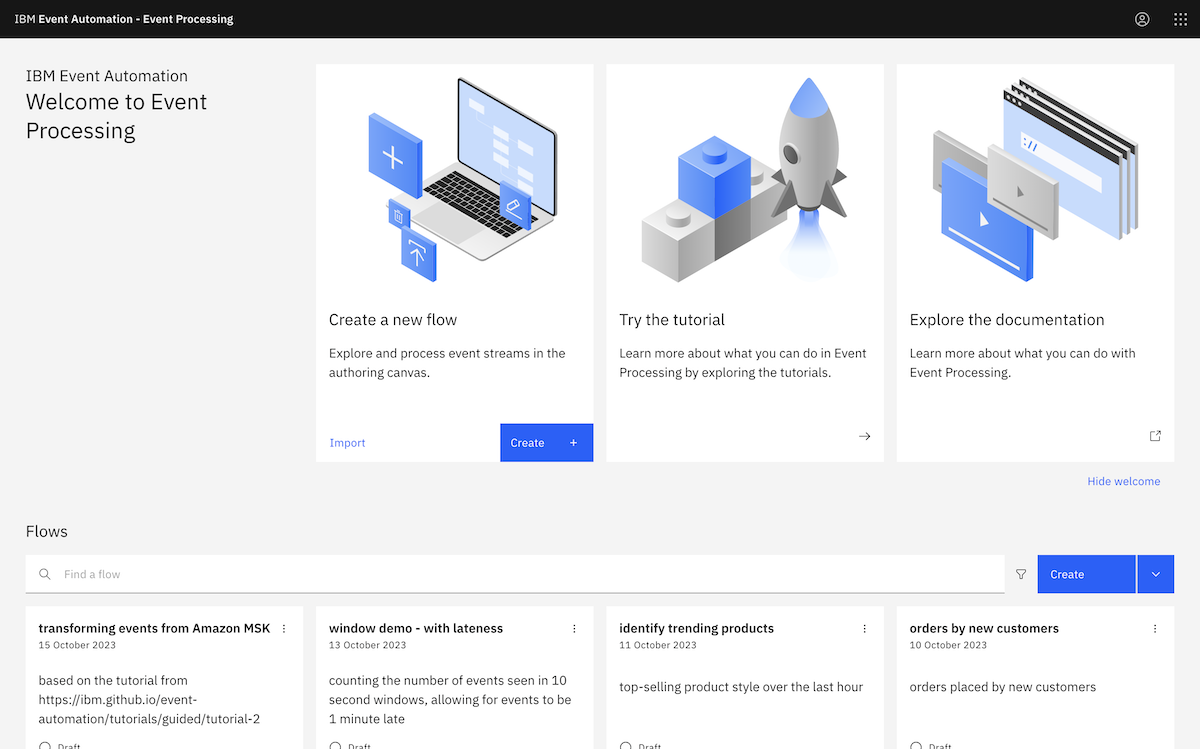

We now had streams of events on MSK topics, ready to process using IBM Event Processing.

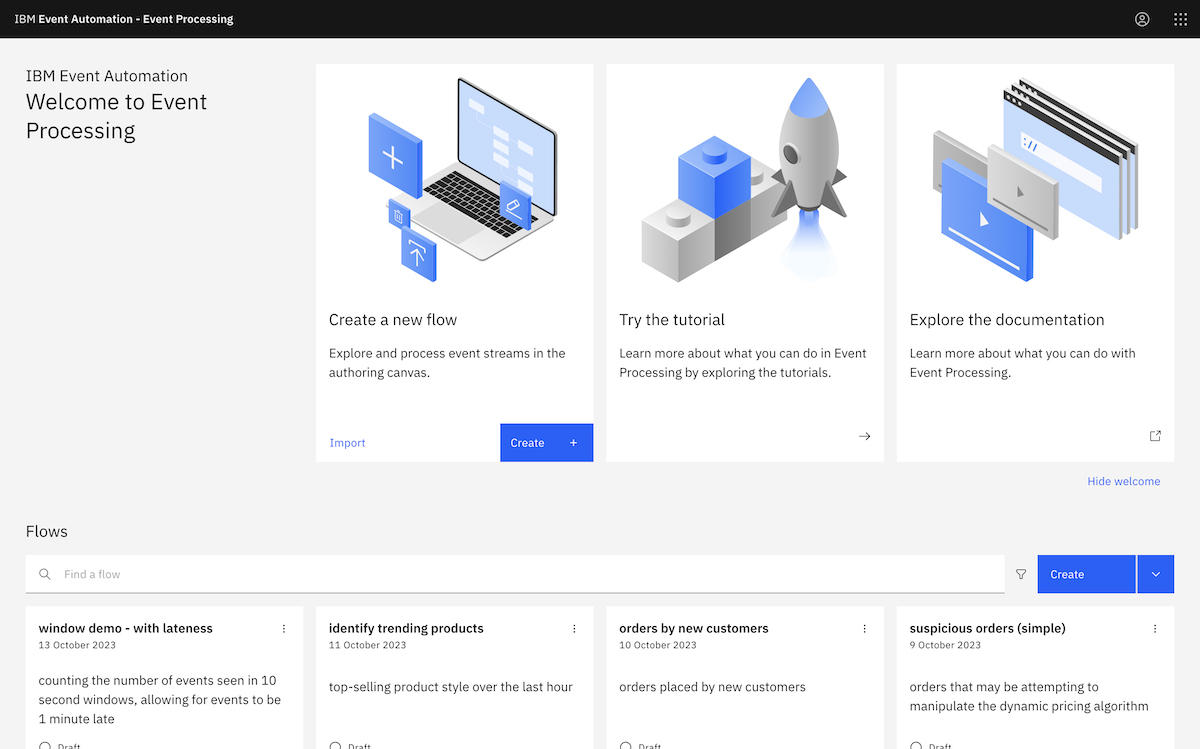

We started by following the Transform events to create or remove properties tutorial from ibm.github.io/event-automation/tutorials.

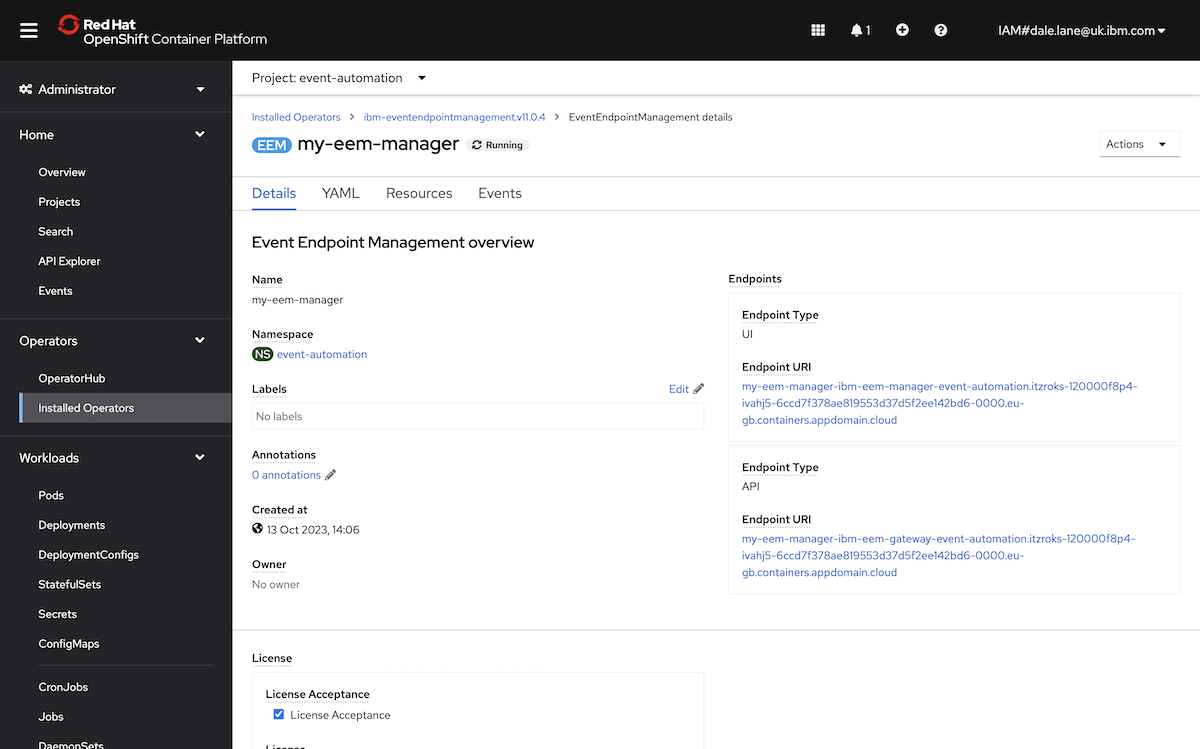

We were running an instance of IBM Event Processing in an OpenShift cluster in IBM Cloud. (For details of how we deployed this, see the first step in the tutorial instructions. It just involved running an Ansible playbook.)

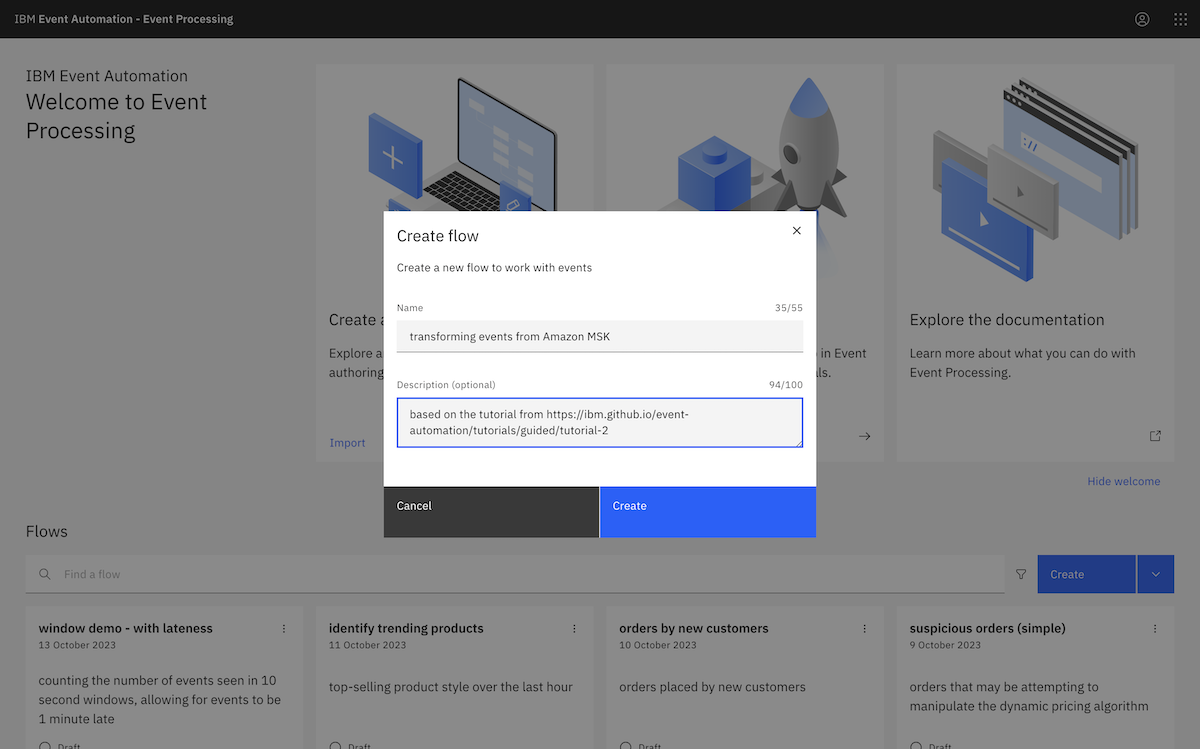

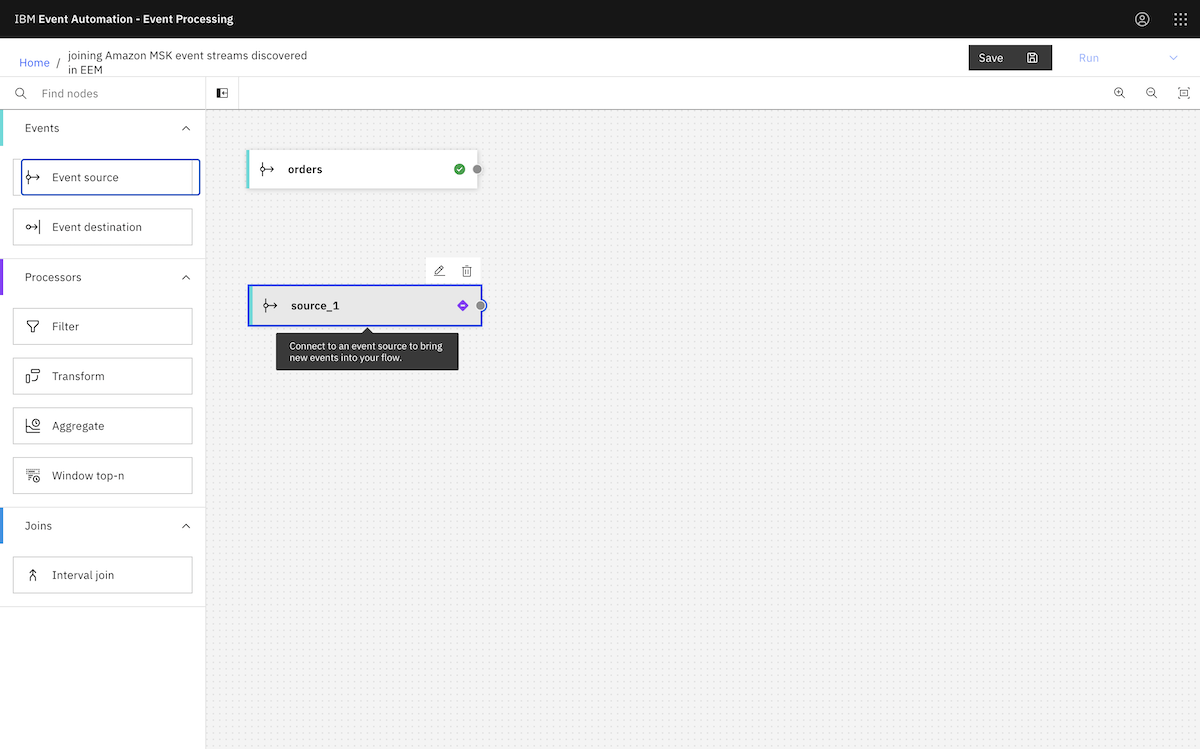

We started by logging on to the Event Processing authoring dashboard.

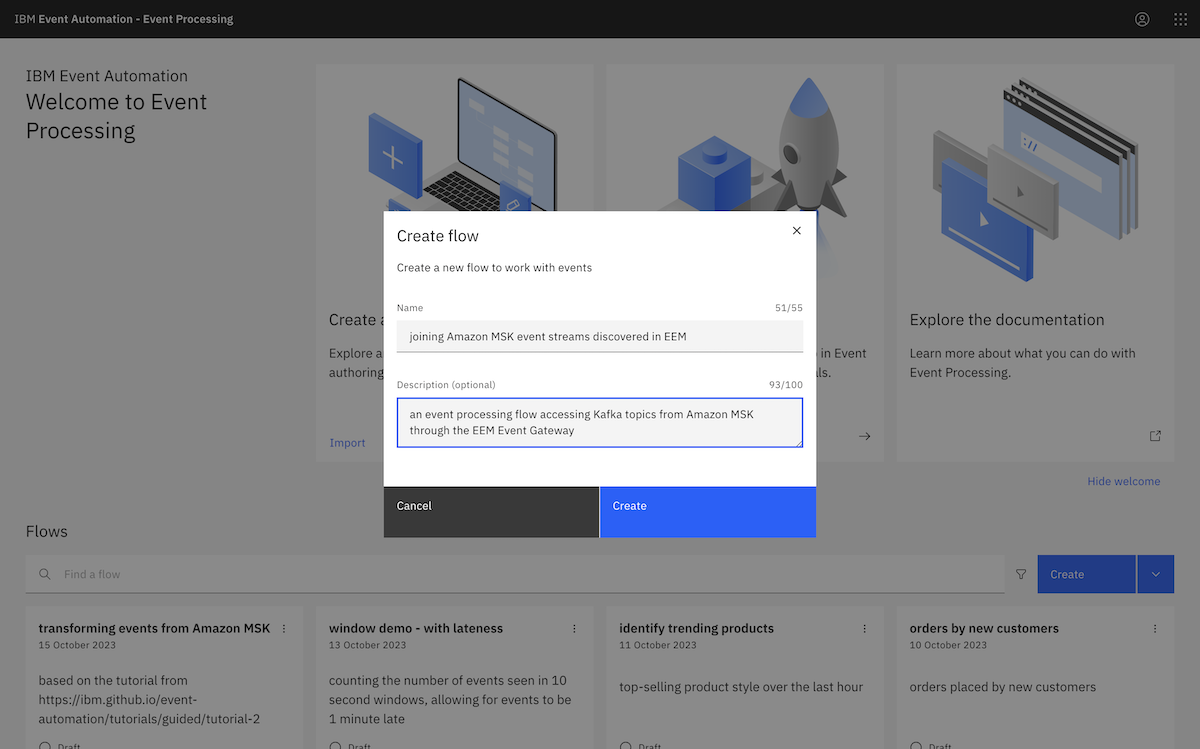

And we started to create a new flow.

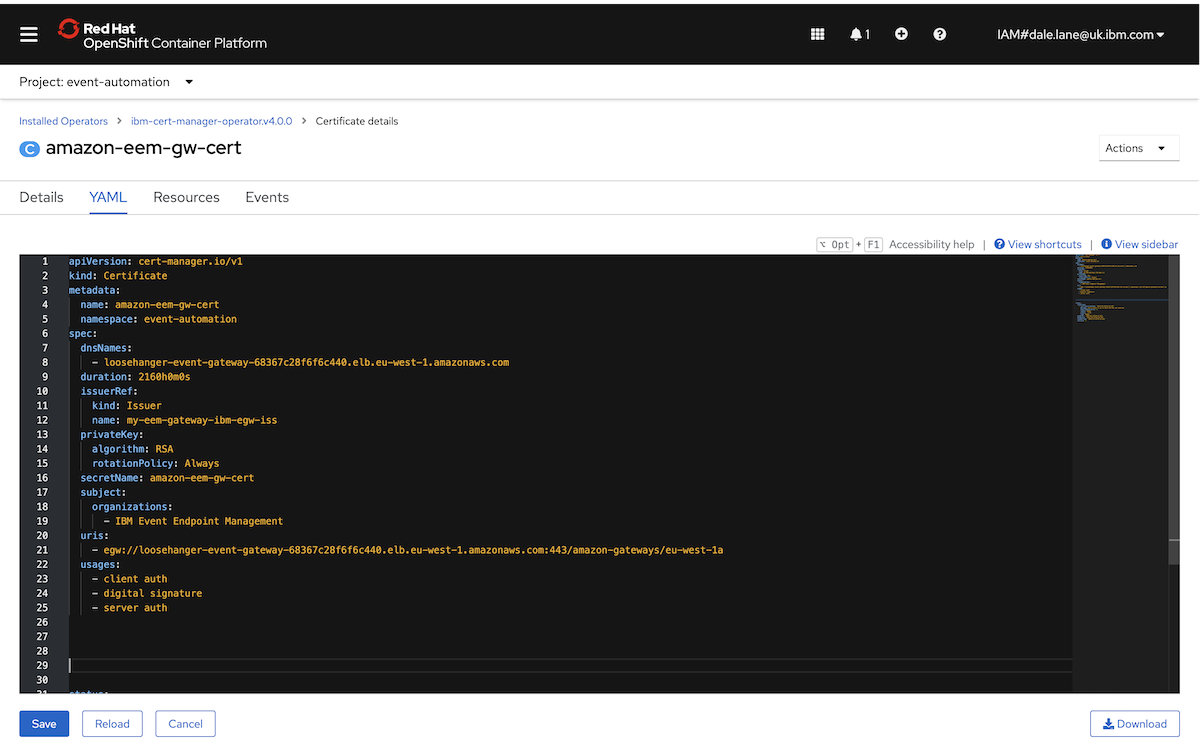

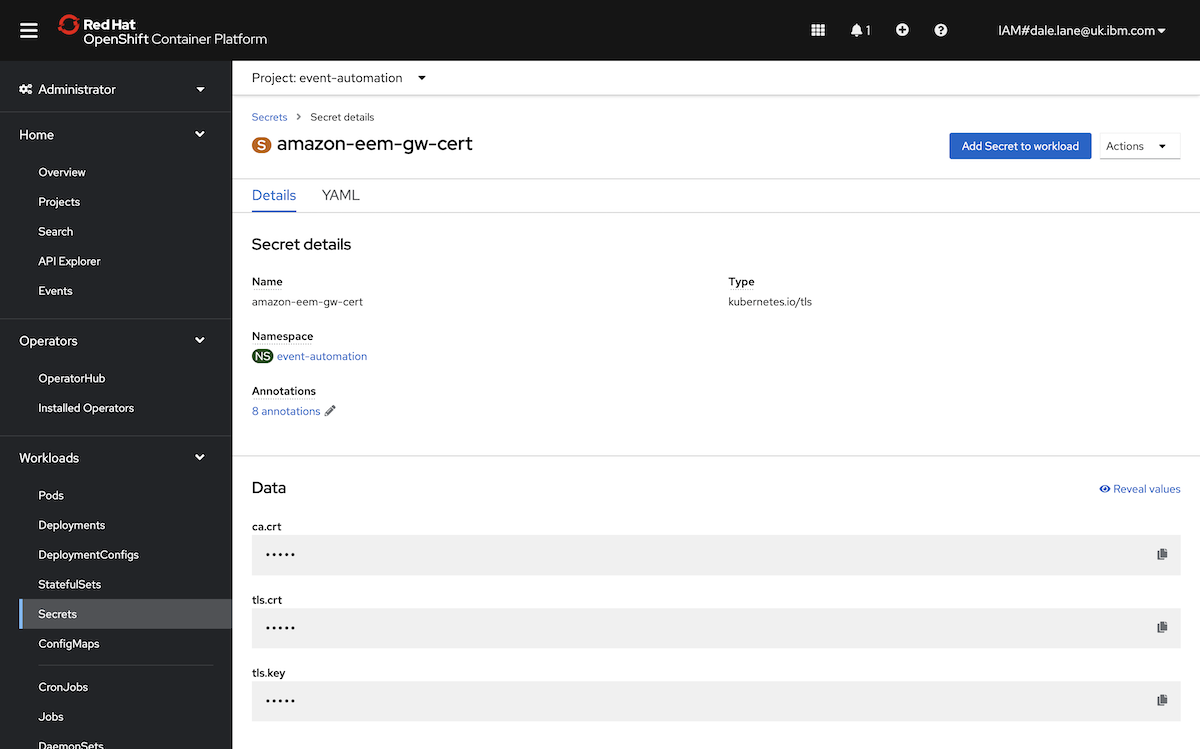

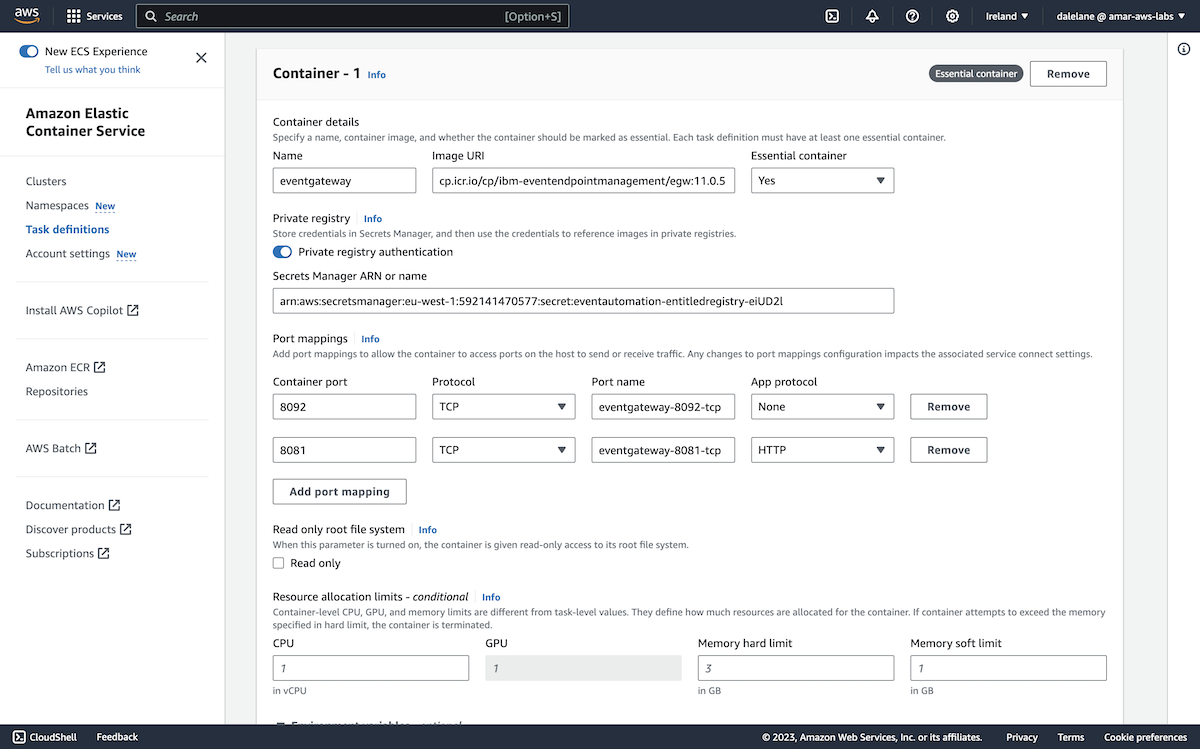

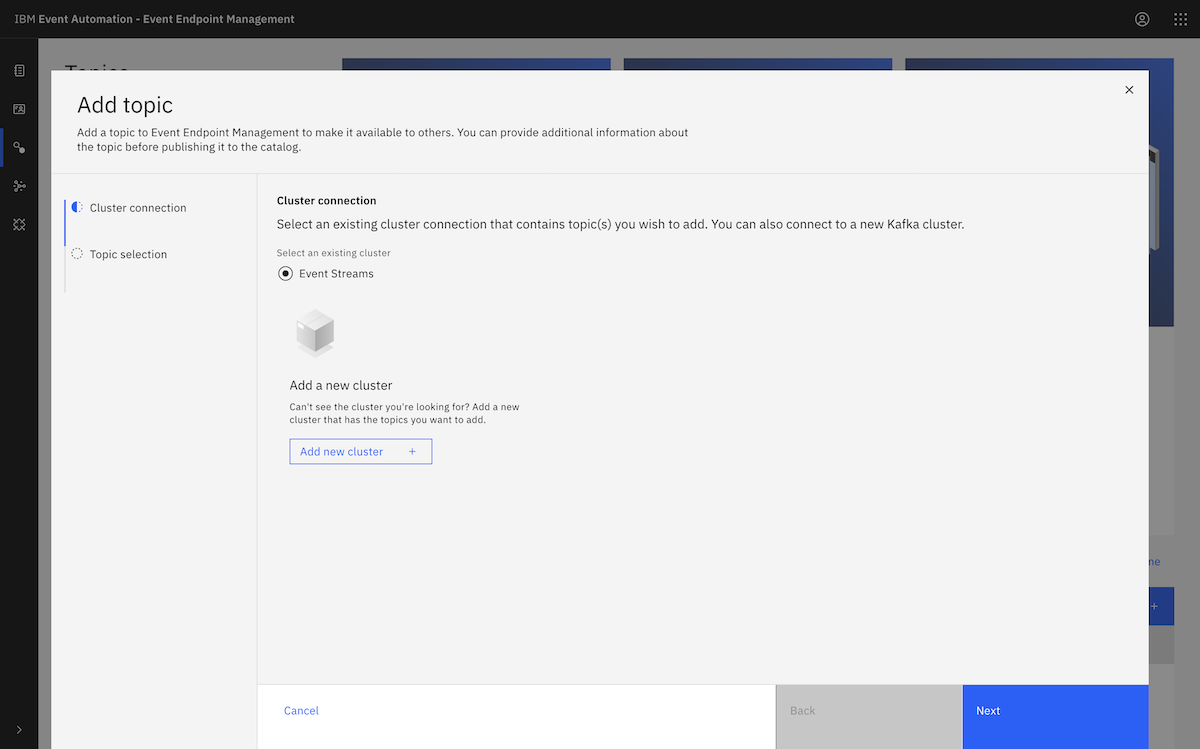

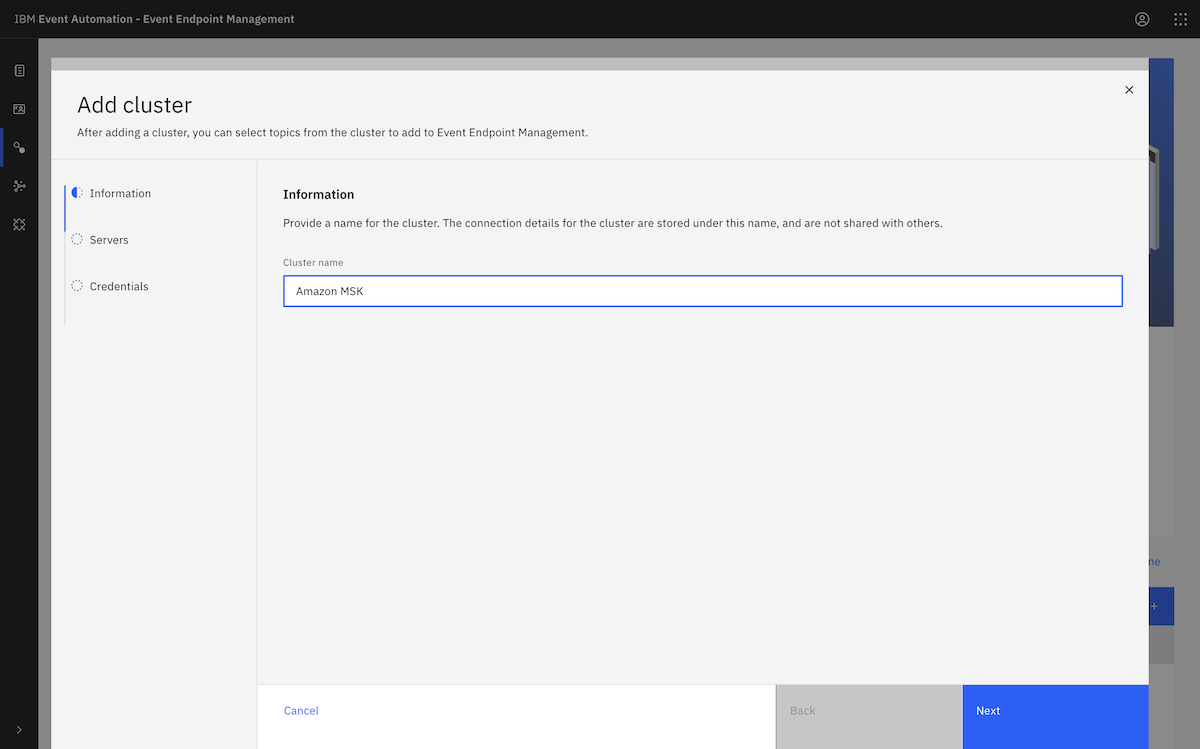

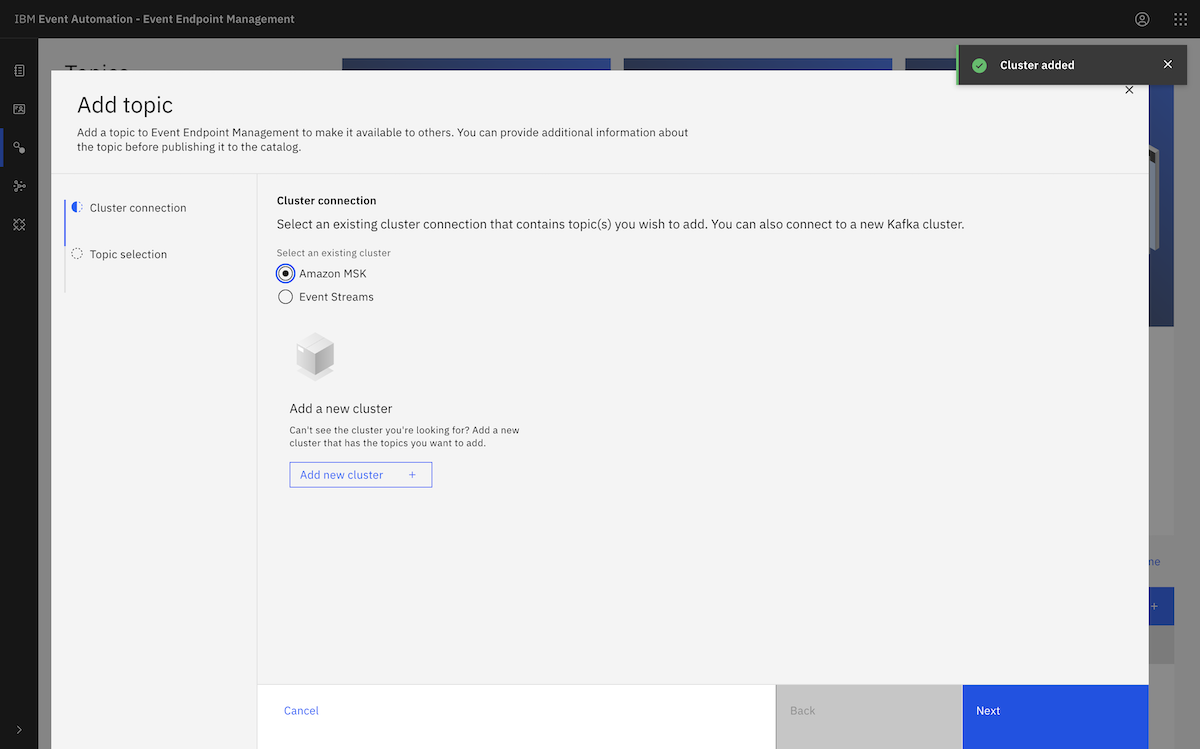

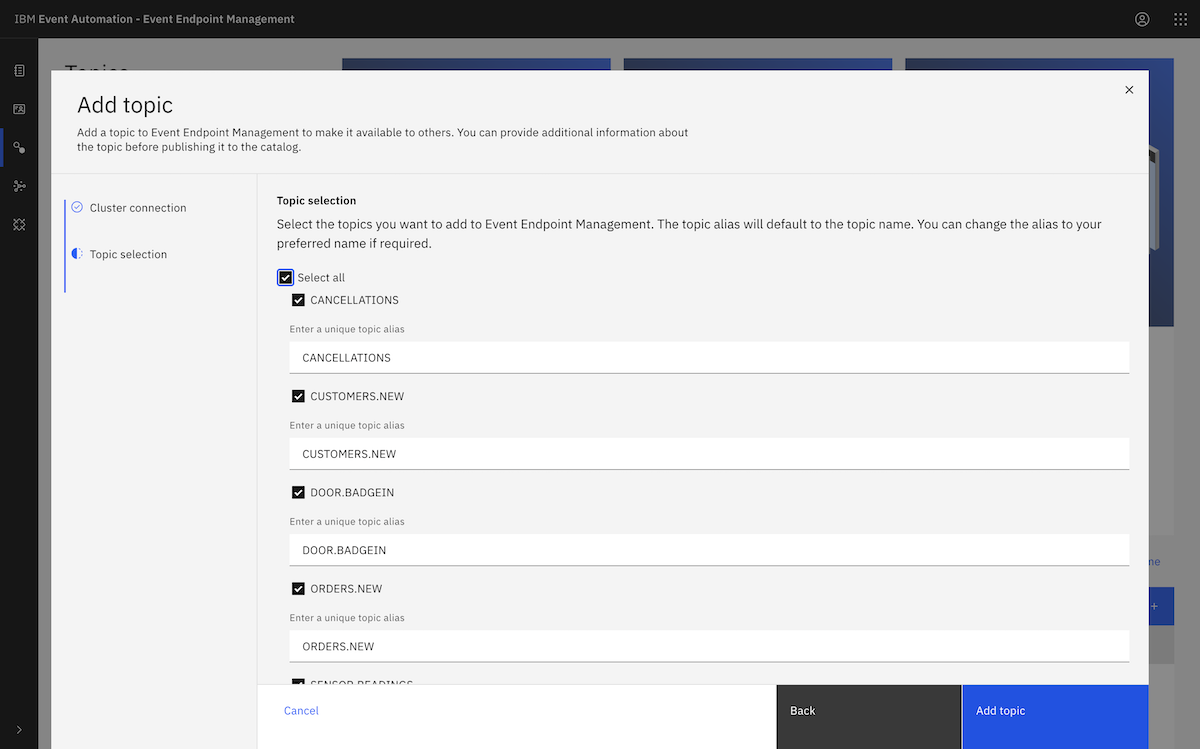

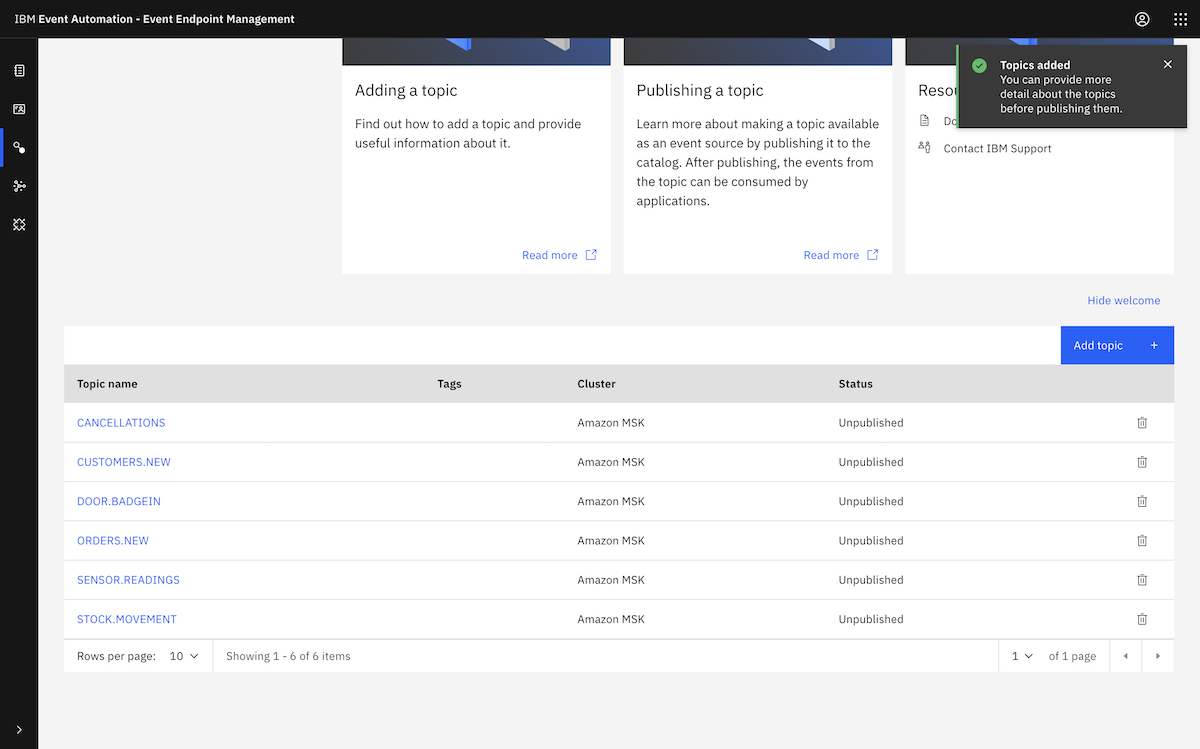

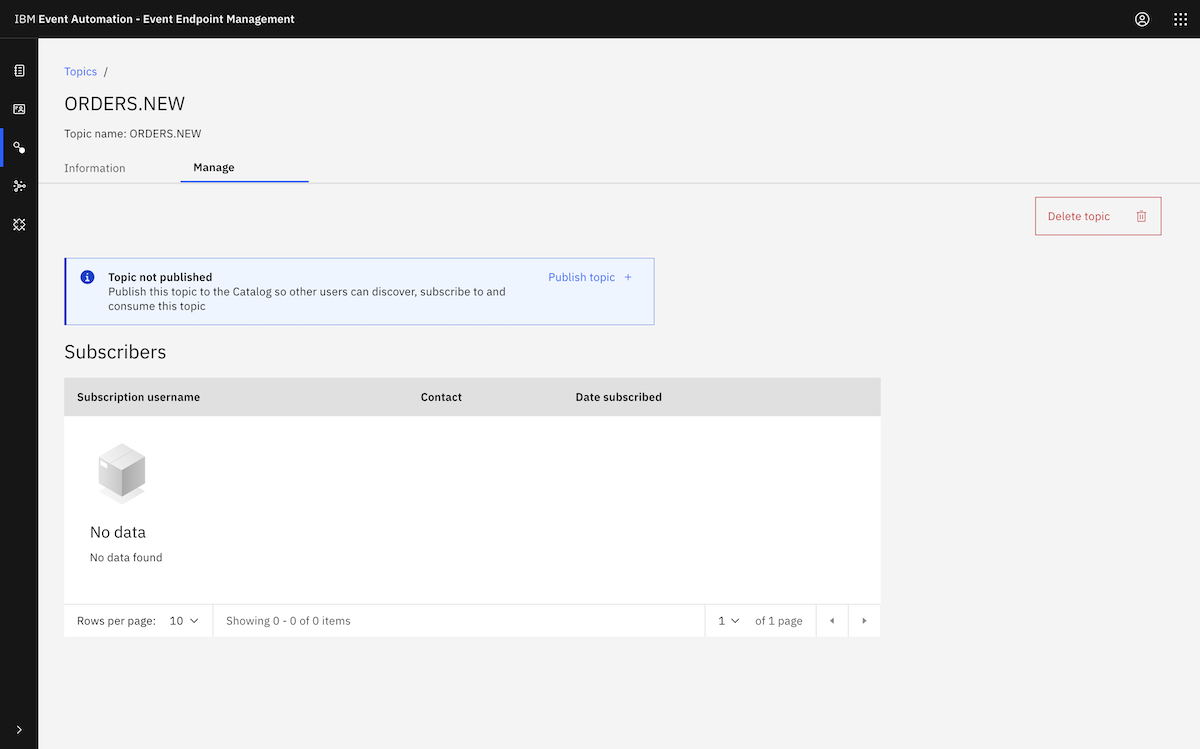

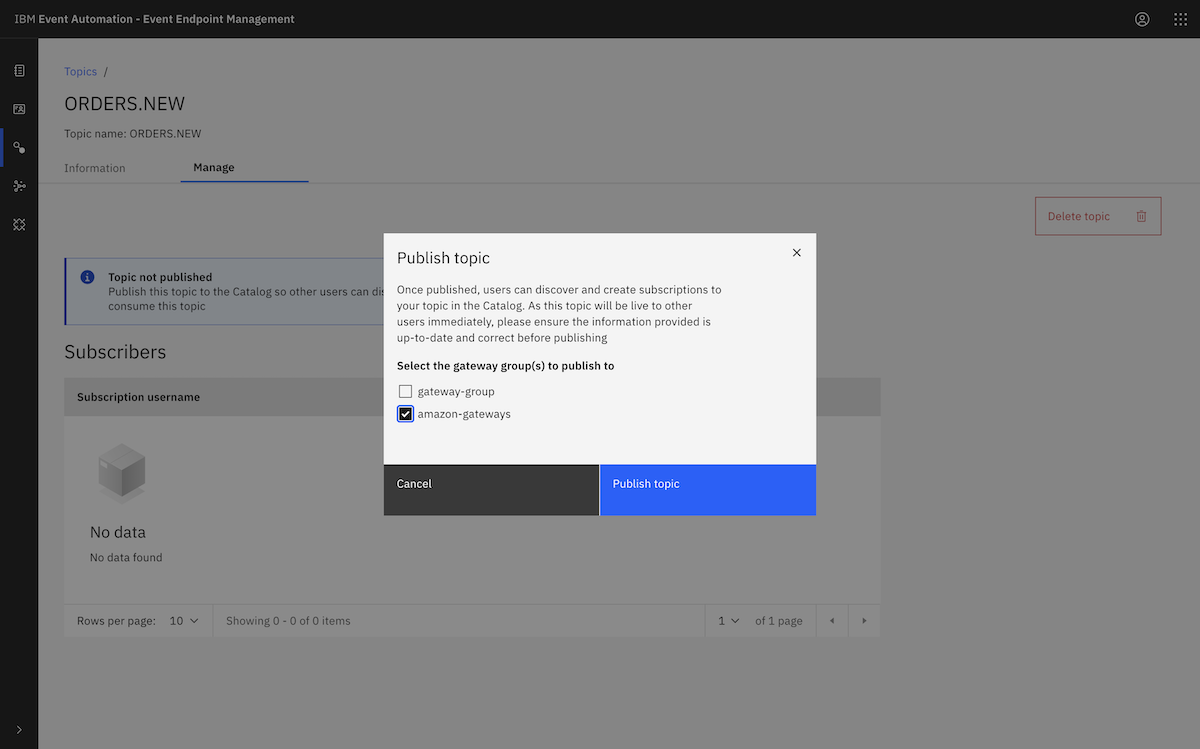

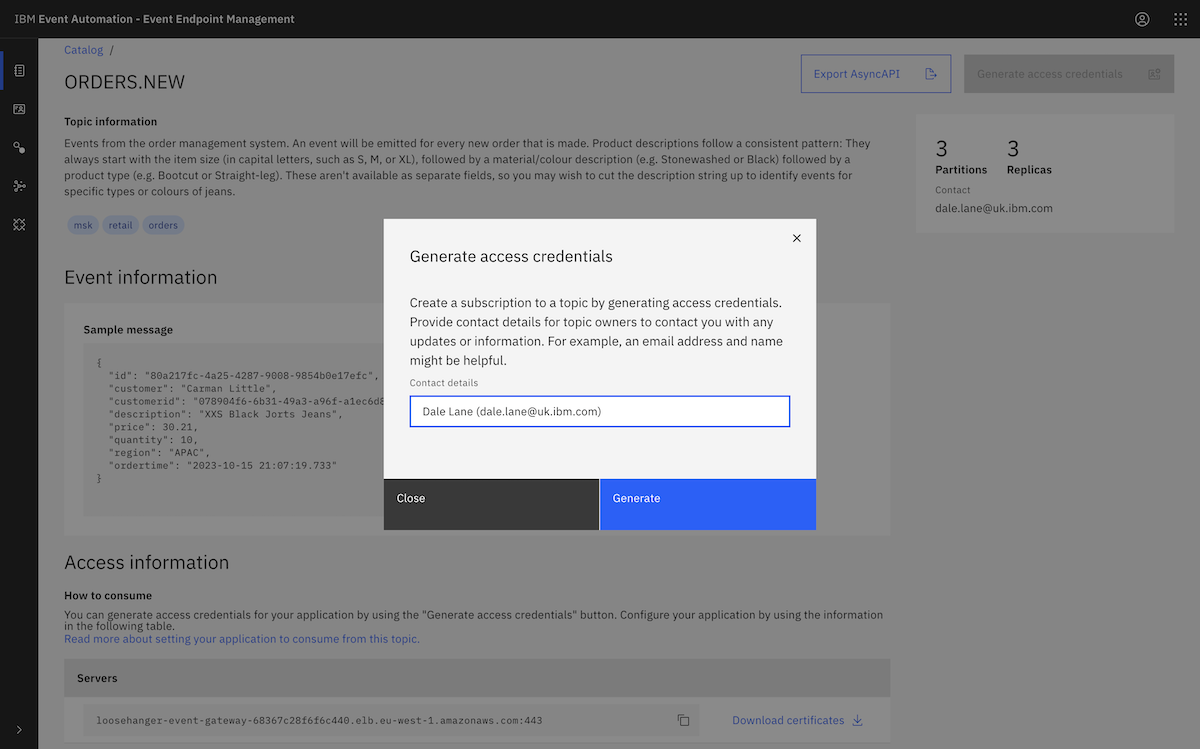

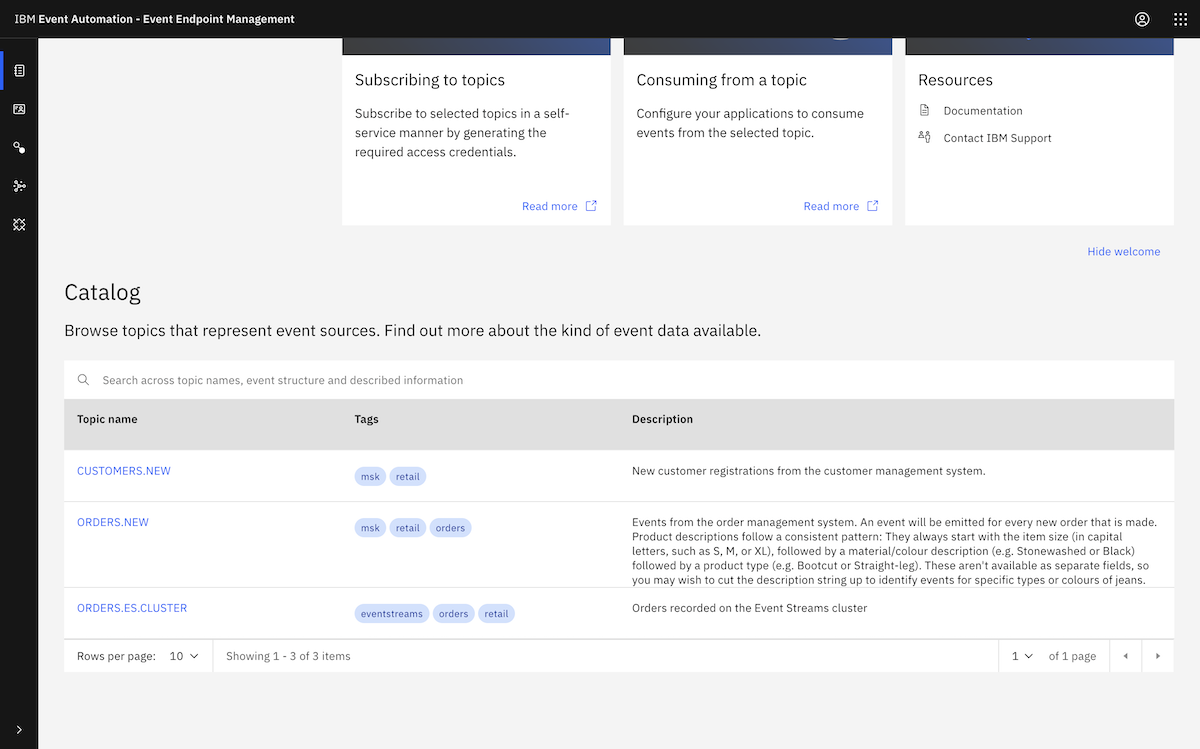

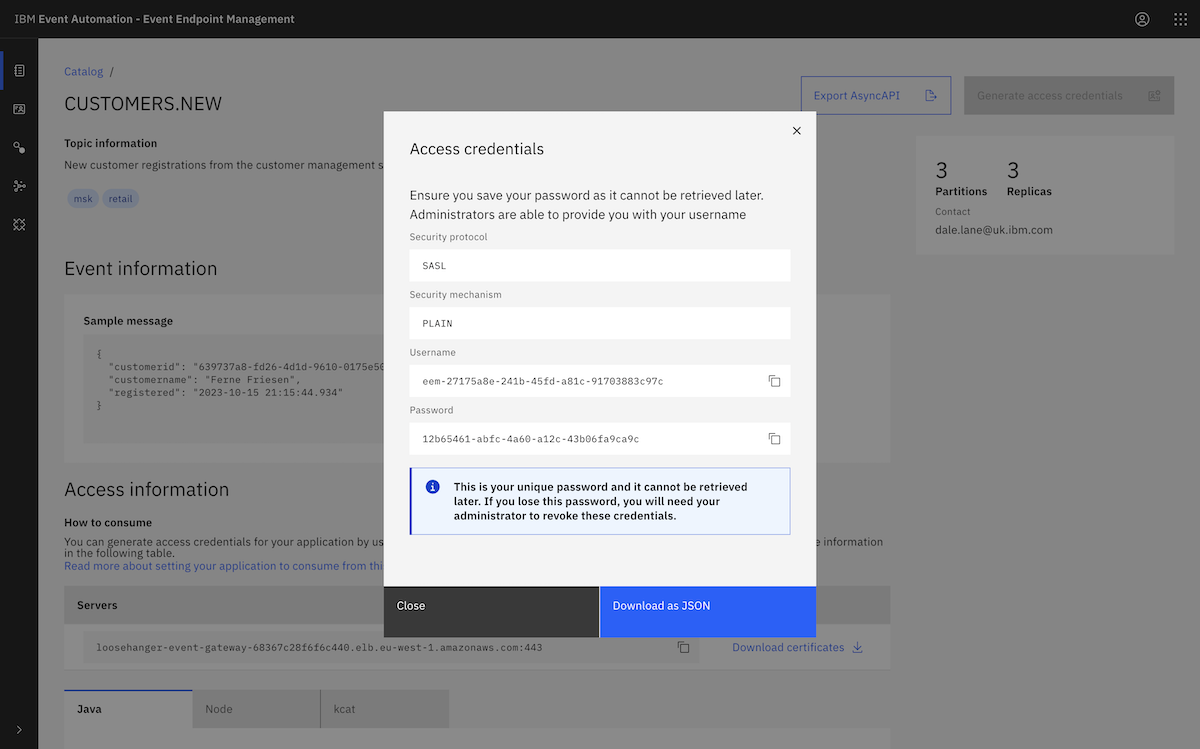

We created an event source in Event Processing using an Amazon MSK topic.

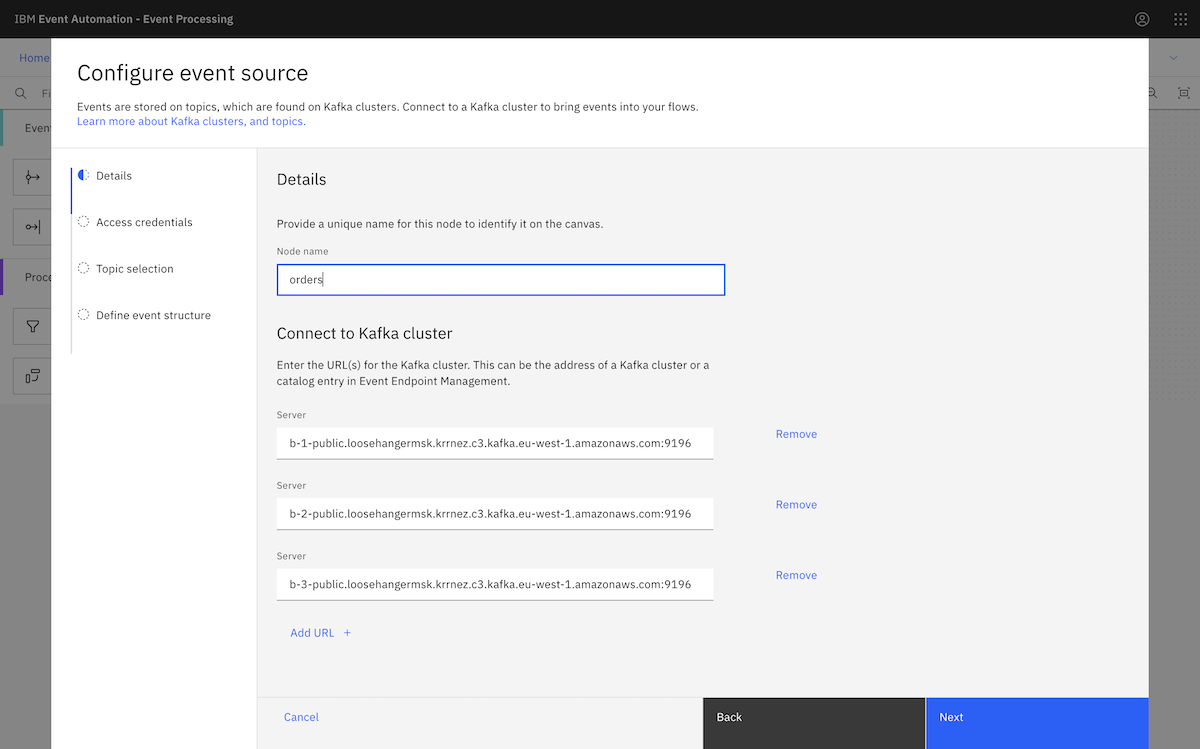

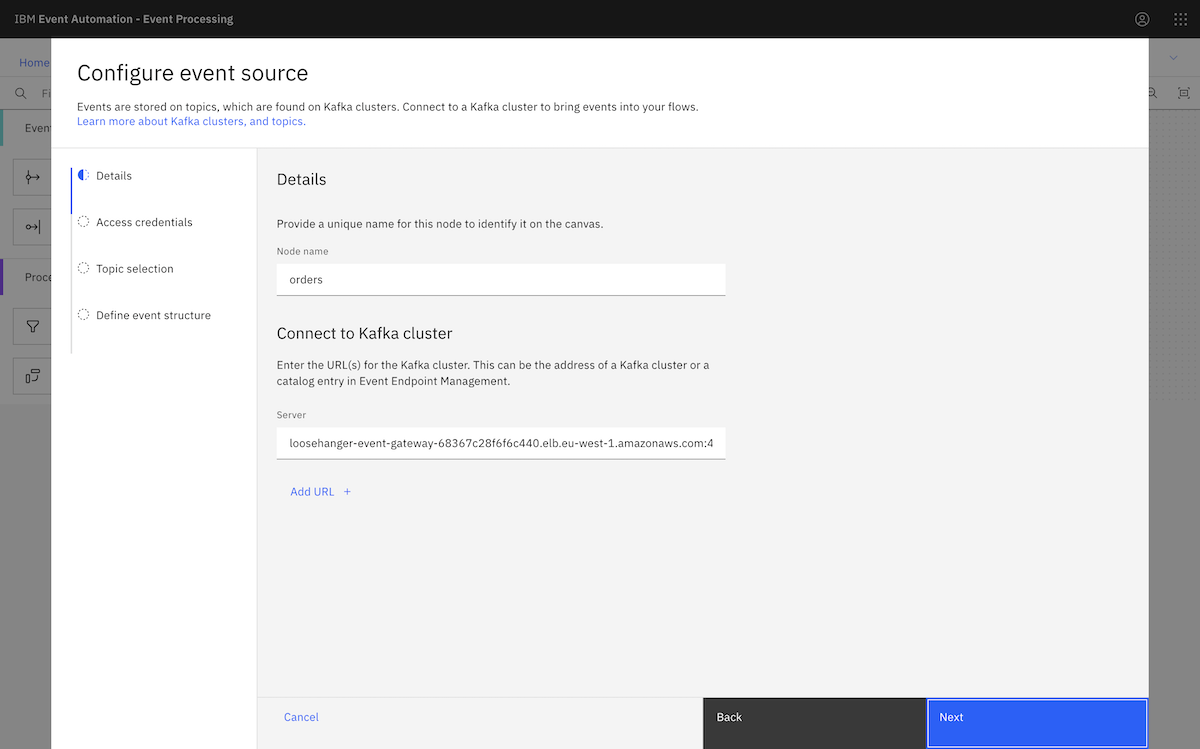

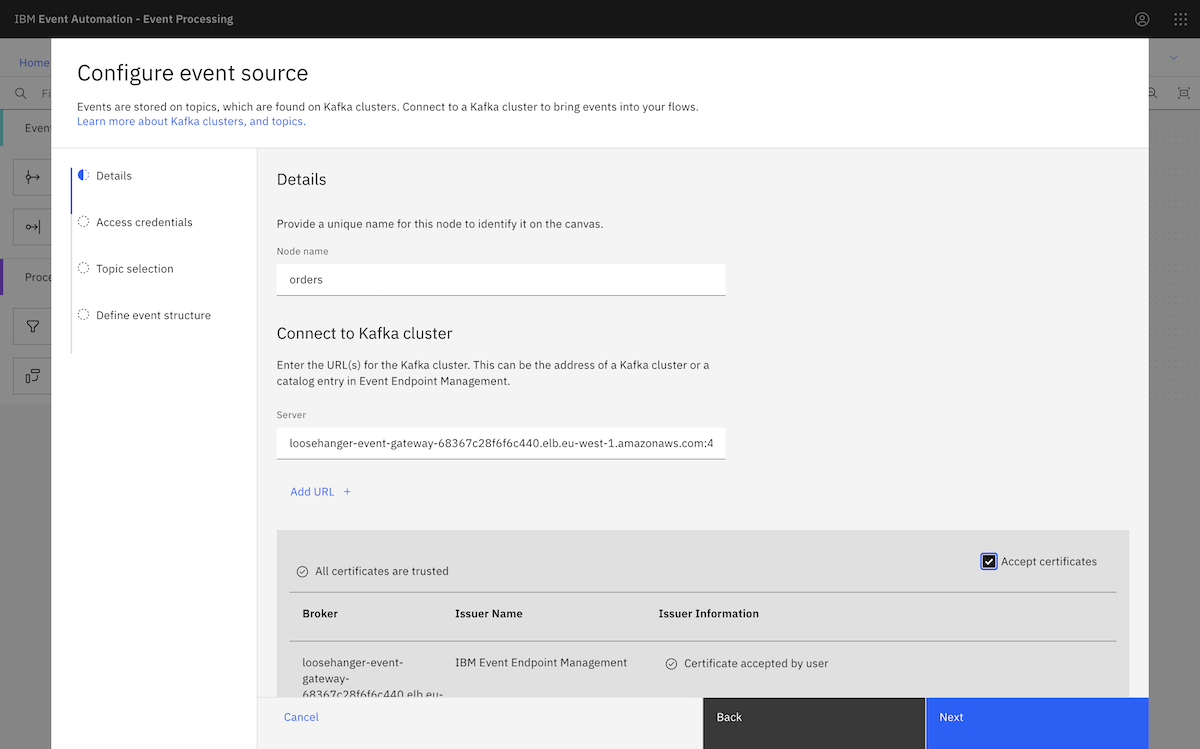

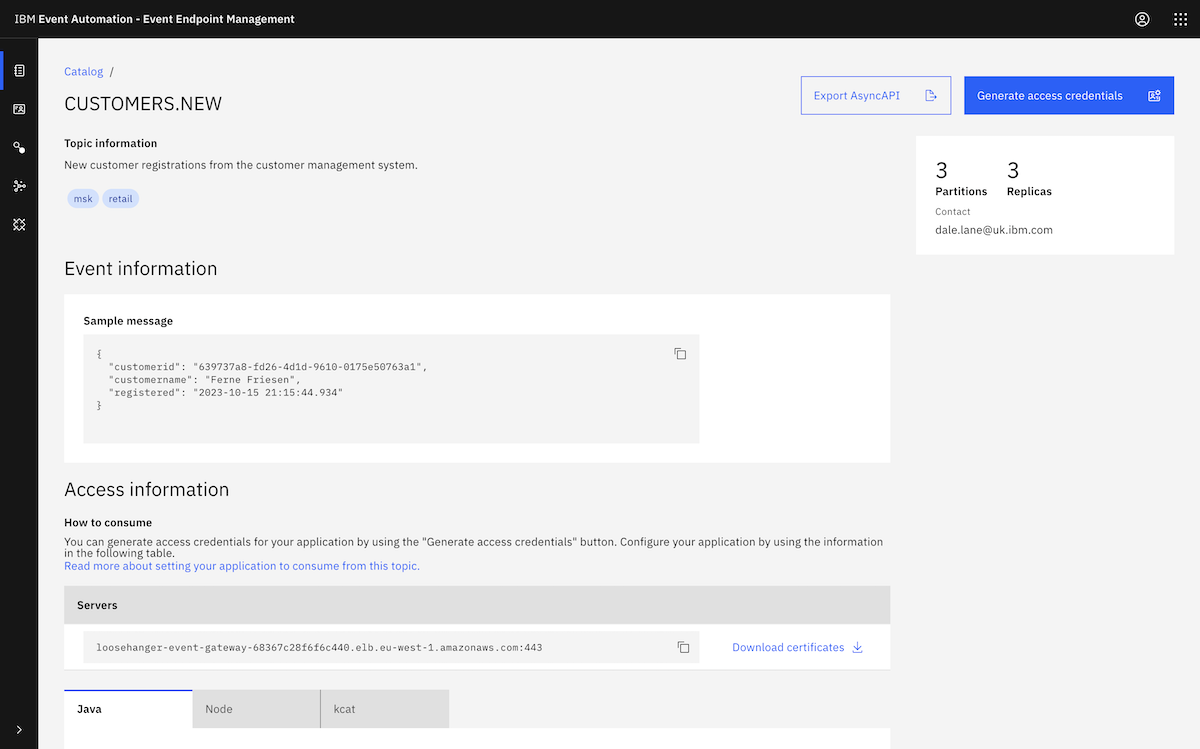

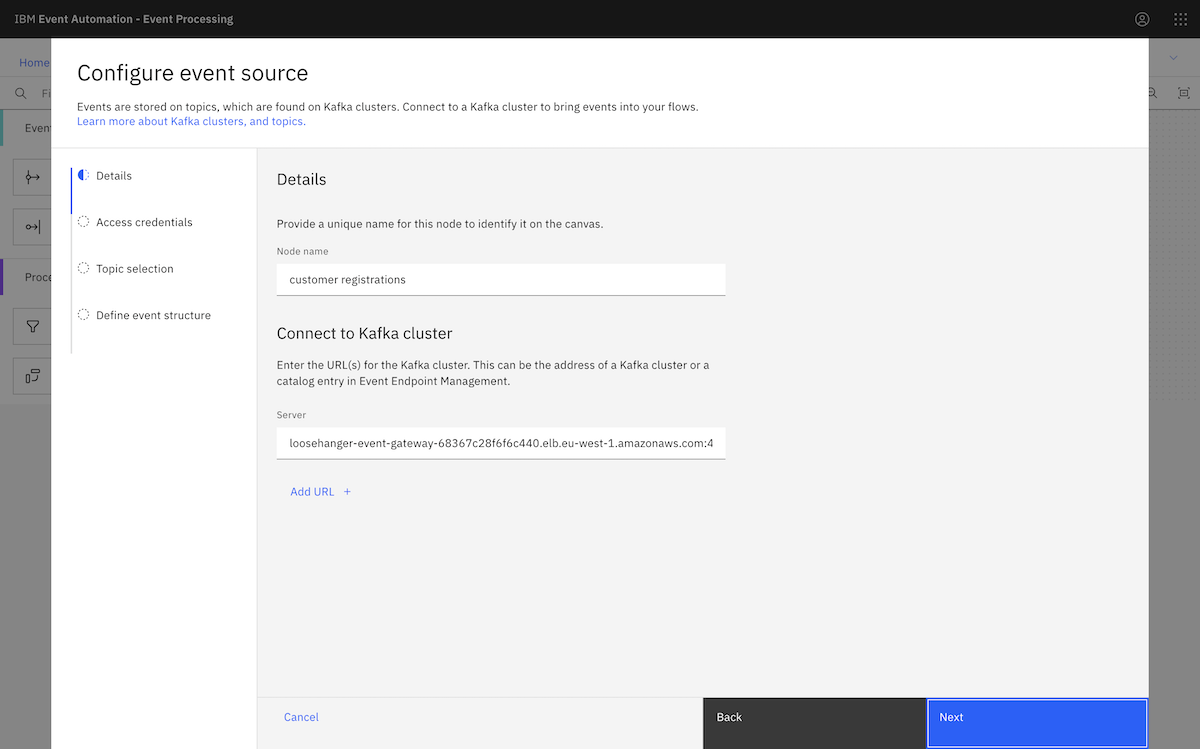

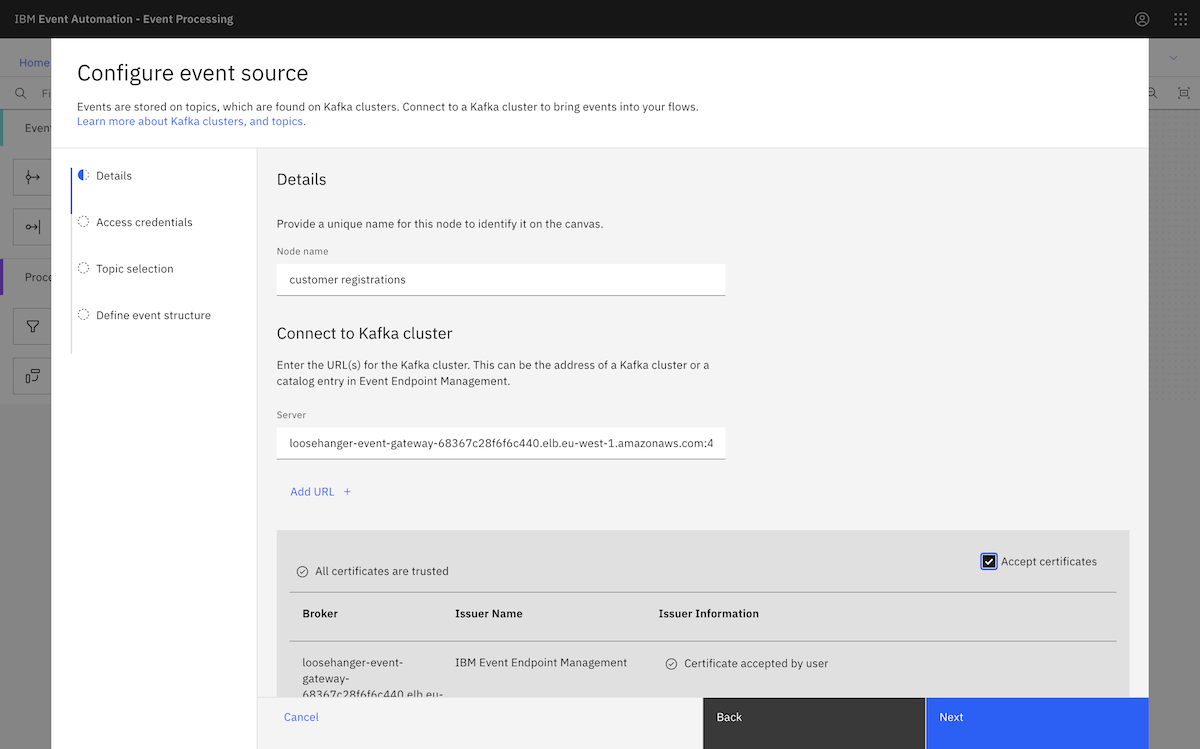

We went to the MSK instance page, and clicked View client information. From there, we copied the public bootstrap address.

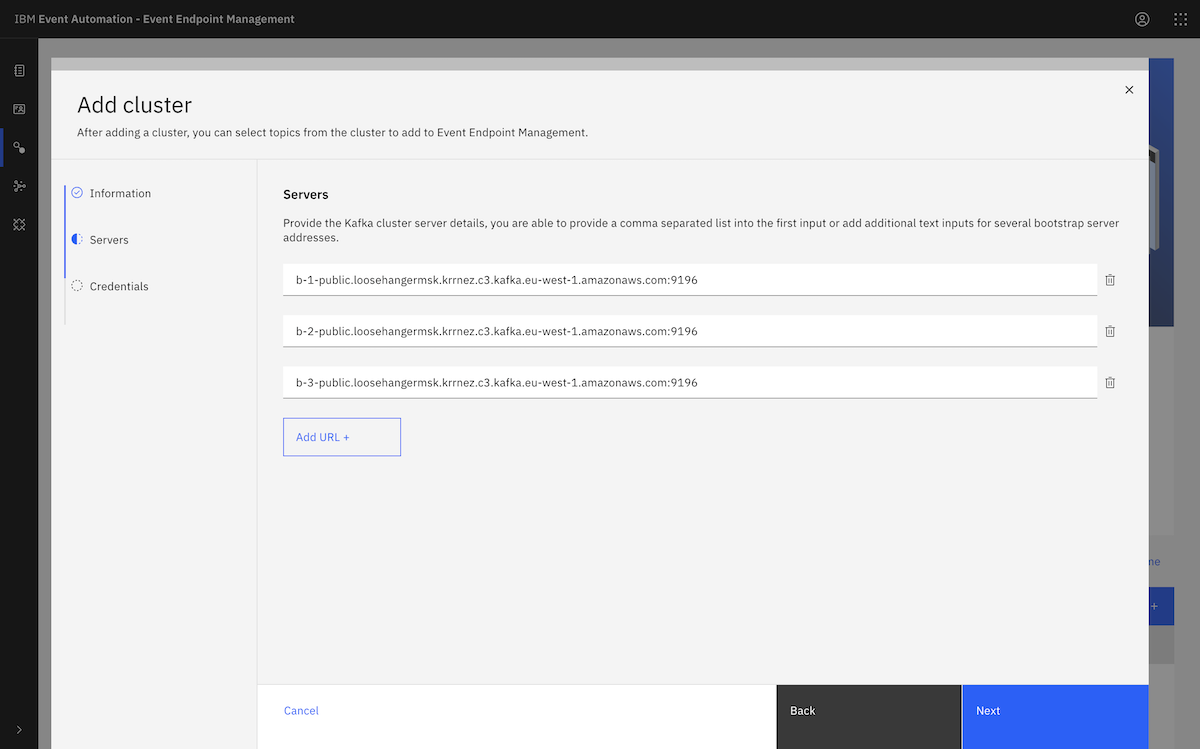

We pasted that into the Server box in the event source configuration page. Event Processing requires each broker address to be entered separately, so we needed to split up the comma-separated list we got from the Amazon MSK page.
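Splitting the comma-separated list into one address per line can also be done in a shell before copying the addresses across. A trivial sketch; `split_bootstrap` and `PUBLIC_BOOTSTRAP` are hypothetical names.

```shell
# Print each address from a comma-separated bootstrap string on its own line.
split_bootstrap() {
  printf '%s\n' "$1" | tr ',' '\n'
}

# Usage:
# split_bootstrap "$PUBLIC_BOOTSTRAP"
```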

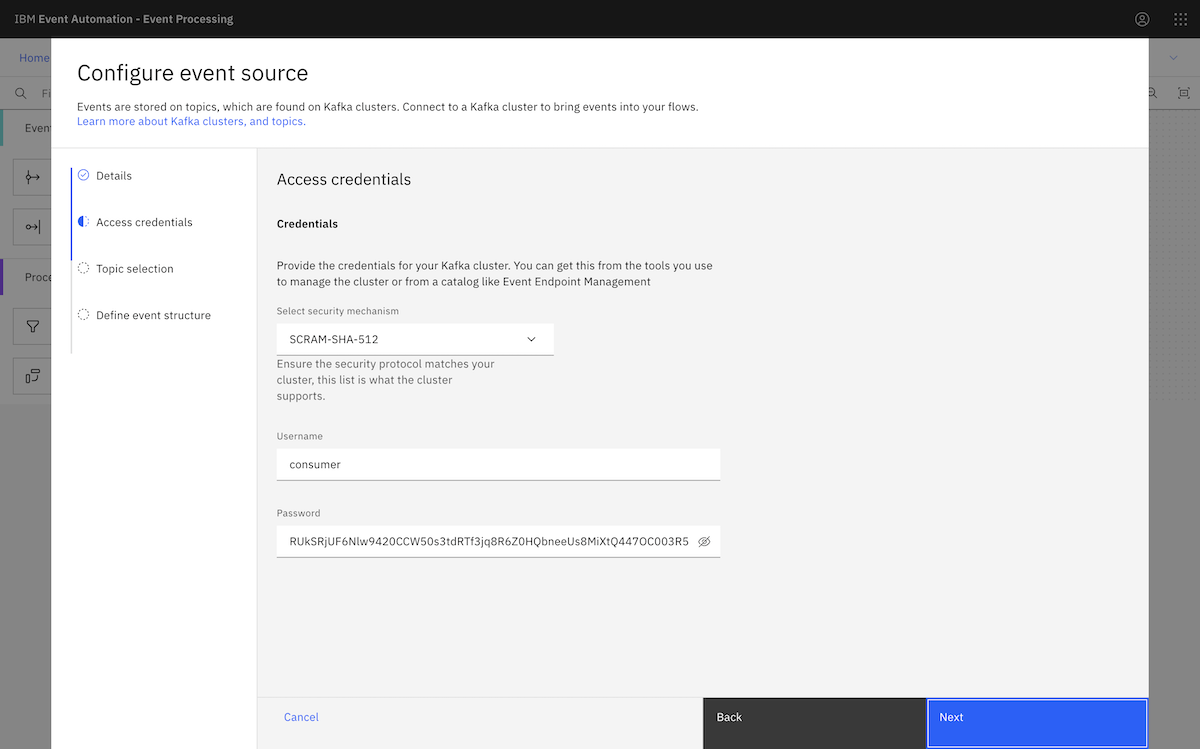

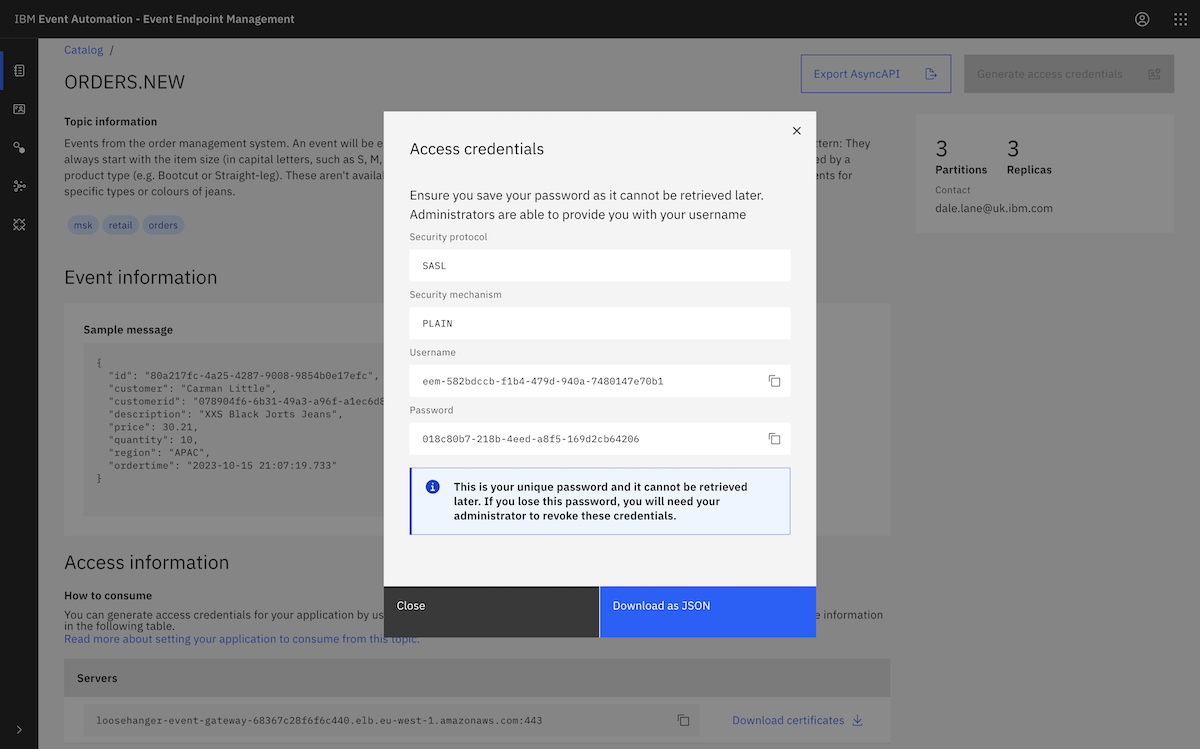

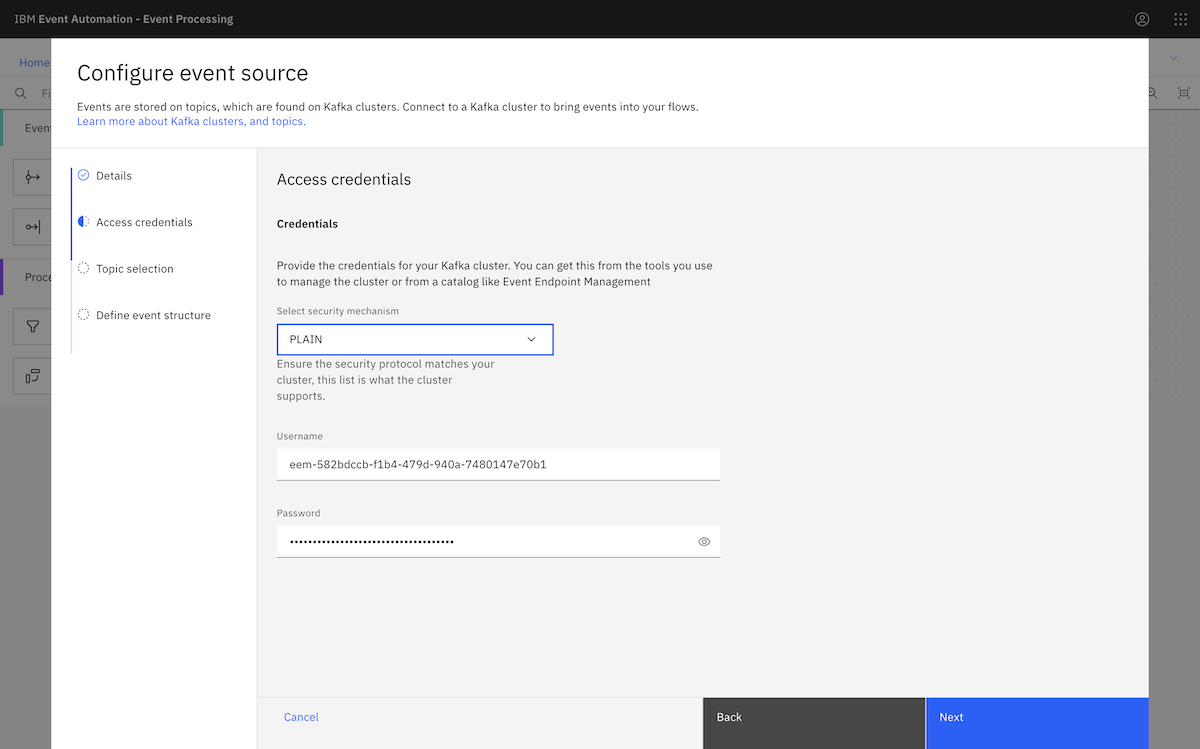

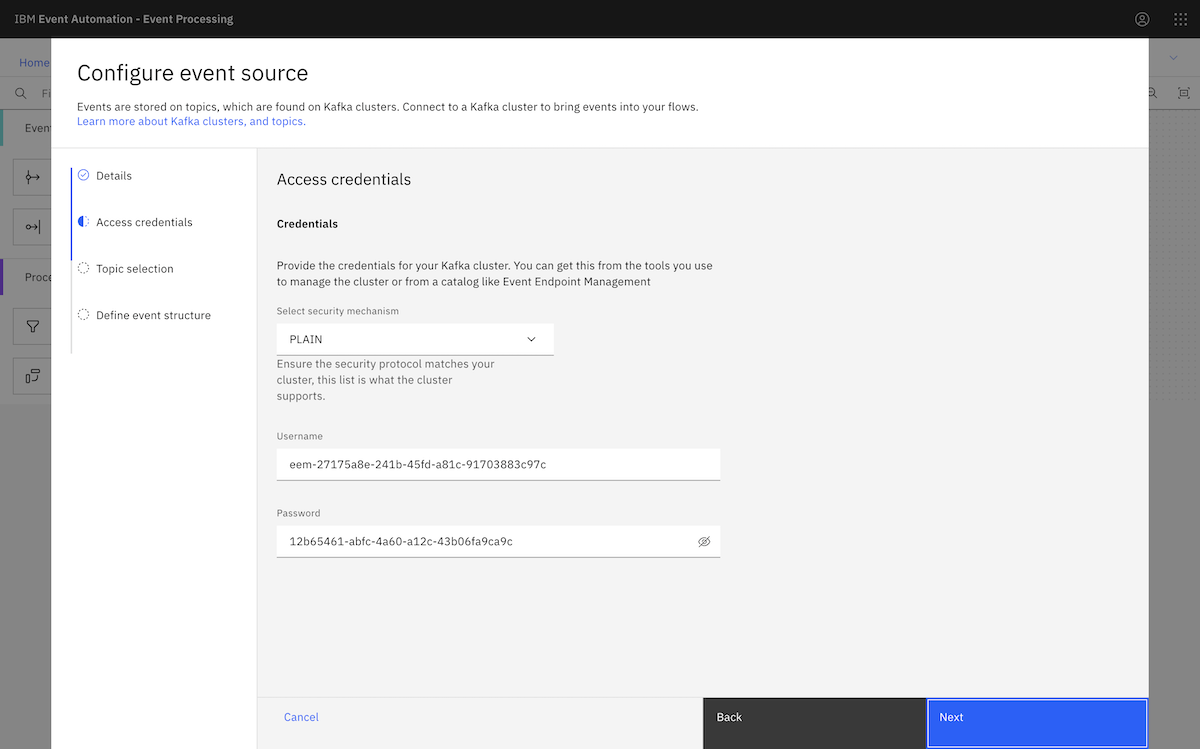

We provided the consumer credentials we created earlier when setting up the MSK cluster.

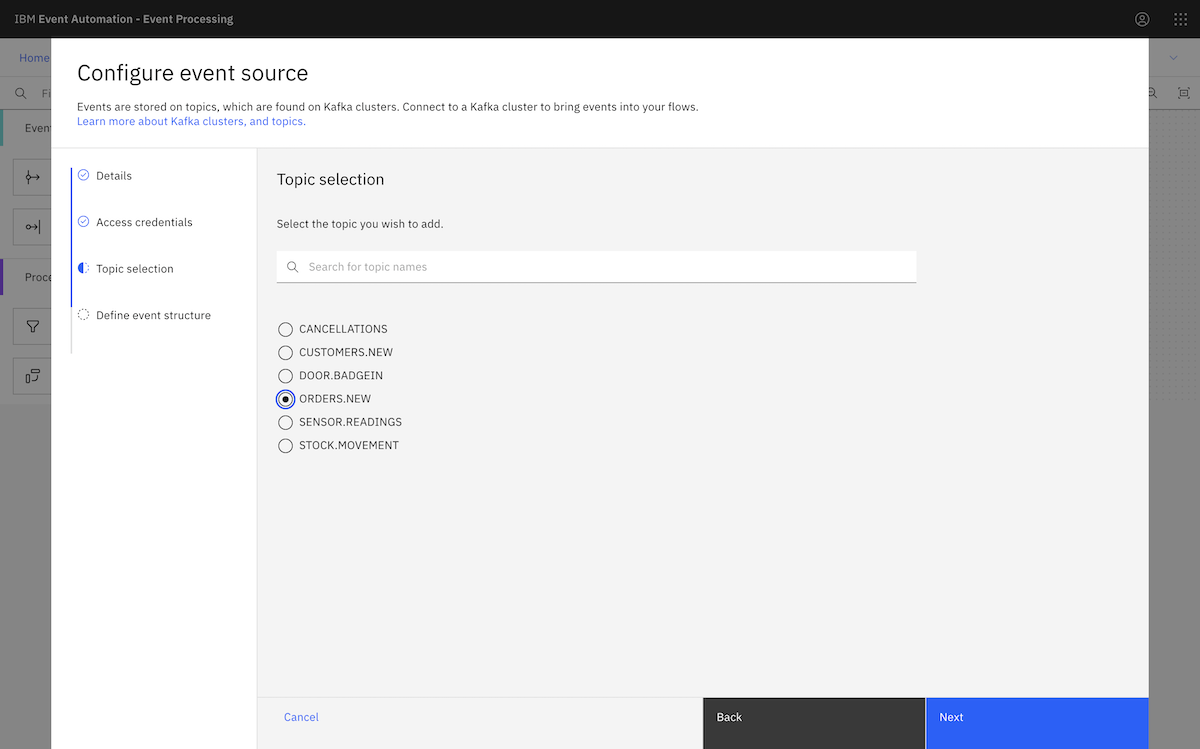

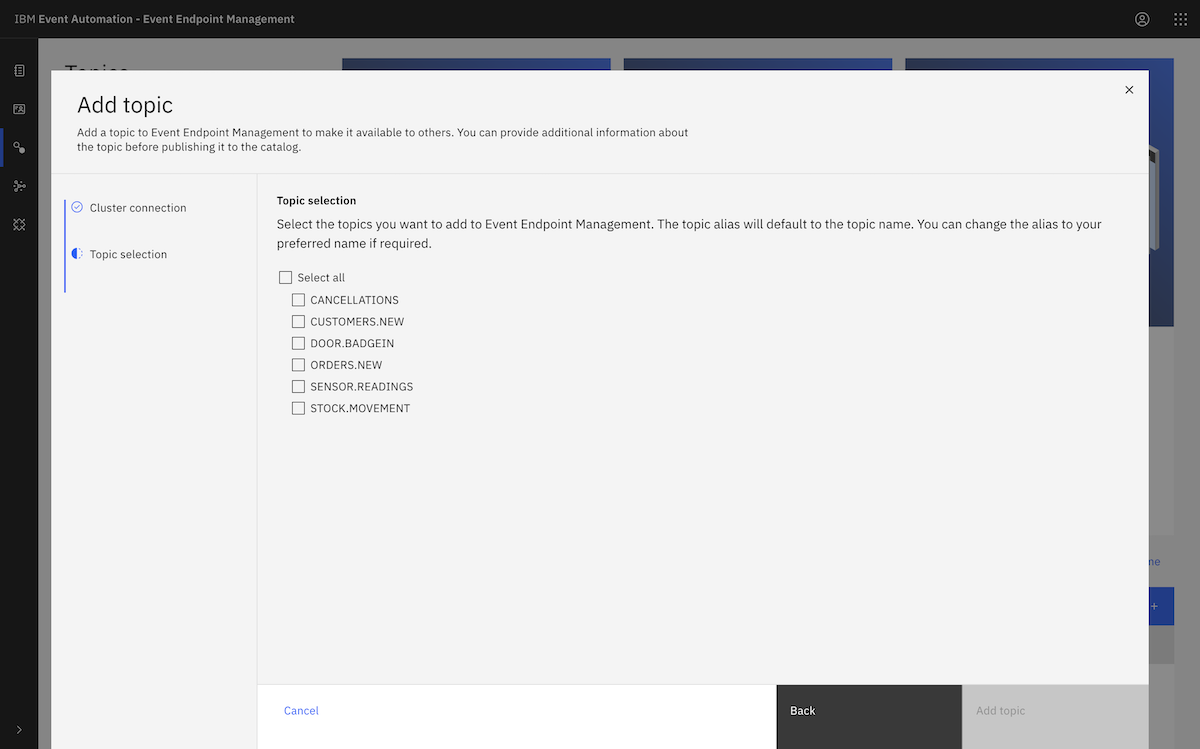

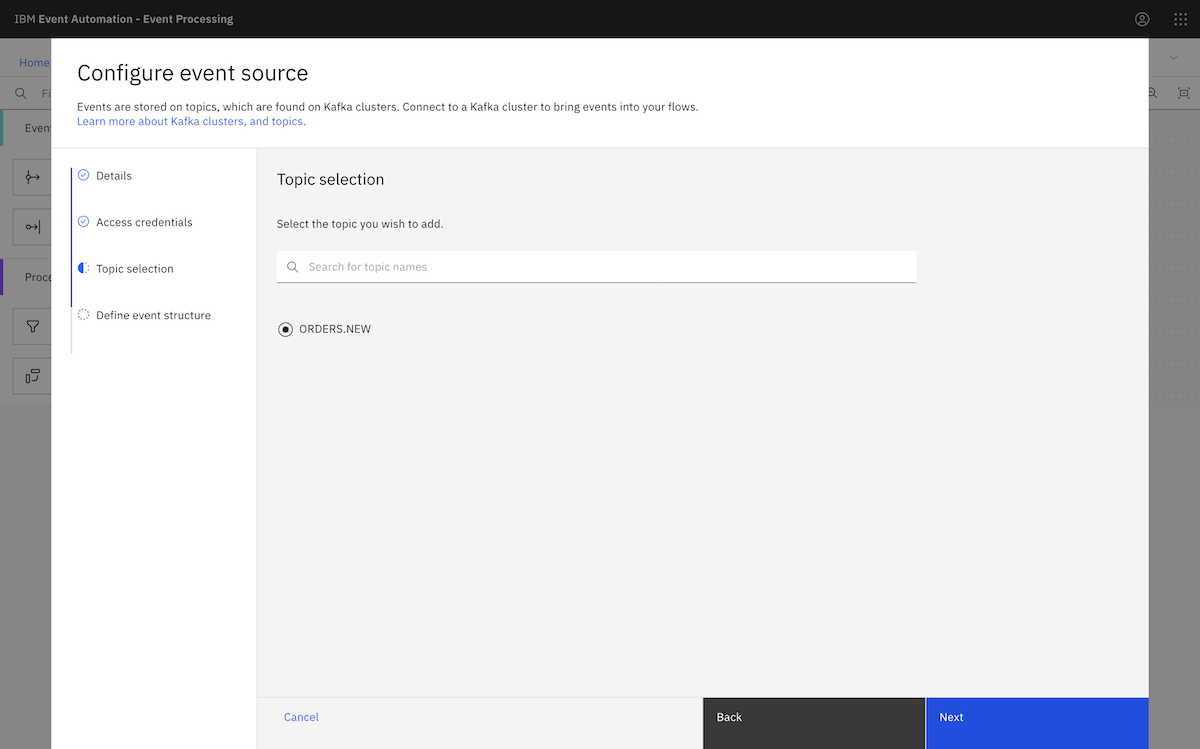

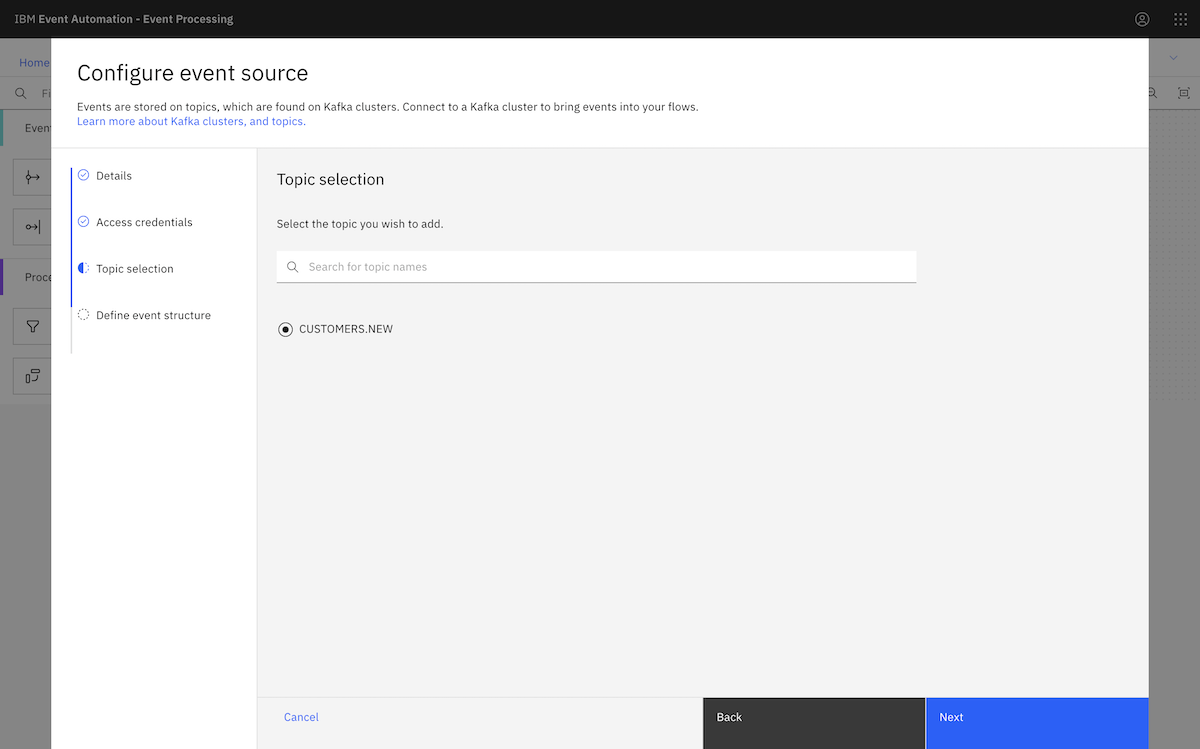

The list of topics displayed in Event Processing matches the list of topics that we configured the consumer user to have access to.

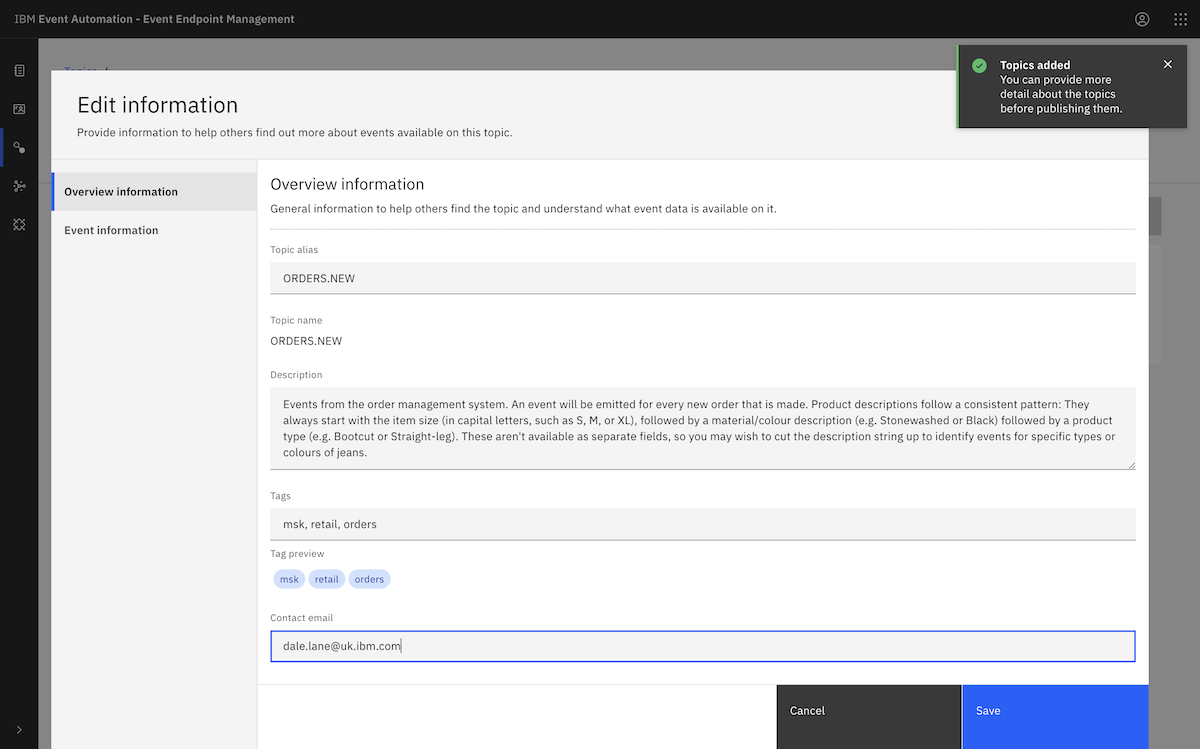

Following the tutorial instructions, we chose the ORDERS.NEW topic.

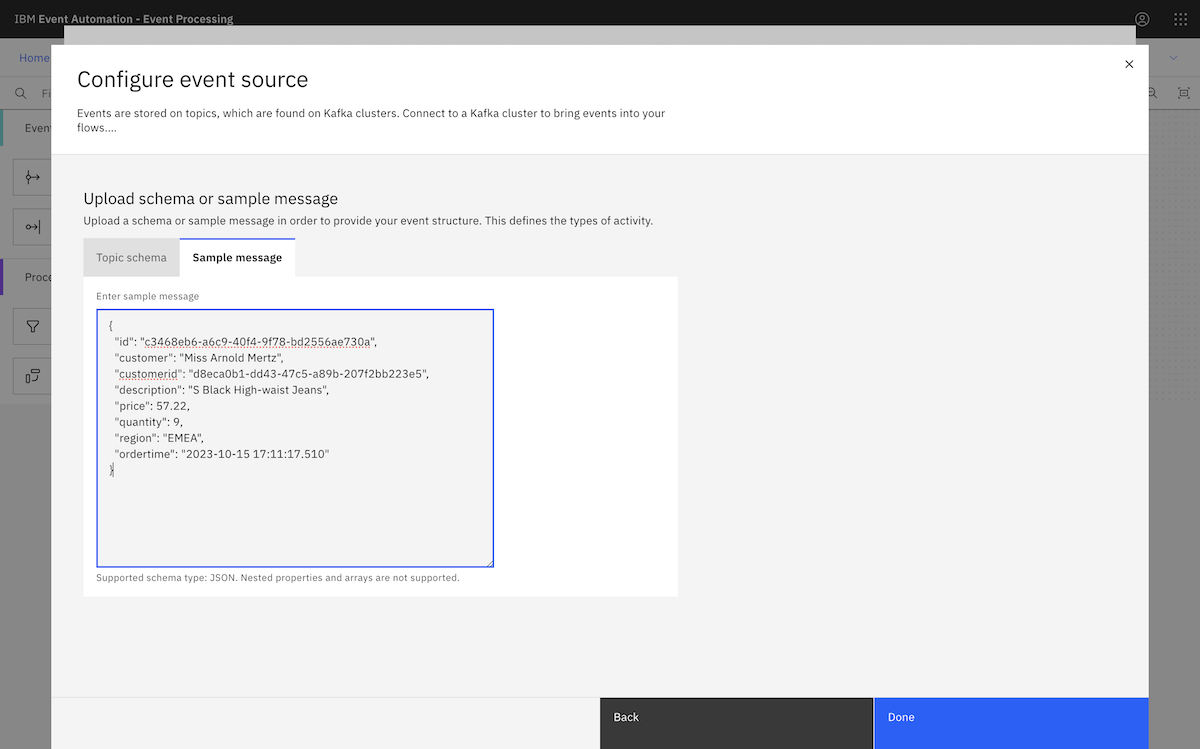

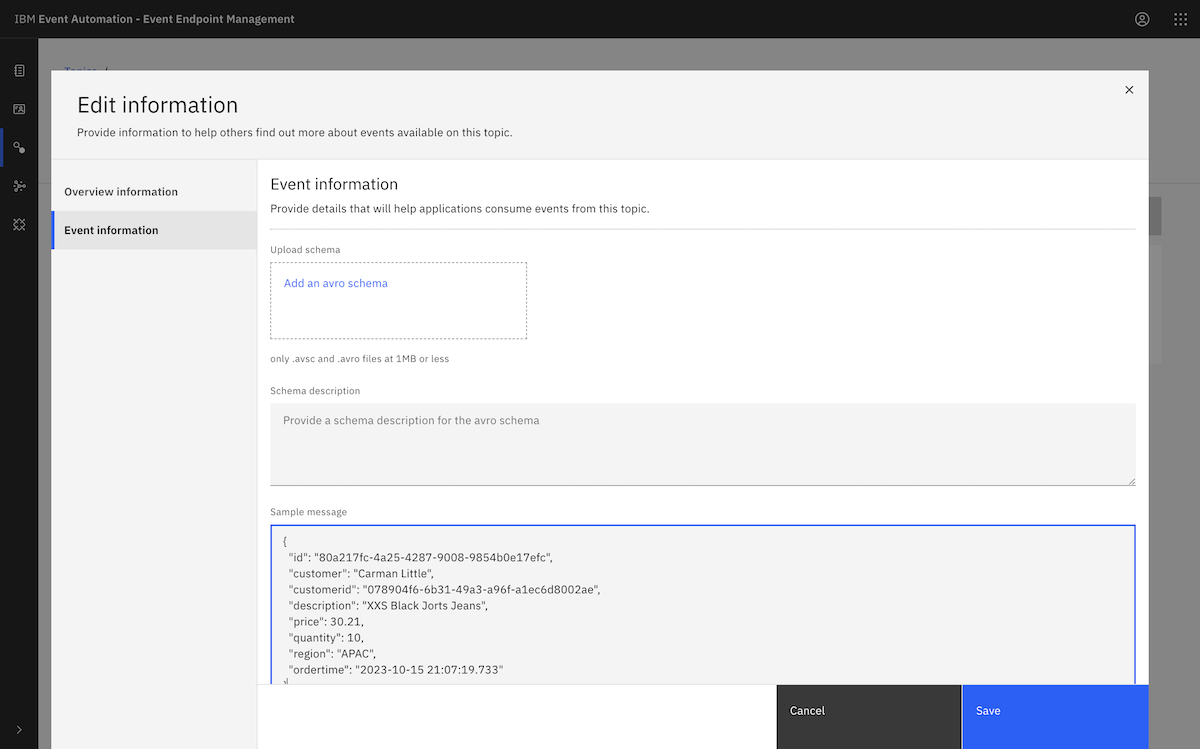

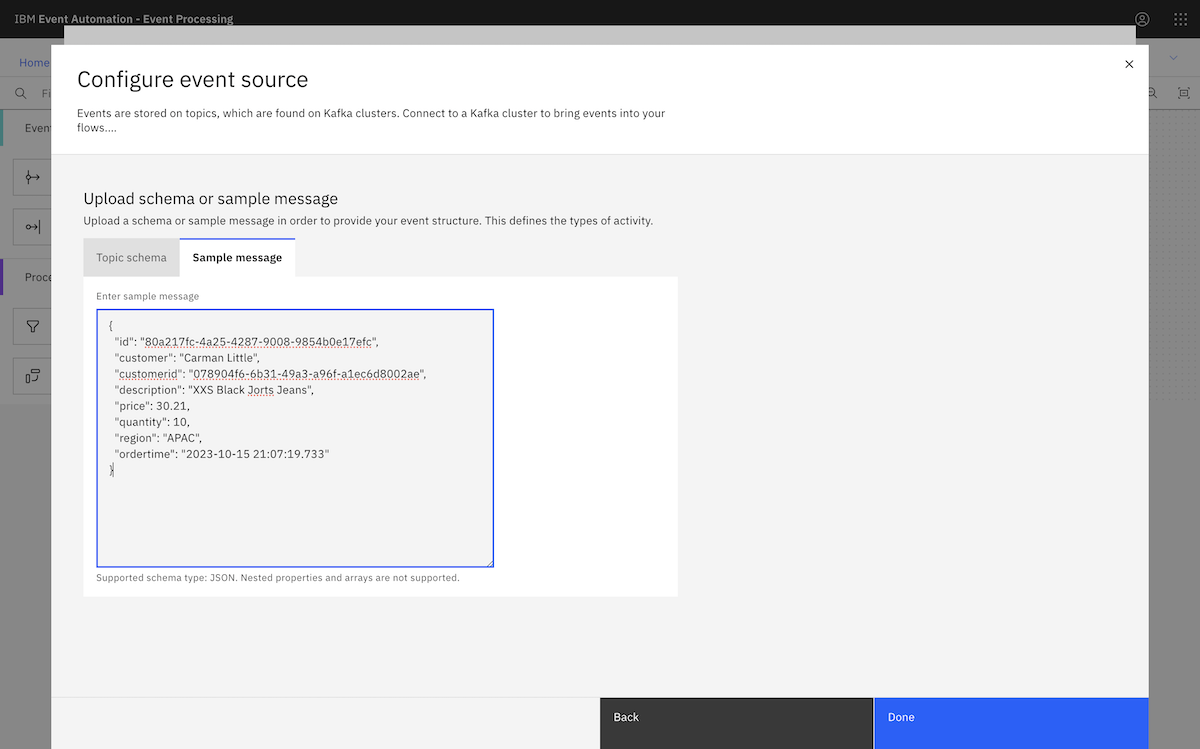

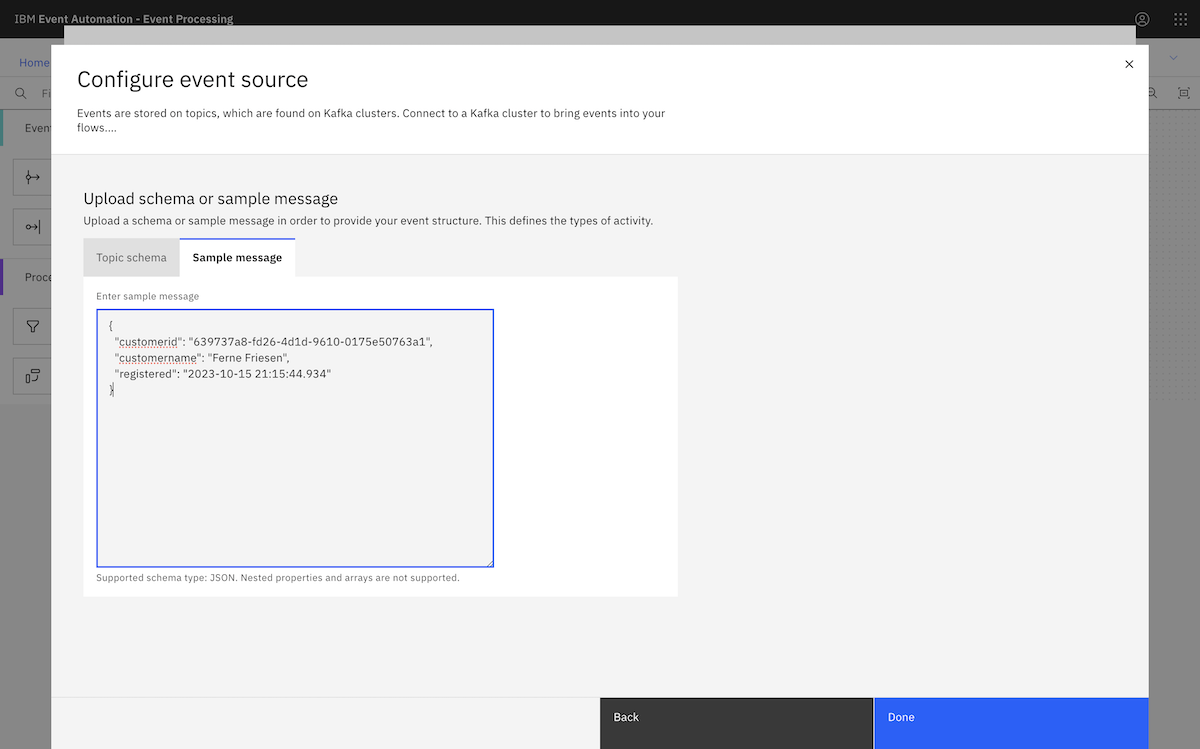

We copied in a sample message from the ORDERS.NEW topic.

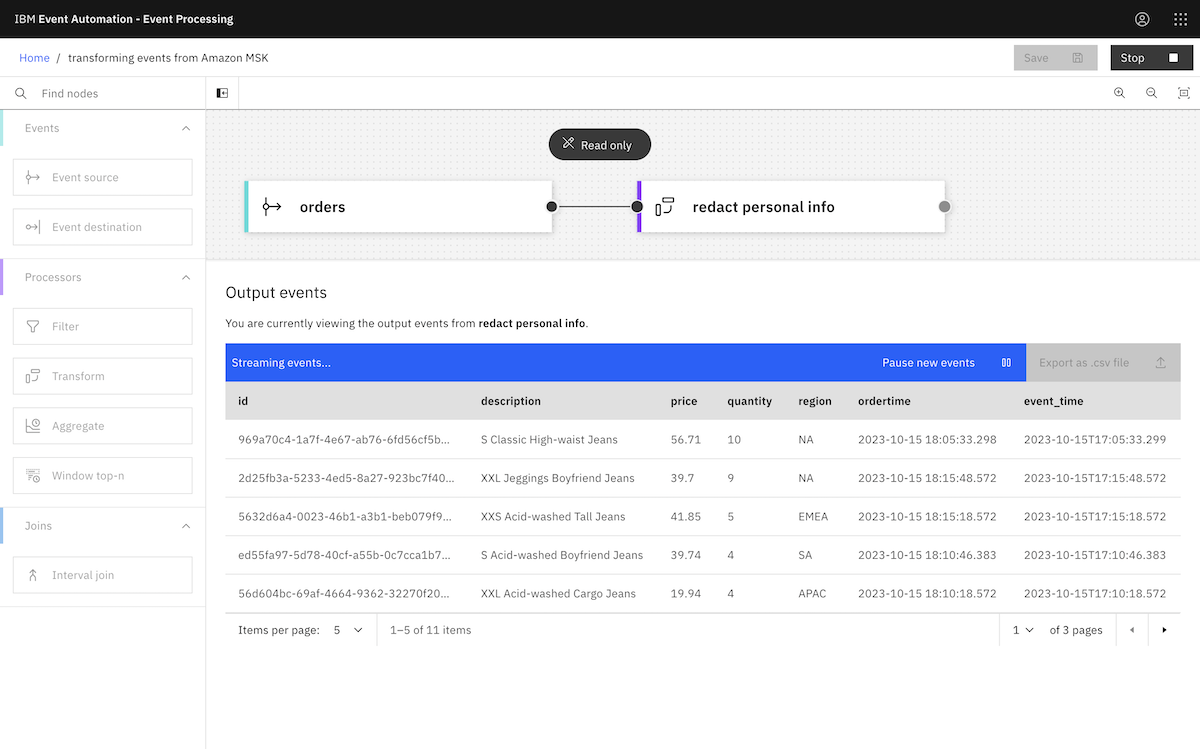

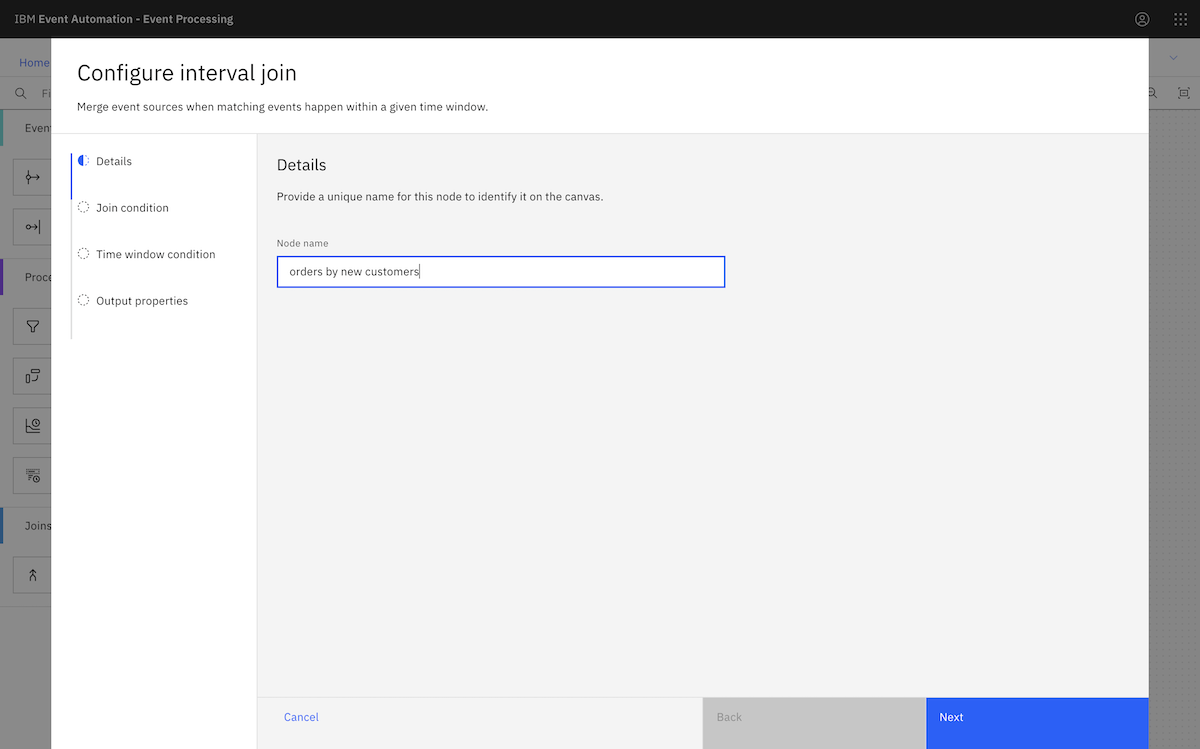

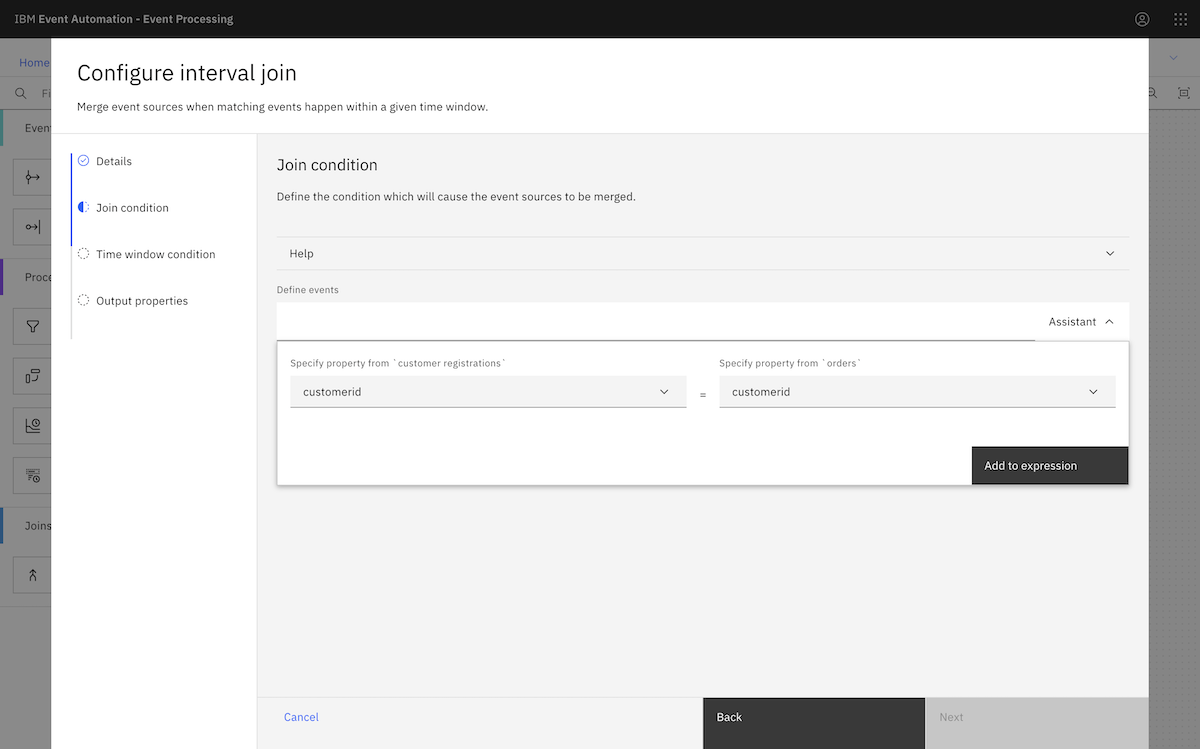

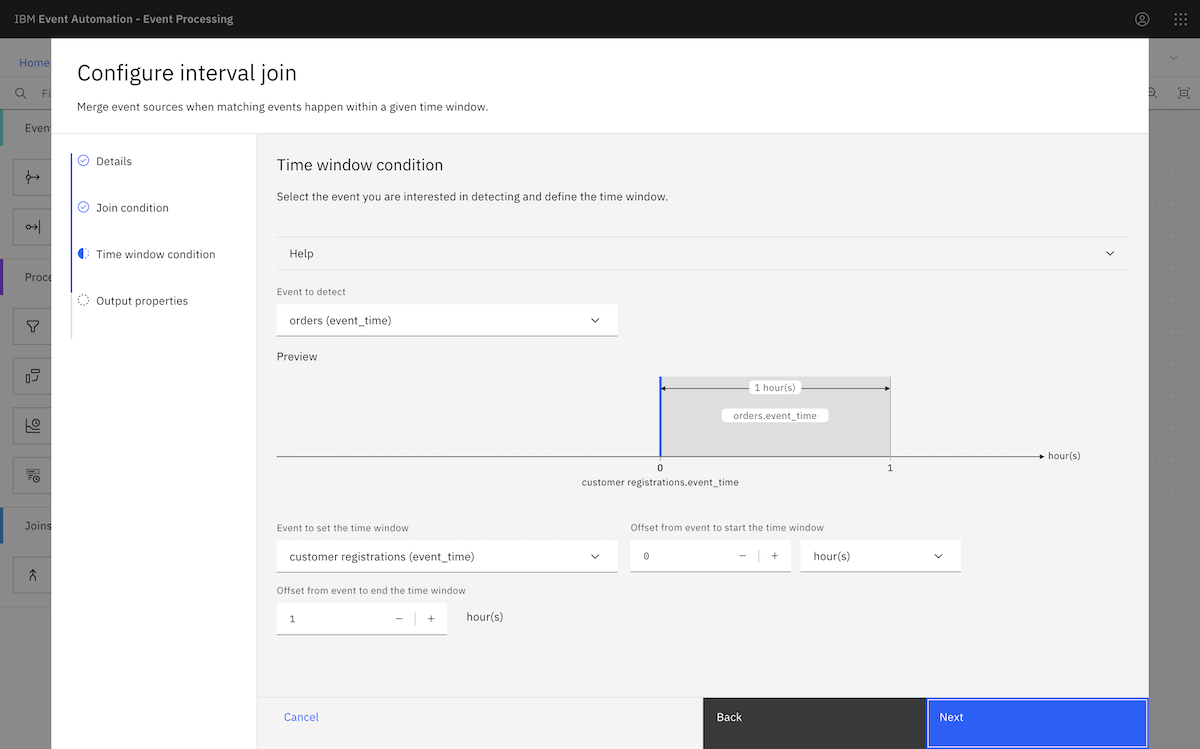

Finally, we created an event processing flow using the events from an MSK topic.

We continued to create the flow as described in the tutorial instructions.

All of the Event Processing tutorials can be followed as written using the Amazon MSK cluster that we created.